Create Customized Gen AI Chatbot

Gathr brings your organization a no-code Gen AI custom chatbot builder. You can now easily create tailored chatbots without any programming skills and enhance customer engagement with advanced AI capabilities.

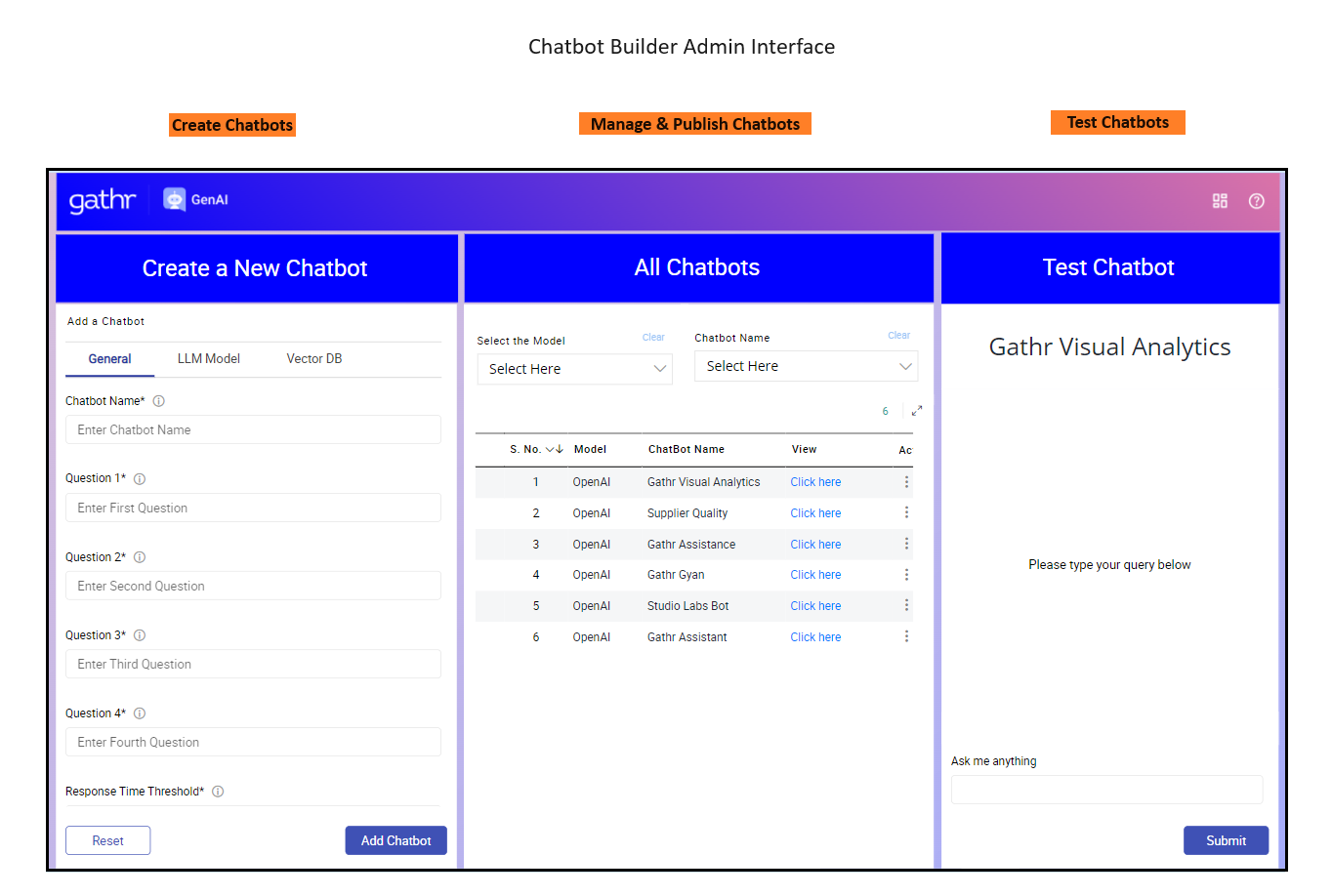

Admin: The Admin is responsible for creating, managing, and deploying chatbots within the organization. They have full control over the chatbot lifecycle and can ensure the chatbot meets the organization’s needs and standards.

- Create Chatbots: Design and configure chatbots from scratch, customizing responses, embedding models, and integrating with databases.

- Publish Chatbots: Deploy chatbots to be accessible by end-users, ensuring they are live and ready for interaction.

- Test Chatbots: Rigorously test chatbots for accuracy, relevance, and performance in a dedicated test environment before going live.

- Manage Chatbots: Update configurations, monitor performance, and make adjustments to ensure optimal functionality.

- User Management: Oversee users who interact with the chatbots, ensuring a smooth and effective user experience.

Users: Users interact with the deployed chatbots to get assistance, find information, or perform tasks. They rely on the chatbots to provide accurate and helpful responses to their queries.

- Query Chatbots: Ask questions and receive instant, relevant responses from the chatbots.

- Access Information: Utilize chatbots to quickly find information, resolve issues, and complete tasks without needing human intervention.

- Enhanced Engagement: Experience improved customer service and support through intelligent and responsive chatbots tailored to their needs.

By catering to both Admins and Users, Gathr’s no-code Gen AI custom chatbot builder ensures that organizations can efficiently create and manage powerful chatbots while providing users with an enhanced, seamless interaction experience.

Admin Interface

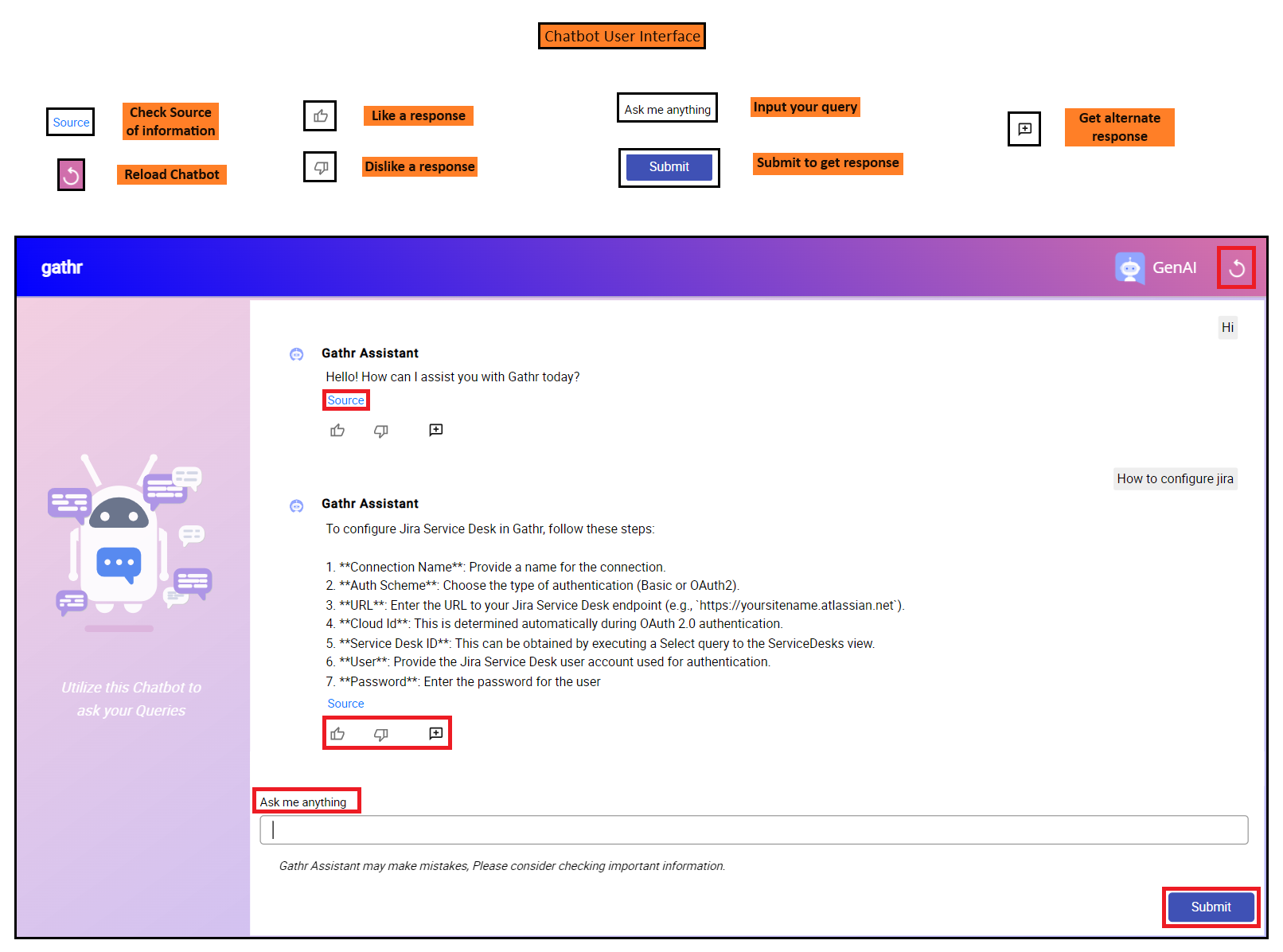

User Interface

Once your chatbots are published, you can interact with them through a simple and user-friendly interface. This section explains how to use the “Ask me anything” input field and the response buttons to get the most out of your chatbot interactions.

Using the Input Query

- Input Query Field: This is where you enter your questions or queries for the chatbot.

- Type your question into the “Ask me anything” input field.

- Click the “Submit” button to send your query to the chatbot.

Interpreting and Responding to Chatbot Replies

When the chatbot provides a response, you have several options to engage further:

Source: This button allows you to investigate the source of the information provided by the chatbot.

- Click the “Source” button to view the origin of the response.

- Use this information to verify the accuracy and credibility of the chatbot’s answer.

Like: Use this button to indicate that you found the chatbot’s response helpful and accurate.

- Click the “Like” button if you are satisfied with the response.

- This feedback helps improve the chatbot’s future interactions.

Dislike: Use this button to indicate that you are not satisfied with the chatbot’s response.

- Click the “Dislike” button if the response was not helpful or accurate.

- This feedback is valuable for improving the chatbot’s performance and accuracy.

Alternate Response: This button prompts the chatbot to provide an alternative answer to your query.

- Click the “Alternate Response” button if you want to see another possible answer.

- The chatbot will generate and display a different response, which may better address your query.

Create your Chatbot

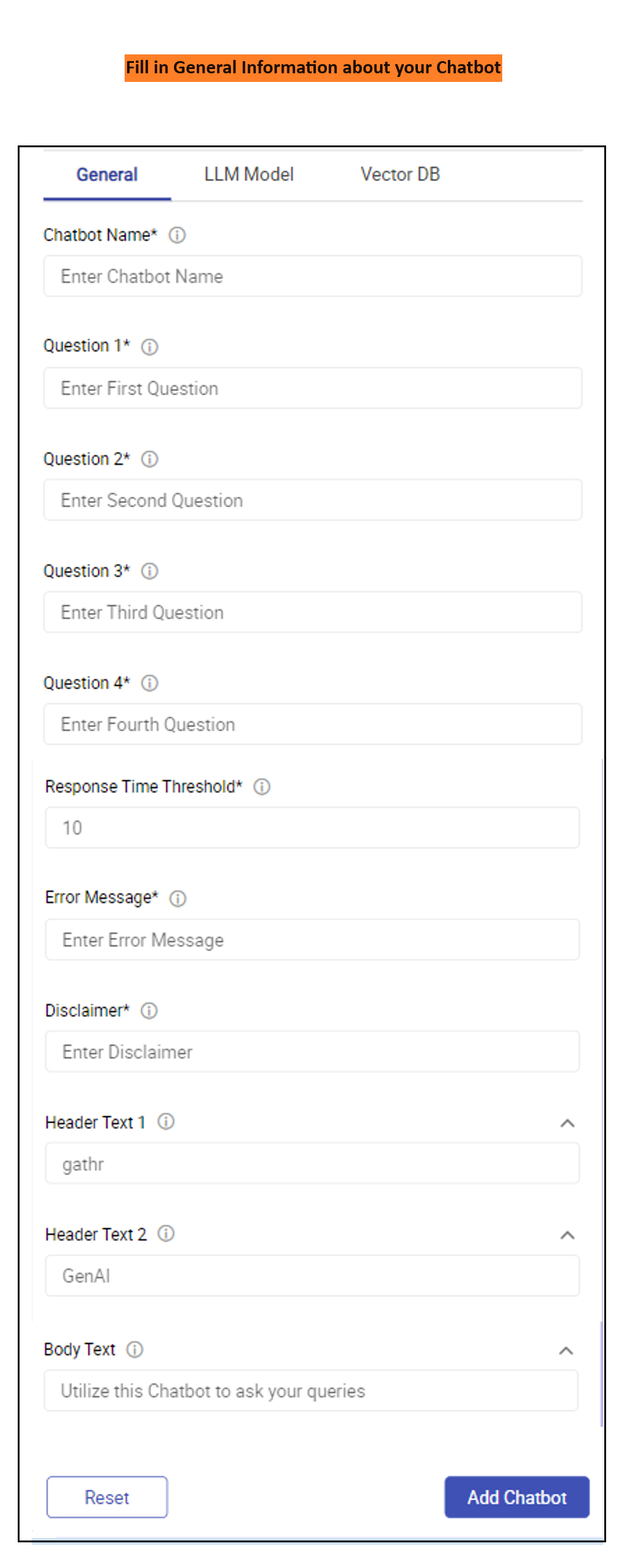

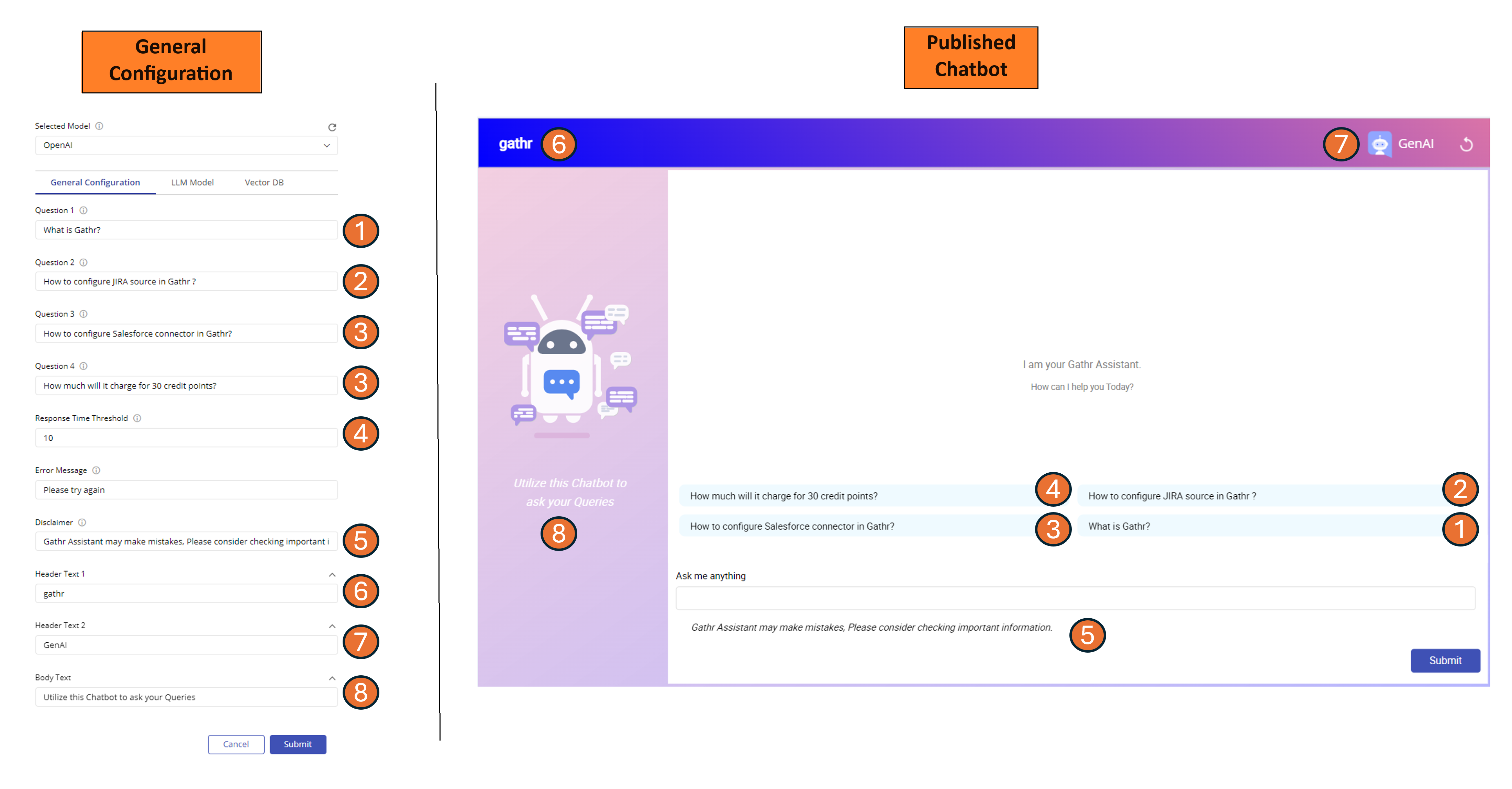

Section 1: General Information

Fill in the following details:

Chatbot Name: Enter a unique and descriptive name for your chatbot. Example: “Customer Support Bot”

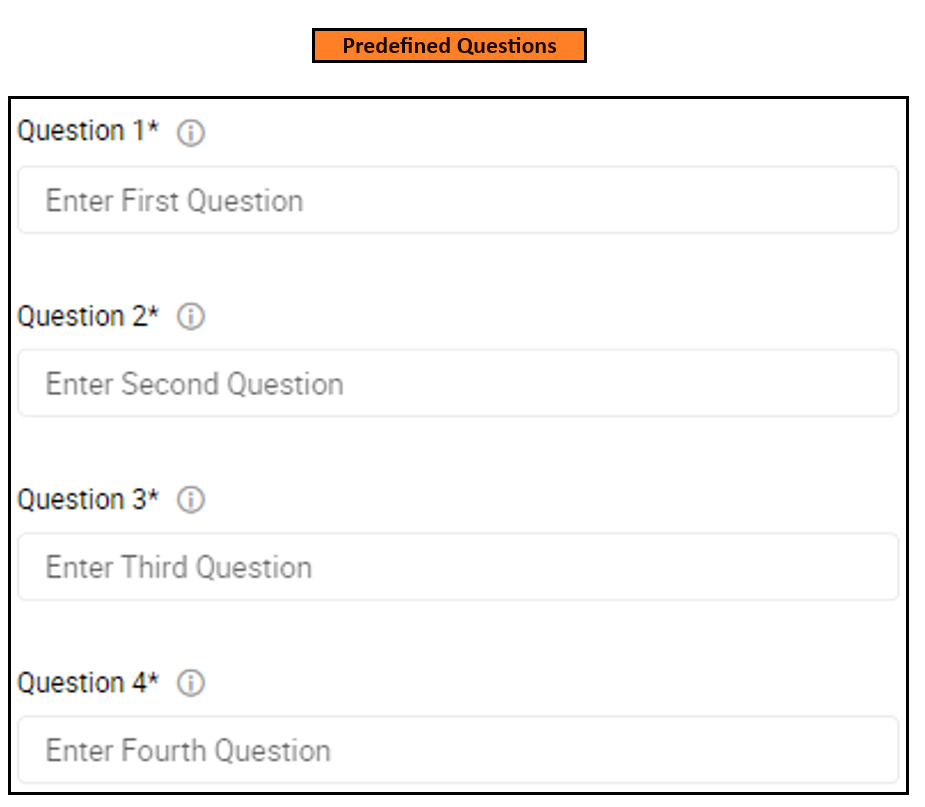

Predefined Questions:

Question 1: Provide the first predefined question for the app users. Example: “How can I reset my password?”

Question 2: Provide the second predefined question for the app users. Example: “What are your hours of operation?”

Question 3: Provide the third predefined question for the app users. Example: “How can I track my order?”

Question 4: Provide the fourth predefined question for the app users. Example: “What is your return policy?”

Response Time Threshold: Set the maximum allowable response time for the chatbot (in seconds). Example: 5

Error Message: Define the error message to be shown to users if something goes wrong. Example: “Sorry, something went wrong. Please try again later.”

Disclaimer: Provide a disclaimer for the chatbot to inform users about its limitations or usage terms. Example: “Chatbot Assistant may make mistakes, please consider checking important information.”

Header Text 1: Enter the name of your organization. Example: “ABC Corp”

Header Text 2: Provide additional header text for the user application. Example: “Welcome to ABC Corp’s Virtual Assistant”

Body Text: Provide the body text for the user application. Example: “Utilize this chatbot to ask your queries and receive instant support.”
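If you keep chatbot definitions under version control or create several similar bots, it can help to capture these fields as structured data and check them before pasting them into the form. The following is a minimal Python sketch, assuming a hypothetical `GeneralInfo` class whose field names mirror the form labels above. It is not Gathr's internal schema, and the checks (a non-empty name and error message, exactly four questions, a positive threshold in seconds) are assumptions drawn from this section rather than documented product rules.

```python
from dataclasses import dataclass


@dataclass
class GeneralInfo:
    # Hypothetical mirror of the Section 1 form fields; not Gathr's actual schema.
    chatbot_name: str
    predefined_questions: list[str]
    response_time_threshold_sec: float
    error_message: str
    disclaimer: str
    header_text_1: str
    header_text_2: str
    body_text: str

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means every check passed."""
        problems = []
        if not self.chatbot_name.strip():
            problems.append("Chatbot Name is required")
        if len(self.predefined_questions) != 4:
            problems.append("expected exactly four predefined questions")
        if self.response_time_threshold_sec <= 0:
            problems.append("Response Time Threshold must be a positive number of seconds")
        if not self.error_message.strip():
            problems.append("Error Message is required")
        return problems


# The example values from this section.
info = GeneralInfo(
    chatbot_name="Customer Support Bot",
    predefined_questions=[
        "How can I reset my password?",
        "What are your hours of operation?",
        "How can I track my order?",
        "What is your return policy?",
    ],
    response_time_threshold_sec=5,
    error_message="Sorry, something went wrong. Please try again later.",
    disclaimer="Chatbot Assistant may make mistakes, please consider checking important information.",
    header_text_1="ABC Corp",
    header_text_2="Welcome to ABC Corp's Virtual Assistant",
    body_text="Utilize this chatbot to ask your queries and receive instant support.",
)
print(info.validate())  # []
```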

Zoom in on the image below to see how the general information appears in your published chatbot.

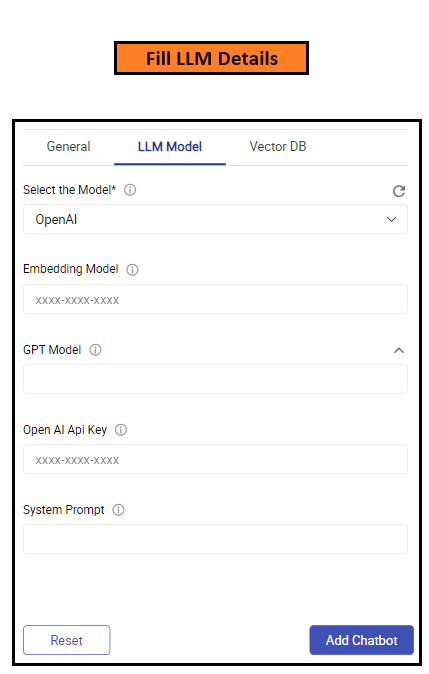

Section 2: LLM Model Configuration

Follow the steps below to configure your LLM model:

- Select the Model: Choose the language model from the dropdown list. Options are “OpenAI” and “Llama”.

Case 1: If “OpenAI” is selected:

a. Embedding Model: Enter the name of the OpenAI embedding model to use. Embeddings are numerical representations of text that can be used to measure the relatedness between two pieces of text. They are useful for search, clustering, recommendations, anomaly detection, and classification tasks.

Example: “text-embedding-ada-002”, “text-embedding-3-small”, “text-embedding-3-large”.

Refer to this URL: Embeddings

b. GPT Model: Enter the GPT model name. Example: “gpt-4”

Refer to this URL: Models

c. OpenAI API Key: Provide the alphanumeric API key for your OpenAI instance. Example: “sk-abcdef1234567890”

Refer to this URL: OpenAI API Key

d. System Prompt: Enter the prompt text to guide the system’s responses.

A system prompt is an instruction or context provided to the chatbot to define its behaviour and responses. A well-crafted system prompt ensures that the chatbot understands its role, the context of interactions, and the tone it should maintain.

Key Elements of a Strong System Prompt

- Define the Role: Clearly specify the role of the chatbot.

- Provide Context: Include relevant context or background information.

- Set the Tone: Indicate the desired tone (formal, friendly, professional, etc.).

- Outline Responsibilities: Describe the tasks or types of queries the chatbot will handle.

- Include Examples: Provide examples of queries and appropriate responses.
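One way to make sure a prompt covers all five elements is to assemble it from labelled parts. The following Python sketch uses a hypothetical `build_system_prompt` helper and borrows the HR scenario from the examples in this section. The output layout is only one reasonable convention; the System Prompt field accepts free text and does not require any particular structure.

```python
def build_system_prompt(role: str, context: str, tone: str,
                        responsibilities: list[str],
                        examples: list[tuple[str, str]]) -> str:
    """Assemble a system prompt from the five key elements (hypothetical helper)."""
    lines = [
        f"You are {role}.",                    # Define the role
        f"Context: {context}",                 # Provide context
        f"Respond in a {tone} tone.",          # Set the tone
        "You handle the following requests:",  # Outline responsibilities
    ]
    lines += [f"- {task}" for task in responsibilities]
    if examples:                               # Include examples
        lines.append("Example exchanges:")
        for question, answer in examples:
            lines.append(f"User: {question}")
            lines.append(f"Assistant: {answer}")
    return "\n".join(lines)


prompt = build_system_prompt(
    role="an HR assistant for ABC Corp",
    context="Employees ask about HR policies, benefits, and leave requests.",
    tone="professional and informative",
    responsibilities=["HR policy questions", "benefits questions", "leave requests"],
    examples=[(
        "What is the vacation policy?",
        "Employees are entitled to 15 days of paid vacation annually. "
        "For more details, please refer to the employee handbook.",
    )],
)
print(prompt)
```

Paste the printed text into the System Prompt field, then refine the wording while testing.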

Let’s explore a few examples of system prompts. Each example builds on the following template, which shows how you can create effective prompts:

“I am [your role], and would like to use the ……”

Example 1: Customer Support Chatbot

“I am a Customer Support Agent and would like to use the chatbot to assist customers with their queries about our products and services. The chatbot should provide polite and helpful responses, ensuring customer satisfaction. For example:

- If a user asks about product availability, respond with: ‘Our products are currently in stock and available for purchase. You can check specific availability on our website.’

- If a user has a complaint, respond with: ‘I’m sorry to hear about your experience. Please provide more details, and I will assist you further.’”

Example 2: HR Information Chatbot

“I am an HR Representative and would like to use the chatbot to answer employees’ questions about HR policies, benefits, and leave requests. The chatbot should be professional and informative. For example:

- If a user asks about vacation policy, respond with: ‘Employees are entitled to 15 days of paid vacation annually. For more details, please refer to the employee handbook.’

- If a user inquires about health benefits, respond with: ‘Our health benefits include medical, dental, and vision coverage. You can find detailed information on the HR portal.’”

Example 3: IT Support Chatbot

“I am an IT Support Specialist and would like to use the chatbot to help users with technical issues and IT-related inquiries. The chatbot should be technical yet approachable. For example:

- If a user reports a software issue, respond with: ‘Please try restarting your computer and updating the software. If the issue persists, provide more details for further assistance.’

- If a user asks about password reset, respond with: ‘To reset your password, visit the IT support page and follow the instructions. If you encounter any problems, let me know.’”

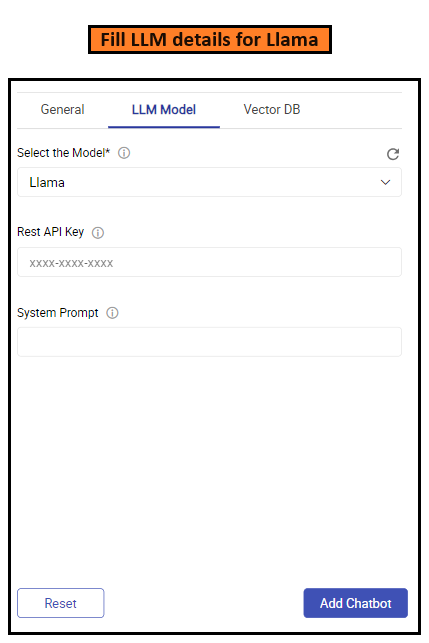
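To make the “relatedness” described under Embedding Model concrete: embedding vectors are commonly compared with cosine similarity, where a score near 1 means two texts point in the same direction in embedding space and a score near 0 means they are unrelated. The following is a minimal, dependency-free Python sketch. The three-dimensional vectors are made-up toy values; real OpenAI embeddings are returned by the API and have far more dimensions (1536 for text-embedding-ada-002).

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Relatedness of two embedding vectors: 1.0 = same direction, 0.0 = orthogonal."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cannot compare a zero vector")
    return dot / (norm_a * norm_b)


# Toy "embeddings" for three texts (illustrative values only).
reset_password = [0.9, 0.1, 0.0]
forgot_login = [0.8, 0.2, 0.1]
shipping_rates = [0.0, 0.1, 0.9]

print(cosine_similarity(reset_password, forgot_login))
print(cosine_similarity(reset_password, shipping_rates))
```

The first score comes out much higher than the second, which is how a retrieval step can tell that a password question is closer to a login question than to a shipping question.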

Case 2: If “Llama” is selected:

a. REST API Key: Provide the alphanumeric API key for your Llama instance. Example: “llama-abcdef1234567890”

Refer to this URL: Get Llama API

b. System Prompt: Enter the prompt text to guide the system’s responses. Example: “You are a knowledgeable chatbot designed to assist with various queries.”
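The two cases ask for different fields. The following Python sketch shows how you might check a saved configuration for completeness before testing it. The field names are hypothetical and simply mirror the form labels above; the check reflects only the fields listed in this guide, not any validation Gathr itself performs.

```python
# Hypothetical field names that mirror the form labels in this section.
REQUIRED_FIELDS = {
    "OpenAI": ["embedding_model", "gpt_model", "openai_api_key", "system_prompt"],
    "Llama": ["rest_api_key", "system_prompt"],
}


def missing_llm_fields(config: dict) -> list[str]:
    """Return the required fields that are absent or blank for the selected model."""
    model = config.get("model")
    if model not in REQUIRED_FIELDS:
        raise ValueError(f"unsupported model {model!r}; expected one of {sorted(REQUIRED_FIELDS)}")
    return [name for name in REQUIRED_FIELDS[model]
            if not str(config.get(name) or "").strip()]


openai_config = {
    "model": "OpenAI",
    "embedding_model": "text-embedding-3-small",
    "gpt_model": "gpt-4",
    "openai_api_key": "sk-abcdef1234567890",  # placeholder from this guide, not a real key
    "system_prompt": "You are a knowledgeable chatbot designed to assist with various queries.",
}
llama_config = {"model": "Llama", "rest_api_key": "llama-abcdef1234567890"}

print(missing_llm_fields(openai_config))  # []
print(missing_llm_fields(llama_config))   # ['system_prompt']
```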

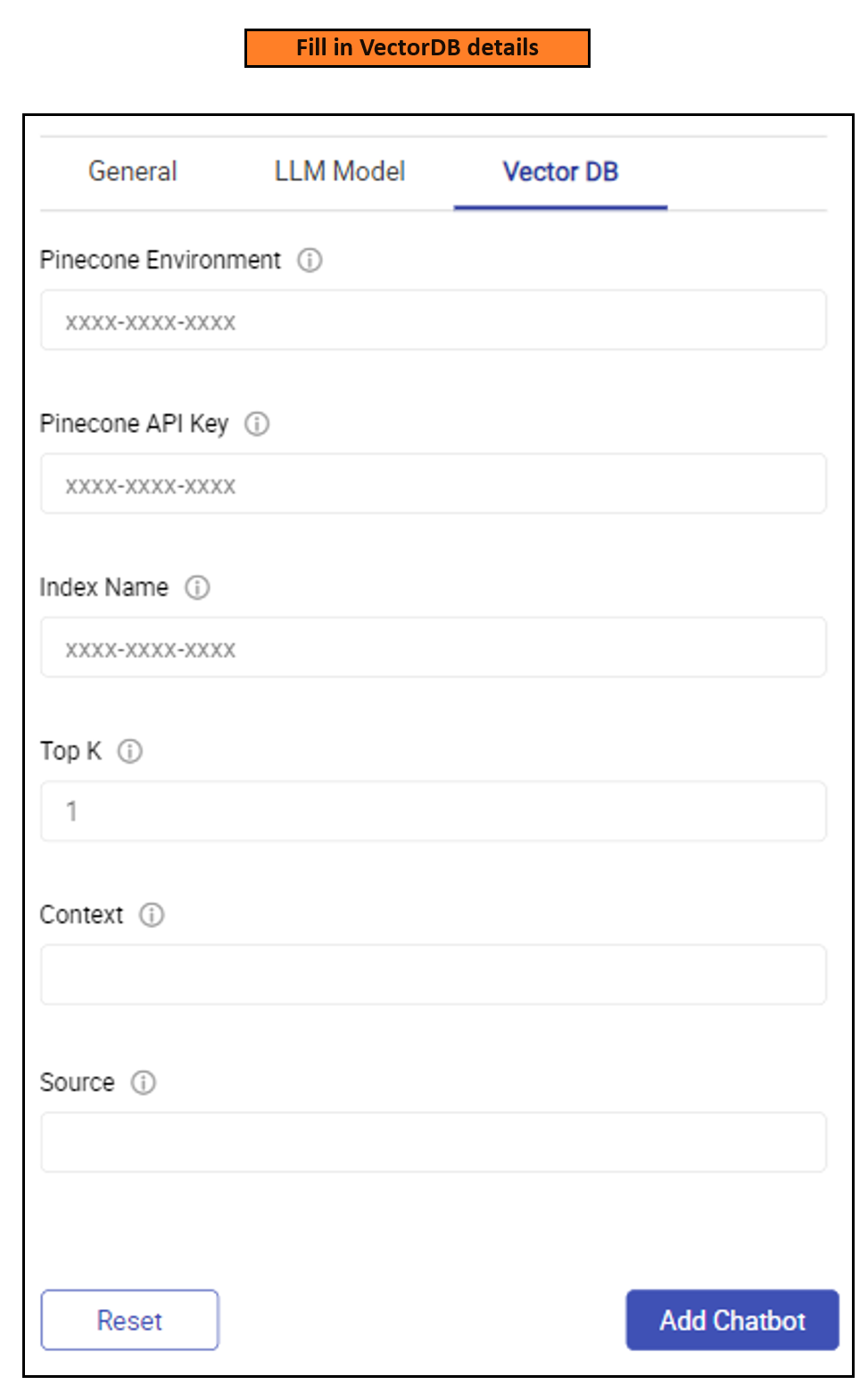

Section 3: Vector DB Configuration

Follow the steps below to configure your vector database:

- Pinecone Environment: Enter the name of your Pinecone environment. Example: “production”

To find your Pinecone environment, use the ‘list_indexes’ operation, which returns a list of all indexes in a Pinecone project along with their environment details.

To learn more, refer to this URL: Pinecone environment

- Pinecone API Key: Provide the API key for your Pinecone database. Example: “pc-abcdef1234567890”

Refer to this URL: Get Pinecone API key

- Index Name: Provide the name of the Pinecone index. Example: “chatbot-index”

To learn more about indexes in Pinecone, refer to this URL: Pinecone List Indexes and Pinecone Index Management

- Top K: Specify the maximum number of most relevant records to retrieve for each query.

Top K is a parameter used in Pinecone queries to specify the maximum number of results to return. For example, set ‘top_k=3’ to get the 3 most relevant matches or ‘top_k=10’ to get the 10 most relevant matches.

The ‘top_k’ parameter lets you control how many results are returned, which is useful when you only need a few highly relevant results versus when you need a larger set of results.

Pinecone returns up to ‘top_k’ results; if fewer than ‘top_k’ records match the query, it returns all the matching results. Example: 3

For more information, refer to: Pinecone Top-K

- Context: Enter the JSON key that holds the context in the Pinecone response. Each match in the response includes a “metadata” field, a set of key-value pairs that stores additional information about the vector, such as the original sentences that produced it. Provide the key under which that text is stored. Example: “context”

- Source: Enter the JSON key in the Pinecone response that holds the source information. Example: “source”
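Top K, Context, and Source work together at query time: the index returns up to `top_k` matches, and the chatbot reads the context text and the source from each match's metadata. The following Python sketch illustrates that flow without a Pinecone account. It is an assumption-based illustration, not Pinecone's or Gathr's implementation: the in-memory `index`, the `query_top_k` and `extract_context_and_sources` helpers, and all record values are hypothetical; the response dictionary only imitates the `matches`/`id`/`score`/`metadata` shape of Pinecone query results; and it scores with a dot product for brevity, whereas a Pinecone index can be configured for cosine, dot product, or Euclidean distance.

```python
import heapq


def query_top_k(query: list[float], index: list[dict], top_k: int) -> dict:
    """Return a Pinecone-style response with up to top_k matches, highest score first."""
    if top_k < 1:
        raise ValueError("top_k must be at least 1")
    scored = [
        {"id": rec["id"],
         "score": sum(q * v for q, v in zip(query, rec["values"])),  # dot-product score
         "metadata": rec["metadata"]}
        for rec in index
    ]
    # nlargest returns every item, sorted, when there are fewer than top_k.
    return {"matches": heapq.nlargest(top_k, scored, key=lambda m: m["score"])}


def extract_context_and_sources(response: dict, context_key: str = "context",
                                source_key: str = "source") -> tuple[str, list[str]]:
    """Join the matched passages and collect unique sources in rank order."""
    passages, sources = [], []
    for match in response.get("matches", []):
        metadata = match.get("metadata") or {}
        if context_key in metadata:
            passages.append(str(metadata[context_key]))
        source = metadata.get(source_key)
        if source and source not in sources:  # keep first-seen order, skip duplicates
            sources.append(source)
    return "\n\n".join(passages), sources


# Made-up records; real vectors come from your embedding model.
index = [
    {"id": "faq-12", "values": [1.0, 0.0],
     "metadata": {"context": "Items can be returned within 30 days.", "source": "returns-policy.pdf"}},
    {"id": "faq-13", "values": [0.8, 0.2],
     "metadata": {"context": "Refunds go to the original payment method.", "source": "returns-policy.pdf"}},
    {"id": "faq-40", "values": [0.0, 1.0],
     "metadata": {"context": "Orders ship within 2 business days.", "source": "shipping.pdf"}},
]

response = query_top_k([1.0, 0.0], index, top_k=2)
context, sources = extract_context_and_sources(response)
print([m["id"] for m in response["matches"]])  # ['faq-12', 'faq-13']
print(sources)                                 # ['returns-policy.pdf']
print(len(query_top_k([1.0, 0.0], index, top_k=10)["matches"]))  # 3
```

Asking for `top_k=10` against three records returns all three, matching the “up to top_k” behaviour described above. Both top matches come from the same file, so the source list is deduplicated to a single entry.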

By following these steps, you can efficiently configure your custom Gen AI chatbot.

Make sure to double-check each input field for accuracy. Once configured, your chatbot will be ready to assist users with predefined questions, provide timely responses, and handle errors gracefully.

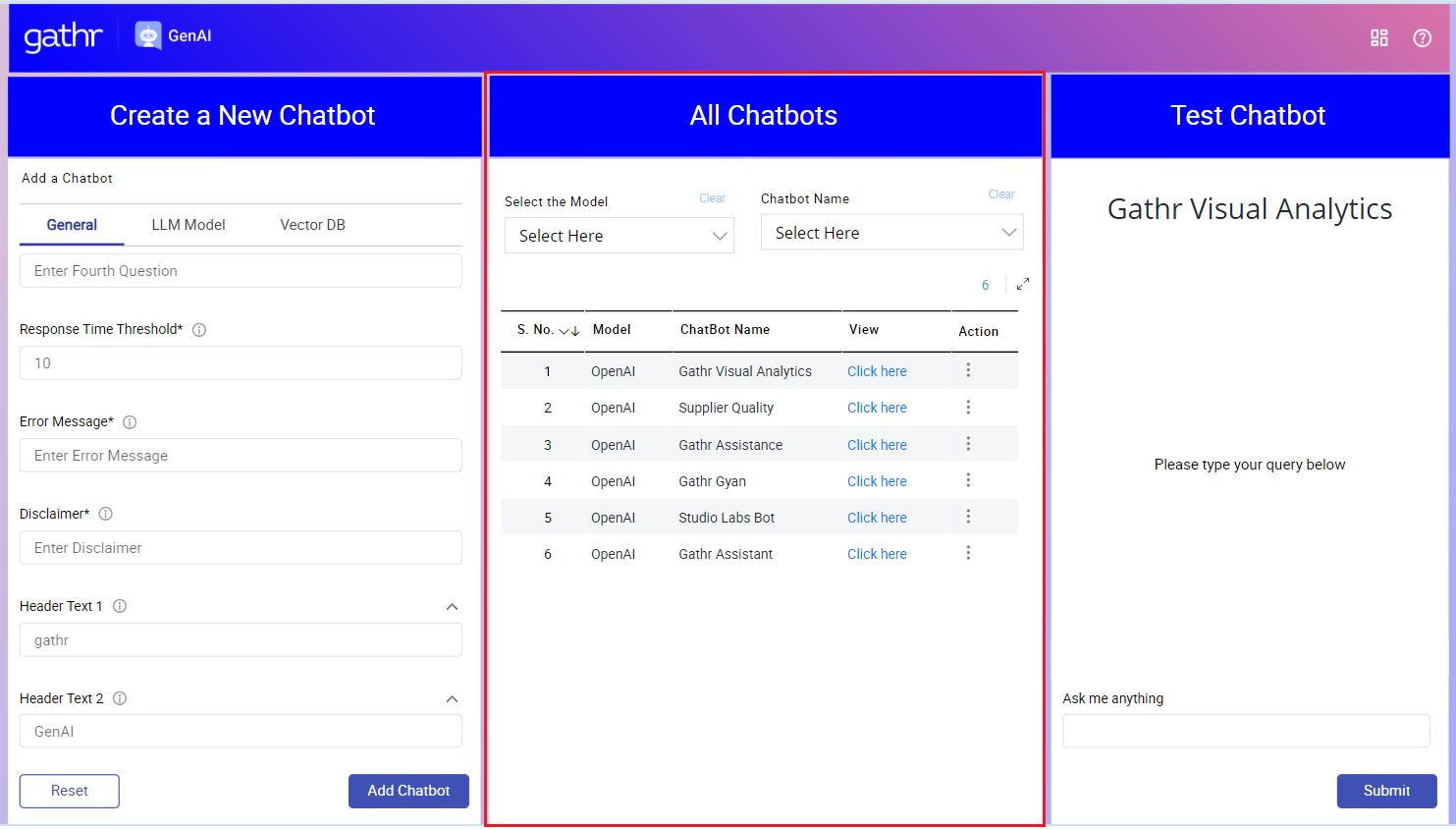

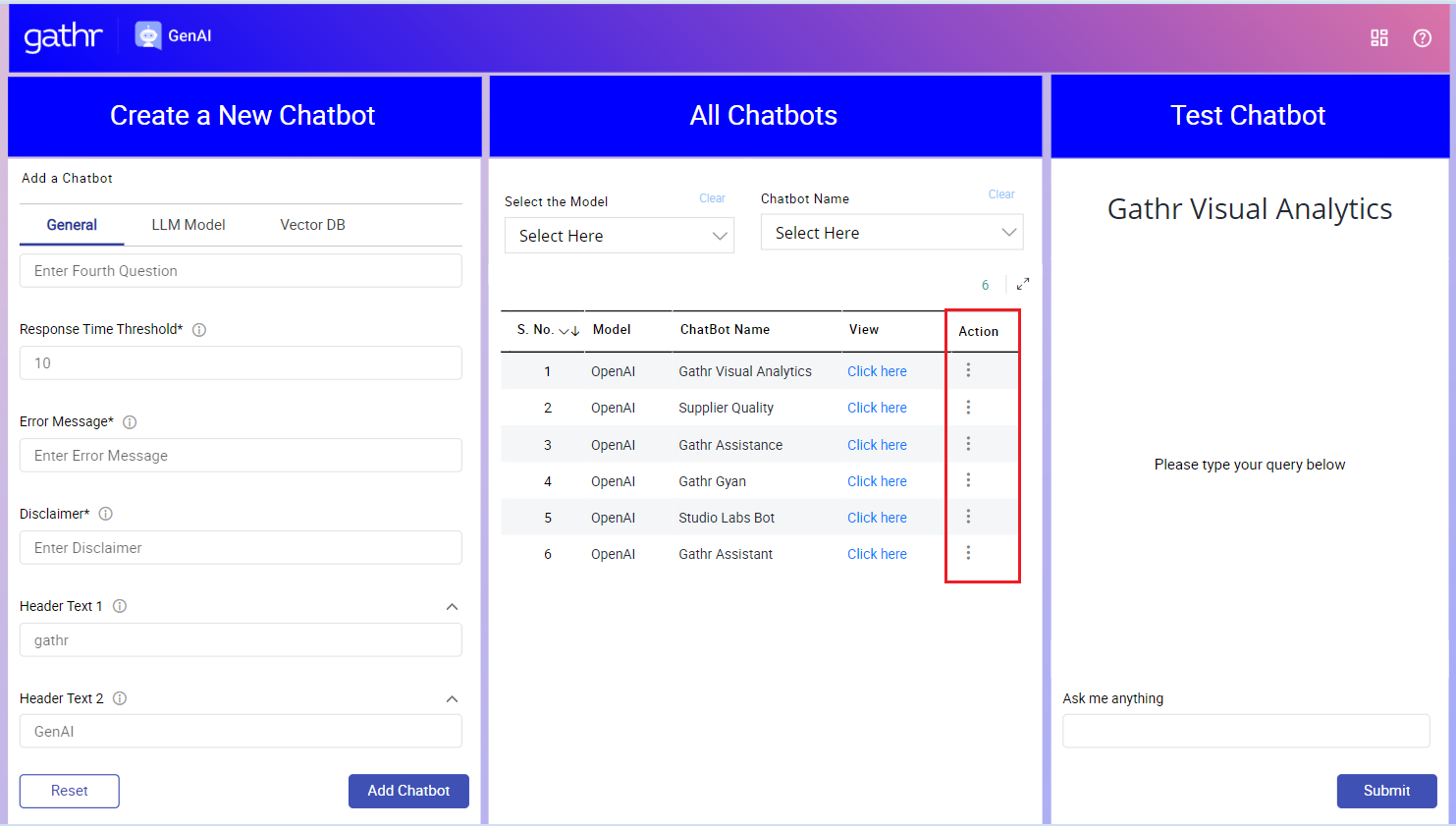

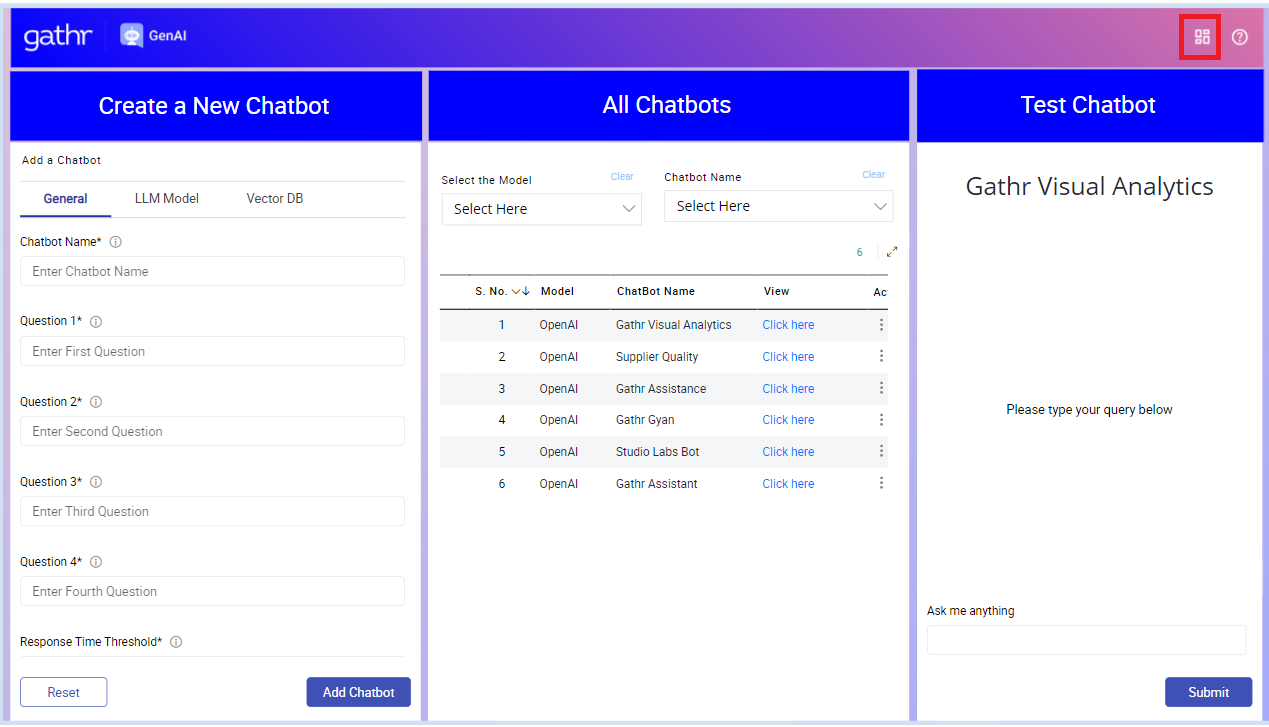

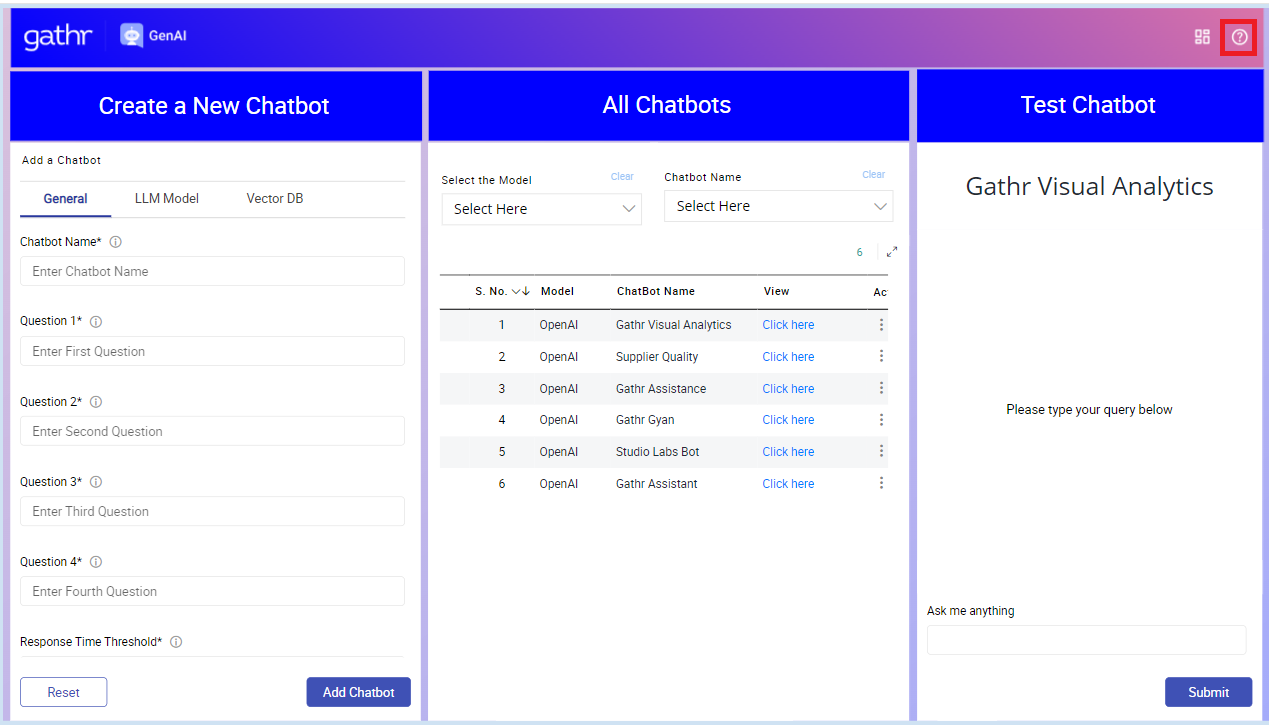

All Chatbots

The “All Chatbots” section in the application provides administrators with a comprehensive overview and management interface for all the chatbots they have created.

Choose the Chatbots

The “All Chatbots” section includes two slicers and a detailed list of chatbots. Here’s how to use each feature:

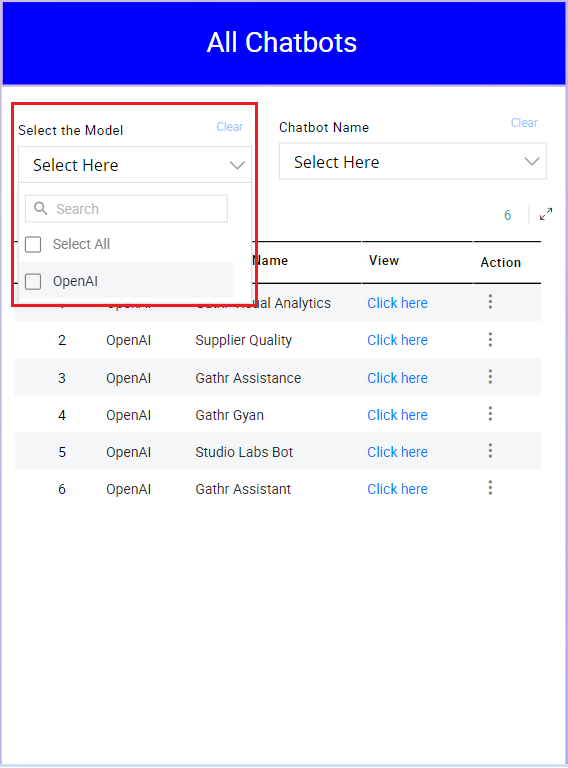

Select the Model:

This slicer allows you to filter the list of chatbots based on the LLM models used.

- Click on the “Select the Model” slicer.

- Choose one or more models from the list (e.g., OpenAI, Llama).

The list of chatbots will update to show only those created with the selected models.

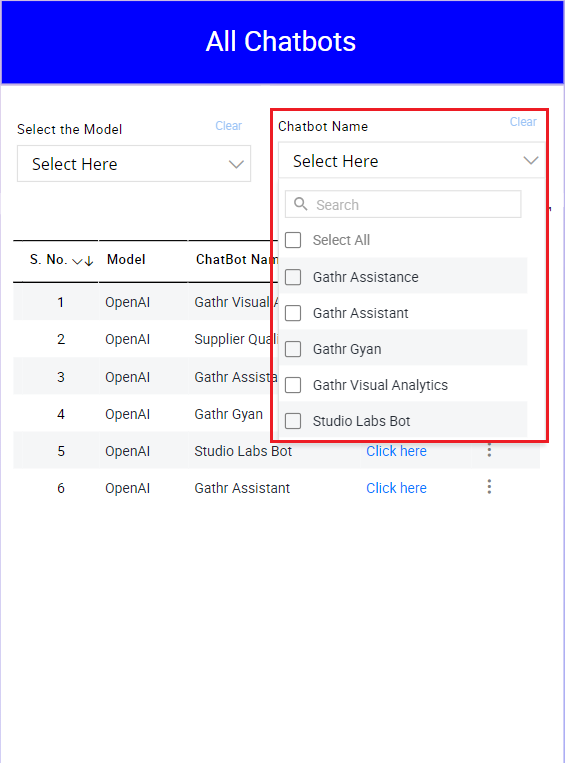

Chatbot Name:

This slicer enables you to filter the list by specific chatbot names.

- Click on the “Chatbot Name” slicer.

- Select one or multiple chatbot names from the list.

The list will refresh to display only the selected chatbots.

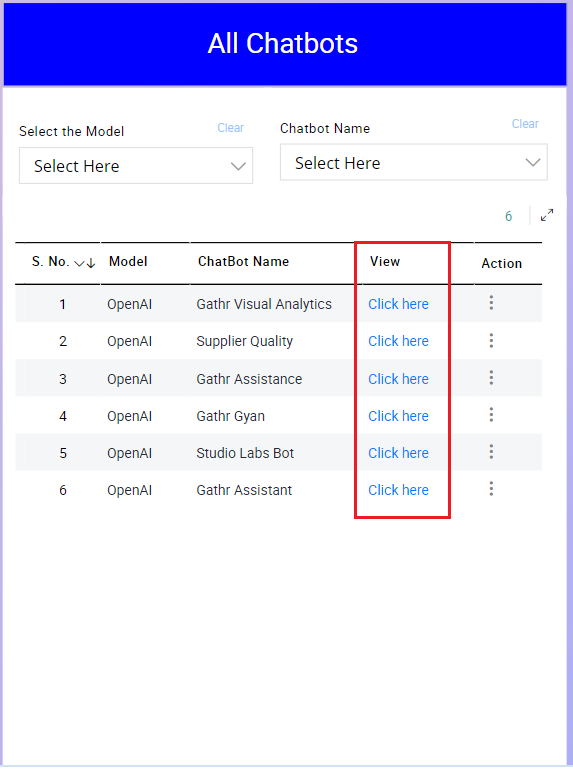

Chatbot List

The list of chatbots includes the following columns:

- Model: Indicates the LLM model (e.g., OpenAI, Llama) used to create the chatbot.

- Chatbot Name: The name assigned to the chatbot during its creation.

- View: Contains a clickable link labelled “Click here” to view the chatbot.

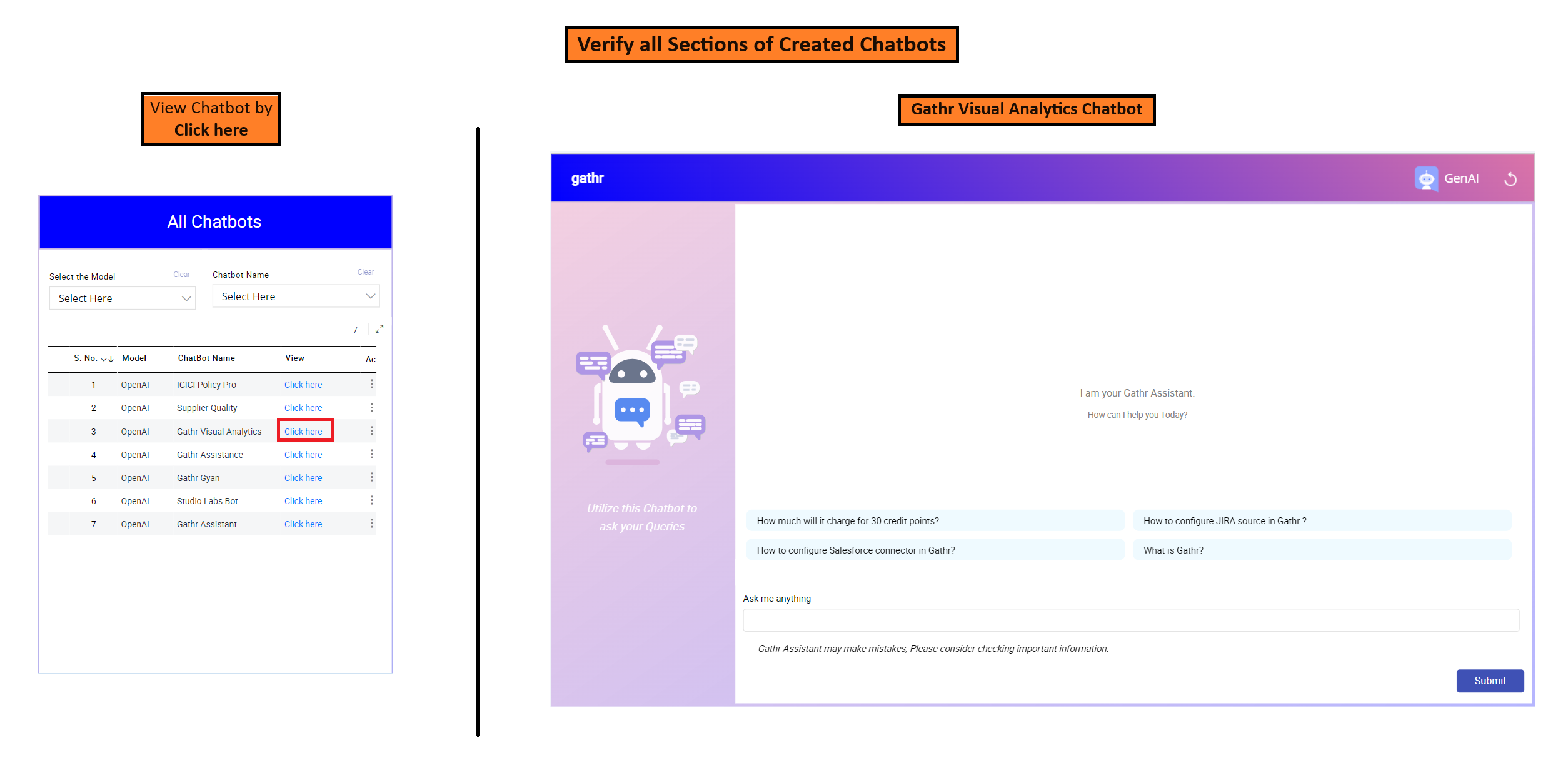

Zoom in on the images below to see how your chatbot appears when it opens in a new tab.

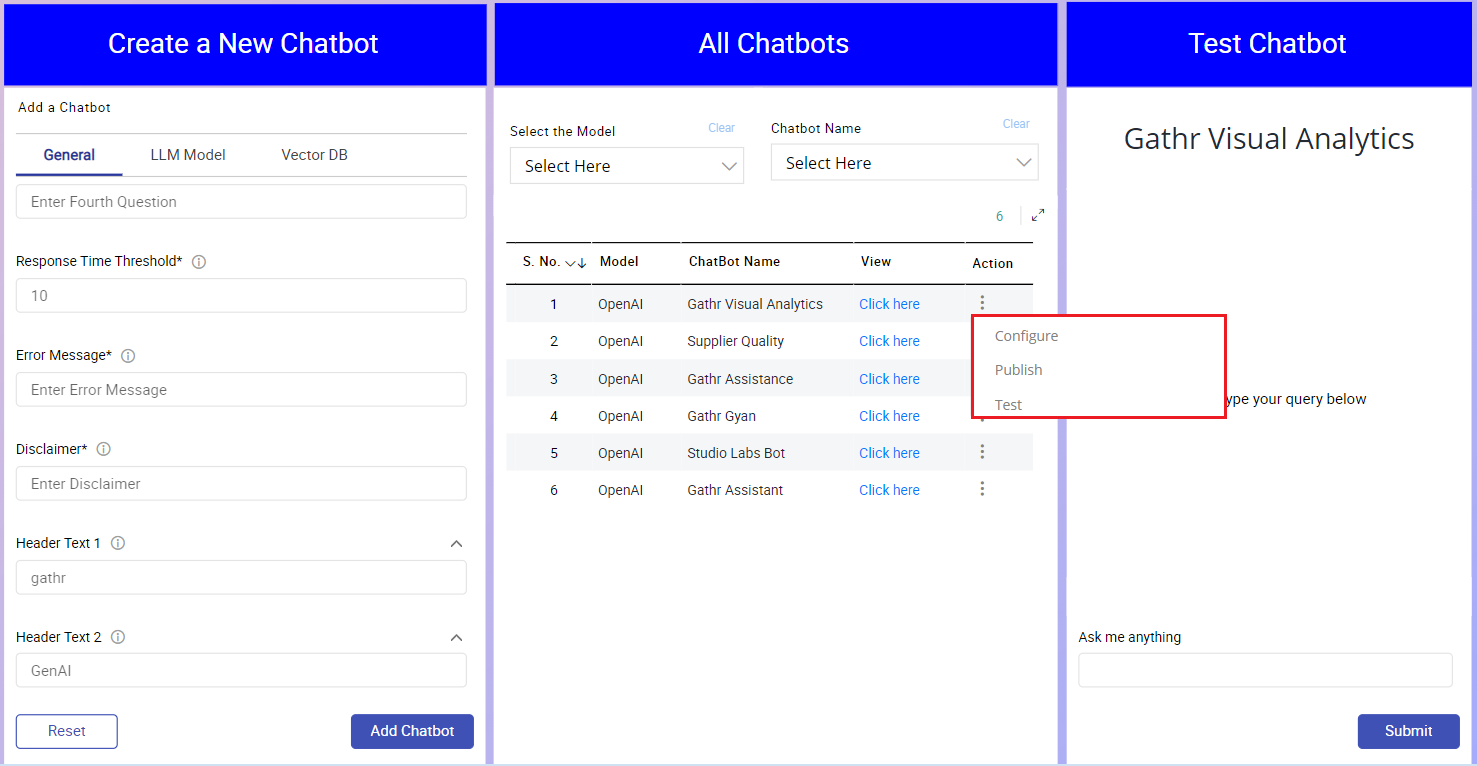

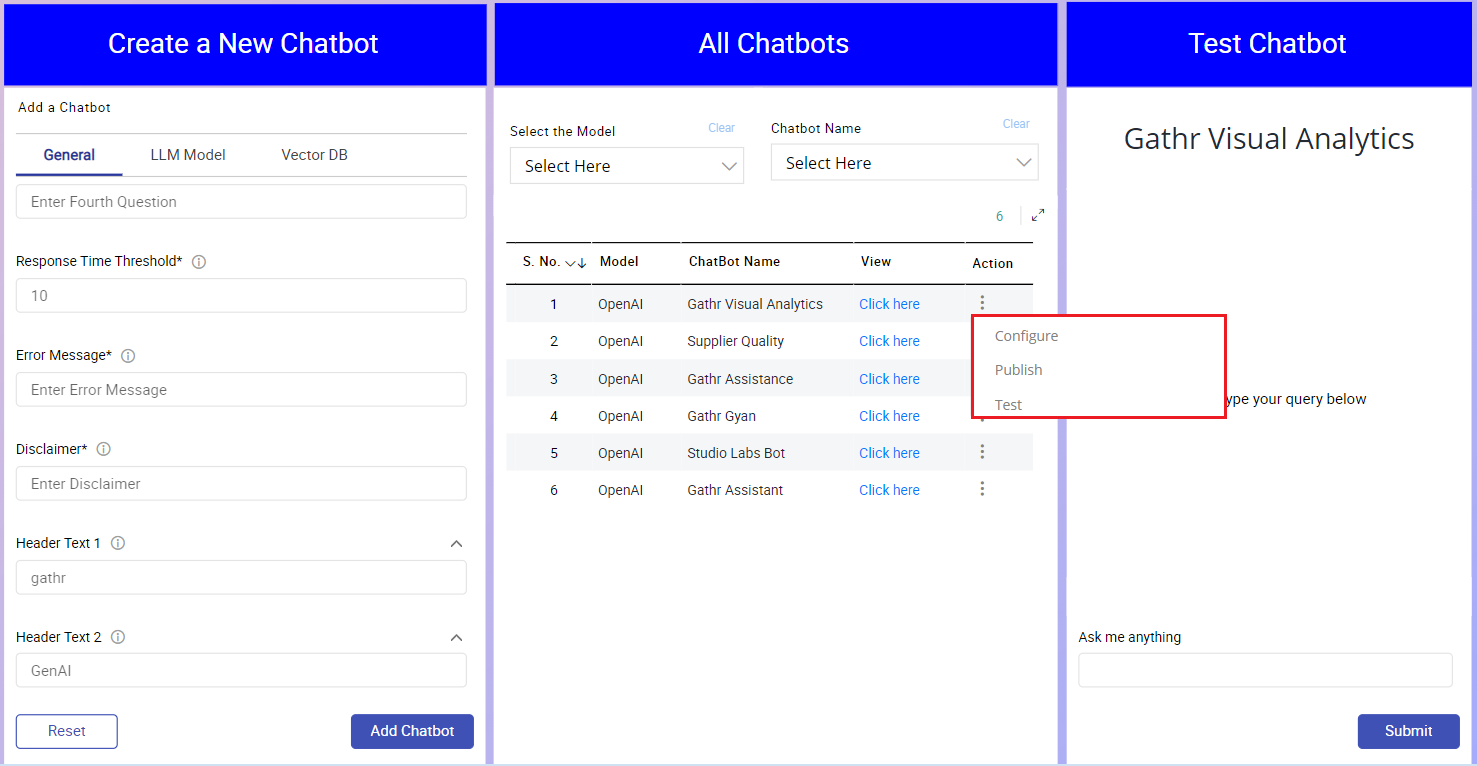

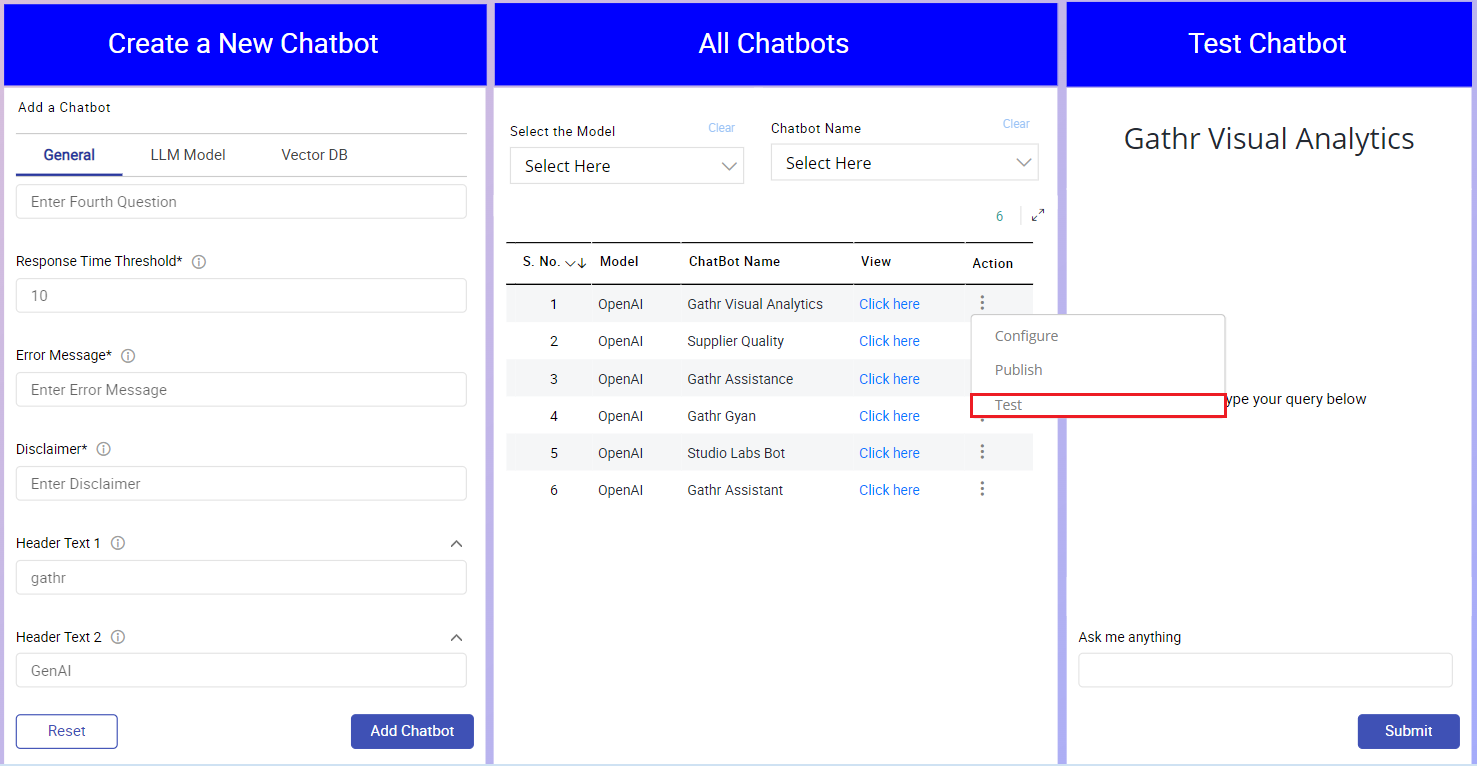

- Action: Contains a menu accessible via the three dots (⋮). Clicking the three dots shows the following options:

- Configure: Opens the configuration settings for the chatbot. Click “Configure” to adjust that chatbot’s settings, such as its name, predefined questions, and model configuration.

- Publish: Publishes the chatbot, making it accessible to users. Click “Publish” to make the chatbot live and available for user interaction.

- Test: Opens the chatbot in the adjacent test screen to evaluate its performance. Click “Test” to load the chatbot into the adjacent “Test Chatbot” section for live testing.

By following these steps and utilizing the slicers and action menu effectively, administrators can efficiently manage and oversee all their chatbots within the “All Chatbots” section.

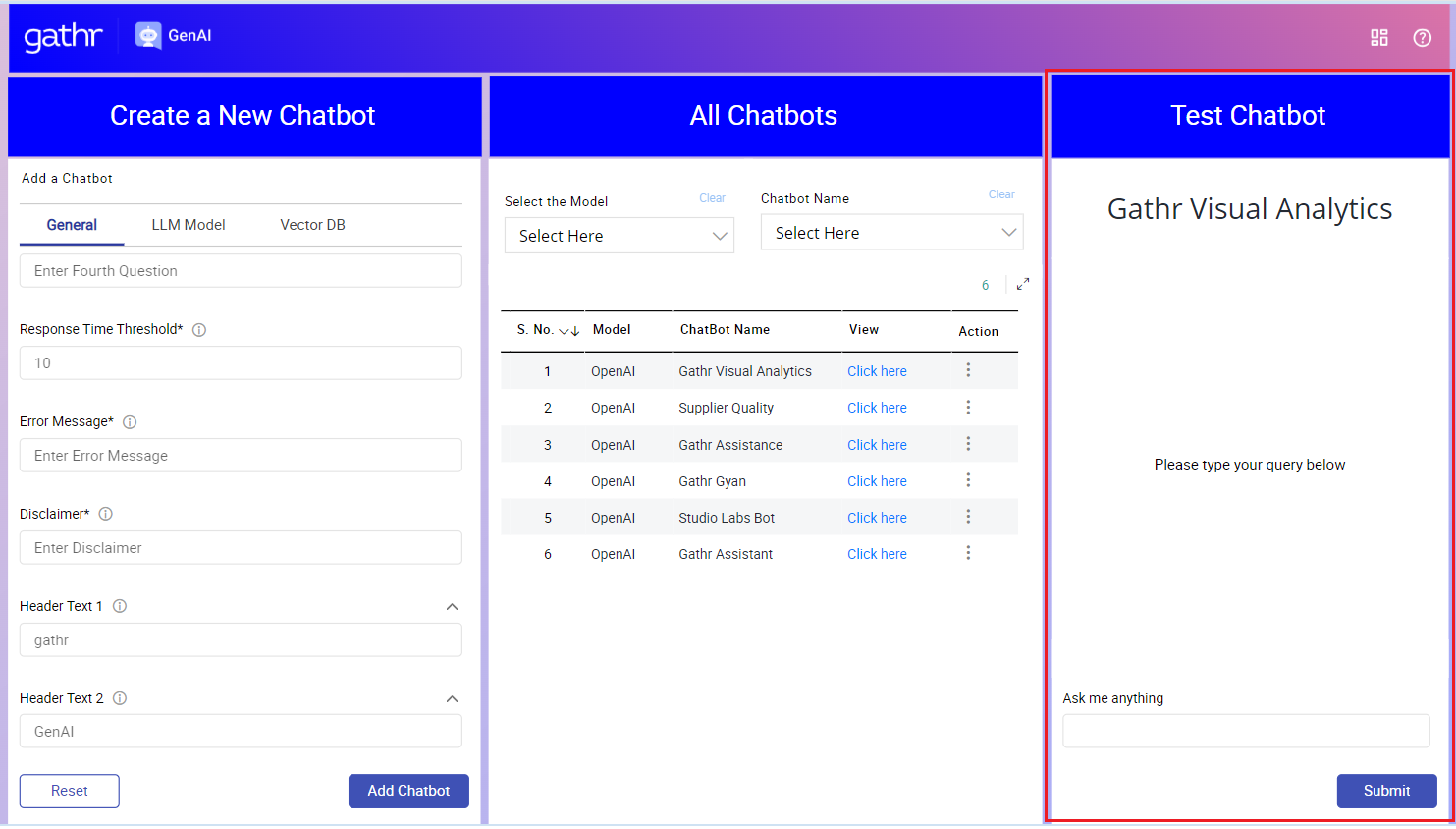

Test Chatbot

The “Test Chatbot” section allows administrators to rigorously test their chatbots before publishing them for user interaction. This guide outlines how to effectively use the “Test Chatbot” section to ensure your chatbot is functioning correctly and meets your quality standards.

Here, you can test for aspects such as hallucinations, correctness, and accuracy.

Steps to Test a Chatbot

Step 1: Accessing the Test Environment: In the “All Chatbots” section, click on the three dots (⋮) under the “Action” column for the chatbot you want to test.

Step 2: Select “Test”: This loads the chatbot into the “Test Chatbot” section. For example, the image shows the Gathr Visual Analytics chatbot loaded in the “Test Chatbot” section.

Step 3: Testing for Hallucinations: Ensure the chatbot is providing accurate and relevant information rather than generating false or misleading responses.

- Input a series of queries that the chatbot might encounter.

- Observe the responses and verify the factual correctness of the information provided.

- Note any instances where the chatbot provides incorrect or invented information.

Step 4: Testing for Correctness: Verify that the chatbot understands and correctly processes user inputs.

- Ask common user questions and review the responses for accuracy.

- Ensure that the chatbot follows the logic and guidelines set in its configuration.

- Check if the chatbot handles variations of the same question consistently and correctly.

Step 5: Testing for Accuracy: Evaluate the chatbot’s ability to provide precise and accurate answers.

- Test the chatbot with questions that require specific answers.

- Assess if the responses are detailed and correctly address the user’s query.

- Make sure the responses are concise and directly related to the questions asked.

Step 6: General Functionality Testing: Test all functionalities, including predefined questions, error handling, and response time.

- Run through all predefined questions to ensure they elicit the correct responses.

- Simulate error conditions to check if the error messages are triggered appropriately.

- Measure the response time to ensure it is within the specified threshold.
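Testing in the “Test Chatbot” section is interactive, but keeping a small written test plan makes Steps 3 to 6 repeatable: each case lists several phrasings of one question, the facts every answer must mention, and the response time threshold. The following Python sketch runs such a plan. It assumes a hypothetical `ask` function that returns the chatbot's answer as text (for example, answers you record from the test screen); the `fake_chatbot` stub stands in for it, answers one phrasing inconsistently, and raises an exception for one query to simulate an error condition. Keyword matching is a crude check that flags answers for human review; it cannot prove an answer is free of hallucinations.

```python
import time
from typing import Callable


def run_test_case(ask: Callable[[str], str], variations: list[str], expected_terms: list[str],
                  threshold_sec: float, error_message: str) -> list[dict]:
    """Ask each phrasing once; record errors, missing terms, and threshold compliance."""
    results = []
    for question in variations:
        start = time.perf_counter()
        try:
            answer, failed = ask(question), False
        except Exception:
            answer, failed = error_message, True  # stand-in for the configured Error Message
        elapsed = time.perf_counter() - start
        results.append({
            "question": question,
            "failed": failed,
            "missing": [t for t in expected_terms if t.lower() not in answer.lower()],
            "within_threshold": elapsed <= threshold_sec,
        })
    return results


def fake_chatbot(question: str) -> str:
    # Hypothetical stand-in for the chatbot under test.
    q = question.lower()
    if "outage" in q:  # trigger for a simulated error condition
        raise ConnectionError("simulated error condition")
    if "vacation" in q:
        return "Employees are entitled to 15 days of paid vacation annually."
    return "Please refer to the employee handbook."


results = run_test_case(
    fake_chatbot,
    ["How many vacation days do I get?",
     "What is my annual paid leave allowance?",
     "Simulate an outage"],
    expected_terms=["15 days"],
    threshold_sec=5,
    error_message="Sorry, something went wrong. Please try again later.",
)
for r in results:
    status = "ERROR" if r["failed"] else ("OK" if not r["missing"] else f"missing {r['missing']}")
    print(f"{r['question']!r}: {status}, within threshold: {r['within_threshold']}")
```

Here the second phrasing is flagged because its answer omits the expected fact, which is exactly the kind of inconsistency Step 4 asks you to catch.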

Post-Testing Actions

Review and Adjust:

- If any issues are identified during testing, return to the “Configure” option in the “All Chatbots” section to make necessary adjustments.

- Retest the chatbot after making changes to ensure all issues have been resolved.

Publish the Chatbot: Once you are satisfied with the chatbot’s performance, accuracy, and reliability, you can proceed to publish it.

- Go back to the “All Chatbots” section.

- Click on the three dots (⋮) under the “Action” column for the chatbot.

- Select “Publish” to make the chatbot live and available for user interaction.

By following these steps, administrators can ensure their chatbots are thoroughly tested and ready for user deployment, providing accurate and reliable support to users.

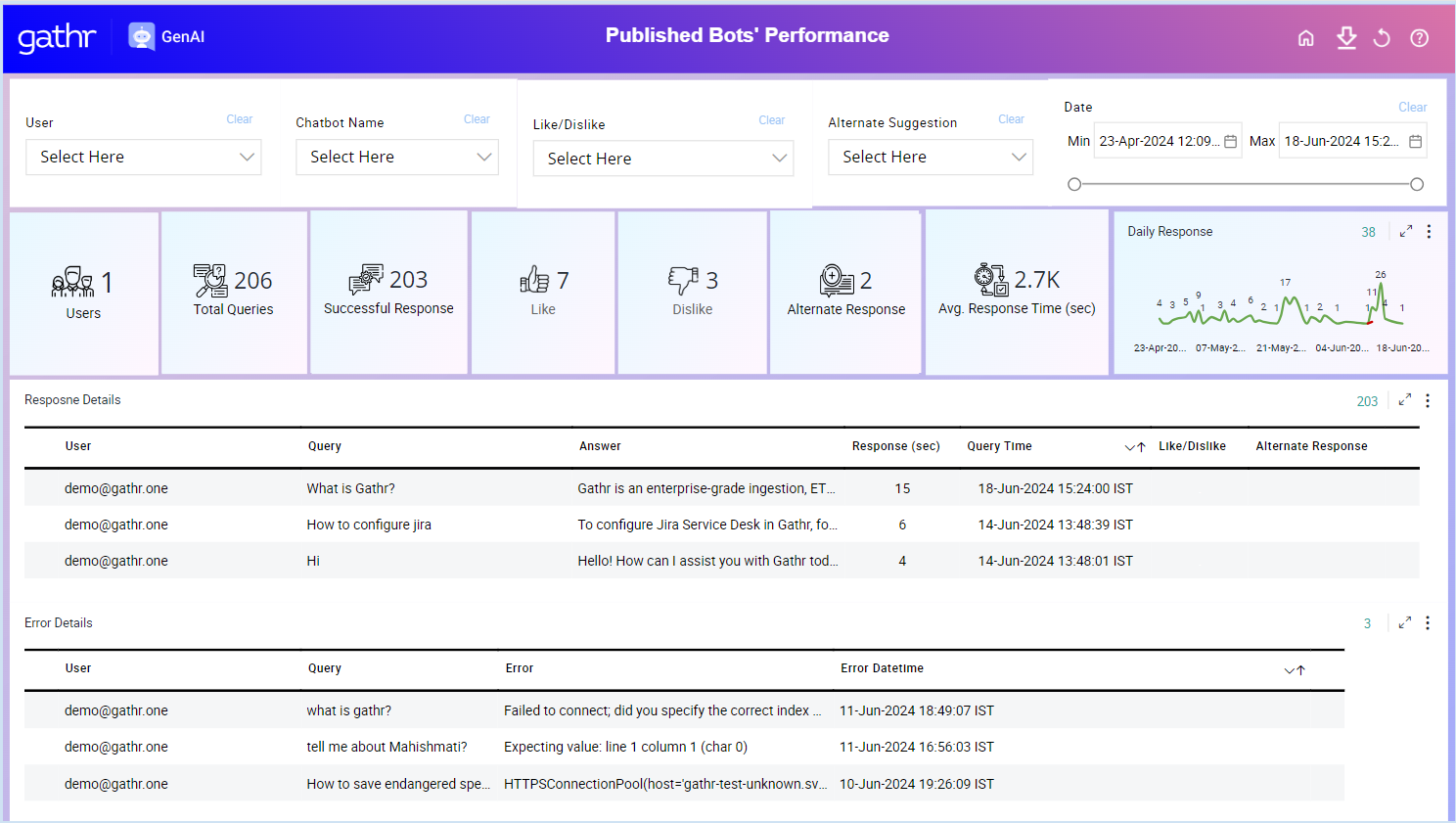

Analytics Dashboard

The AI Chatbot Builder Dashboard provides comprehensive insights into the performance of your published chatbots. This section will help you understand and utilize the dashboard’s features and metrics effectively.

Overview

This dashboard includes five slicers for filtering data and displays key performance metrics of your published chatbots. It also provides detailed response and error information in tabular formats.

Menu Options

Home: Navigate back to the Chatbot Builder interface. Clicking on this option will take you out of the performance dashboard and return you to the main Chatbot Builder page, where you can create, configure, and manage your chatbots.

Export All Responses: Download a comprehensive report of all chatbot responses. This option allows you to download all the responses recorded in the dashboard, including user queries, chatbot answers, response times, and user feedback, in a convenient file format such as CSV or Excel for further analysis and record-keeping.

Refresh Dashboard: Update the dashboard to display the latest data. Clicking this option will refresh the dashboard, ensuring that all metrics, response details, and error information are up-to-date with the latest interactions and performance data of your chatbots.

Help: Access the Help section for guidance and support. This option opens the Help section, which provides additional resources for using the dashboard and troubleshooting any issues you may encounter.
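Once you have downloaded a report with “Export All Responses”, you can analyse it with any tool. The following Python sketch lists the queries that received a dislike, a useful starting point for reviewing weak answers. It assumes a CSV export whose column headers match the Response Details table described in this section (`User`, `Query`, `Like/Dislike`, and so on); check the headers in your actual file, because this guide does not document the export format. The sample rows are made up.

```python
import csv
import io


def disliked_queries(csv_text: str) -> list[tuple[str, str]]:
    """Return (user, query) pairs for rows whose Like/Dislike value is 'Dislike'."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        (row["User"], row["Query"])
        for row in reader
        if (row.get("Like/Dislike") or "").strip().lower() == "dislike"
    ]


# Made-up sample; for a real export, pass in the text of the downloaded file
# (for example, open(path, newline="", encoding="utf-8").read()).
sample = """User,Query,Answer,Response (sec),Query Time,Like/Dislike,Alternate Response
alice,How can I reset my password?,Visit the IT support page.,1.8,2024-05-01 10:02,Like,No
bob,What is your return policy?,Please contact support.,2.4,2024-05-01 11:15,Dislike,Yes
"""
print(disliked_queries(sample))  # [('bob', 'What is your return policy?')]
```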

To view this dashboard, click the dashboard icon in the top-right corner of the Admin panel, as shown in the image below:

Slicers for Filtering Data

- User: Filter the data by specific users.

- Click on the “User” slicer.

- Select one or multiple users from the list.

- Chatbot Name: Filter the data by specific chatbot names.

- Click on the “Chatbot Name” slicer.

- Select one or multiple chatbot names from the list.

- Like/Dislike: Filter the data based on user feedback (likes or dislikes).

- Click on the “Like/Dislike” slicer.

- Select either “Like” or “Dislike” or both.

- Alternate Suggestion (Yes/No): Filter the data based on whether alternate suggestions were provided.

- Click on the “Alternate Suggestion” slicer.

- Select “Yes” or “No”.

- Date (Min and Max): Filter the data by a specific date range.

- Click on the “Date” slicer.

- Set the minimum and maximum dates to define the range.
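Slicers narrow the same set of interactions step by step. The following Python sketch models that behaviour under a common dashboard assumption: values selected within one slicer are combined with OR, active slicers are combined with AND, and the date range includes both endpoints. The `Interaction` record and `apply_slicers` function are hypothetical and only illustrate the filtering logic; they are not part of Gathr.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Interaction:
    user: str
    chatbot: str
    feedback: Optional[str]  # "Like", "Dislike", or None when no feedback was given
    alternate: bool          # True if an alternate suggestion was requested
    day: date


def apply_slicers(rows, users=None, chatbots=None, feedback=None,
                  alternate=None, min_date=None, max_date=None):
    """Keep rows that satisfy every active slicer; None means the slicer is not set."""
    def keep(r: Interaction) -> bool:
        return ((users is None or r.user in users)
                and (chatbots is None or r.chatbot in chatbots)
                and (feedback is None or r.feedback in feedback)
                and (alternate is None or r.alternate == alternate)
                and (min_date is None or r.day >= min_date)
                and (max_date is None or r.day <= max_date))
    return [r for r in rows if keep(r)]


rows = [
    Interaction("alice", "Customer Support Bot", "Like", False, date(2024, 5, 1)),
    Interaction("bob", "Customer Support Bot", "Dislike", True, date(2024, 5, 2)),
    Interaction("alice", "HR Bot", "Dislike", False, date(2024, 5, 3)),
]
early_dislikes = apply_slicers(rows, feedback={"Dislike"},
                               min_date=date(2024, 5, 1), max_date=date(2024, 5, 2))
print([r.user for r in early_dislikes])  # ['bob']
```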

Key Metrics Displayed

- Users: Total number of unique users who interacted with the chatbot.

- Total Queries: Total number of queries received by the chatbot.

- Successful Response: Number of queries that received a successful response.

- Like: Number of likes (positive feedback) received from users.

- Dislike: Number of dislikes (negative feedback) received from users.

- Alternate Response: Number of times alternate suggestions were provided.

- Avg Response Time (sec): Average time taken by the chatbot to respond to queries.

- Daily Response: Number of responses provided by the chatbot on a daily basis.
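The following Python sketch shows one way these metrics could be derived from individual response records, which can help when you reconcile the dashboard with an exported report. It is an assumption-laden illustration, not Gathr's definition of the metrics: the `Response` record is hypothetical, a query counts as successful when it produced an answer, the average response time covers successful queries only, and daily responses are counted by the date of each successful query.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass
class Response:
    user: str
    query: str
    answer: Optional[str]    # None when the query ended in an error
    response_sec: float
    query_time: datetime
    feedback: Optional[str]  # "Like", "Dislike", or None
    alternate: bool          # True if an alternate response was requested


def dashboard_metrics(rows: list[Response]) -> dict:
    """Compute the key metrics under the assumptions stated above."""
    answered = [r for r in rows if r.answer is not None]
    avg = sum(r.response_sec for r in answered) / len(answered) if answered else 0.0
    return {
        "Users": len({r.user for r in rows}),
        "Total Queries": len(rows),
        "Successful Response": len(answered),
        "Like": sum(r.feedback == "Like" for r in rows),
        "Dislike": sum(r.feedback == "Dislike" for r in rows),
        "Alternate Response": sum(r.alternate for r in rows),
        "Avg Response Time (sec)": round(avg, 2),
        "Daily Response": dict(Counter(r.query_time.date() for r in answered)),
    }


rows = [
    Response("alice", "Reset password?", "Visit the IT page.", 2.0, datetime(2024, 5, 1, 10), "Like", False),
    Response("bob", "Return policy?", "See the returns page.", 4.0, datetime(2024, 5, 1, 11), "Dislike", True),
    Response("alice", "Track order?", None, 6.0, datetime(2024, 5, 2, 9), None, False),
]
metrics = dashboard_metrics(rows)
print(metrics["Users"], metrics["Successful Response"], metrics["Avg Response Time (sec)"])  # 2 2 3.0
```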

Response Details

- User: The user who made the query.

- Query: The query submitted by the user.

- Answer: The response given by the chatbot.

- Response (sec): Time taken to respond to the query.

- Query Time: The time when the query was made.

- Like/Dislike: User feedback on the response.

- Alternate Response: Indicates if an alternate response was provided.

Review these details to analyse individual query interactions. Use the slicers to filter the data for more specific insights.

Error Details

- User: The user who encountered the error.

- Query: The query that resulted in an error.

- Error: Description of the error encountered.

- Error datetime: Date and time when the error occurred.

Examine the error details to understand the issues users encountered. Use this information to troubleshoot and improve the chatbot’s performance.

Help Section

The Help section provides comprehensive guidance on configuring your chatbots and effectively using the user dashboard, whether you’re setting up a new chatbot, adjusting existing configurations, or interpreting performance metrics.

To view it, click the “?” icon in the top-right corner, as shown in the figure below.

If you have any feedback on Gathr documentation, please email us!