Sample ETL Source


Schema Type

Max no. of Rows: Limits the number of rows fetched at application design time. This option has no effect when the application runs.

Its default value is 100.

Example: Suppose the Upload File feature is used to provide sample data and the file has 10000 rows. If Max no. of Rows is set to 200, only 200 rows are fetched from the file, selected according to the chosen sampling method, and the schema is defined from those 200 rows.
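The following Python sketch shows why the detected schema depends on the rows fetched at design time. Column types are inferred only from the first `max_rows` data rows, so a value that appears later in the file does not affect the result. This is a hypothetical simplification, not Gathr's schema detection. The helpers `infer_schema` and `infer_type`, the type names, and the serial (Top N style) row selection are all assumptions made for illustration.

```python
import csv
import io


def infer_type(values):
    """Return the narrowest type name that fits every non-empty value (hypothetical rules)."""
    non_empty = [v for v in values if v != ""]
    if not non_empty:
        return "string"
    for name, cast in (("integer", int), ("double", float)):
        try:
            for v in non_empty:
                cast(v)
            return name
        except ValueError:
            continue  # some value does not fit this type; try a wider one
    return "string"


def infer_schema(csv_text, max_rows=100):
    """Infer column types from at most max_rows data rows (100 mirrors the documented default)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    sample = []
    for i, row in enumerate(reader):
        if i >= max_rows:
            break  # rows beyond the limit are never inspected
        sample.append(row)
    # Short rows yield None for missing fields; treat those as empty strings.
    return {col: infer_type([r[col] or "" for r in sample]) for col in reader.fieldnames or []}


# Usage: 300 data rows, with a decimal value placed after the first 200 rows.
lines = ["id,amount"] + [f"{i},{i * 10}" for i in range(1, 301)]
lines[250] = "250,12.5"
data = "\n".join(lines)
print(infer_schema(data, max_rows=200))  # {'id': 'integer', 'amount': 'integer'}
print(infer_schema(data, max_rows=300))  # {'id': 'integer', 'amount': 'double'}
```

When the limit is 200, the `amount` column is detected as an integer because the decimal row is never read. Raising the limit changes the detected type.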

Trigger time for Sample: The minimum time the system waits before it fires a query to fetch data.

Example: If it is set to 15 seconds, the system first waits 15 seconds, then fetches all available rows and creates a dataset from them.
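The wait-then-fetch behaviour can be sketched in Python as follows. The sketch assumes the source is exposed as a callable that returns whatever rows exist at the moment it is called. That callable and the `fetch_sample_after_trigger` name are hypothetical stand-ins for the query the system fires, not part of Gathr.

```python
import time


def fetch_sample_after_trigger(fetch_available_rows, trigger_seconds):
    """Wait trigger_seconds, then return every row available at that moment.

    fetch_available_rows is a hypothetical callable standing in for the
    query the system fires against the source.
    """
    time.sleep(trigger_seconds)  # minimum wait before the query is fired
    return list(fetch_available_rows())  # all rows available now form the dataset


# Usage: simulate a source where one row arrives every 0.01 seconds.
start = time.monotonic()


def rows_arrived_so_far():
    elapsed = time.monotonic() - start
    return range(int(elapsed / 0.01))


sample = fetch_sample_after_trigger(rows_arrived_so_far, trigger_seconds=0.05)
print(len(sample))  # about 5; the exact count depends on timing
```

A longer trigger time gives the source more time to accumulate rows before the single fetch.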

Sampling Method: Choose whether the sample records used to design the application are fetched serially or randomly.

The available methods are:

Top N: Extracts the specified number of records serially from the top of the table, using limit() on the dataset.

Random Sample: Extracts random records by applying a sample transformation, then limits the dataset to the maximum number of rows.
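The two sampling methods can be approximated on an in-memory list, as in the Python sketch below. The sketch is not Gathr's implementation, which works on a Spark dataset. Top N takes the first rows in order, as limit() would. Random Sample keeps each row independently with probability `fraction` and then applies the row limit. The `fraction` and `seed` parameters are assumptions, because this page does not say how the sample fraction is chosen.

```python
import random


def top_n(rows, max_rows):
    """Top N: take the first max_rows records in source order (like limit())."""
    return rows[:max_rows]


def random_sample(rows, max_rows, fraction, seed=None):
    """Random Sample: keep each row with probability `fraction`, then limit.

    `fraction` and `seed` are hypothetical parameters; the per-row coin flip
    is Bernoulli sampling without replacement.
    """
    rng = random.Random(seed)
    sampled = [row for row in rows if rng.random() < fraction]
    return sampled[:max_rows]  # then limit to the maximum number of rows


# Usage: 10000 source rows, Max no. of Rows = 200.
rows = list(range(1, 10001))
print(top_n(rows, 200)[:5])  # [1, 2, 3, 4, 5]
picked = random_sample(rows, 200, fraction=0.1, seed=42)
print(len(picked))  # 200 (about 1000 rows survive sampling before the limit)
```

Top N always returns the same leading rows. Random Sample spreads the picks across the whole source, which can reveal values that the top of the file does not contain.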

Schema Description

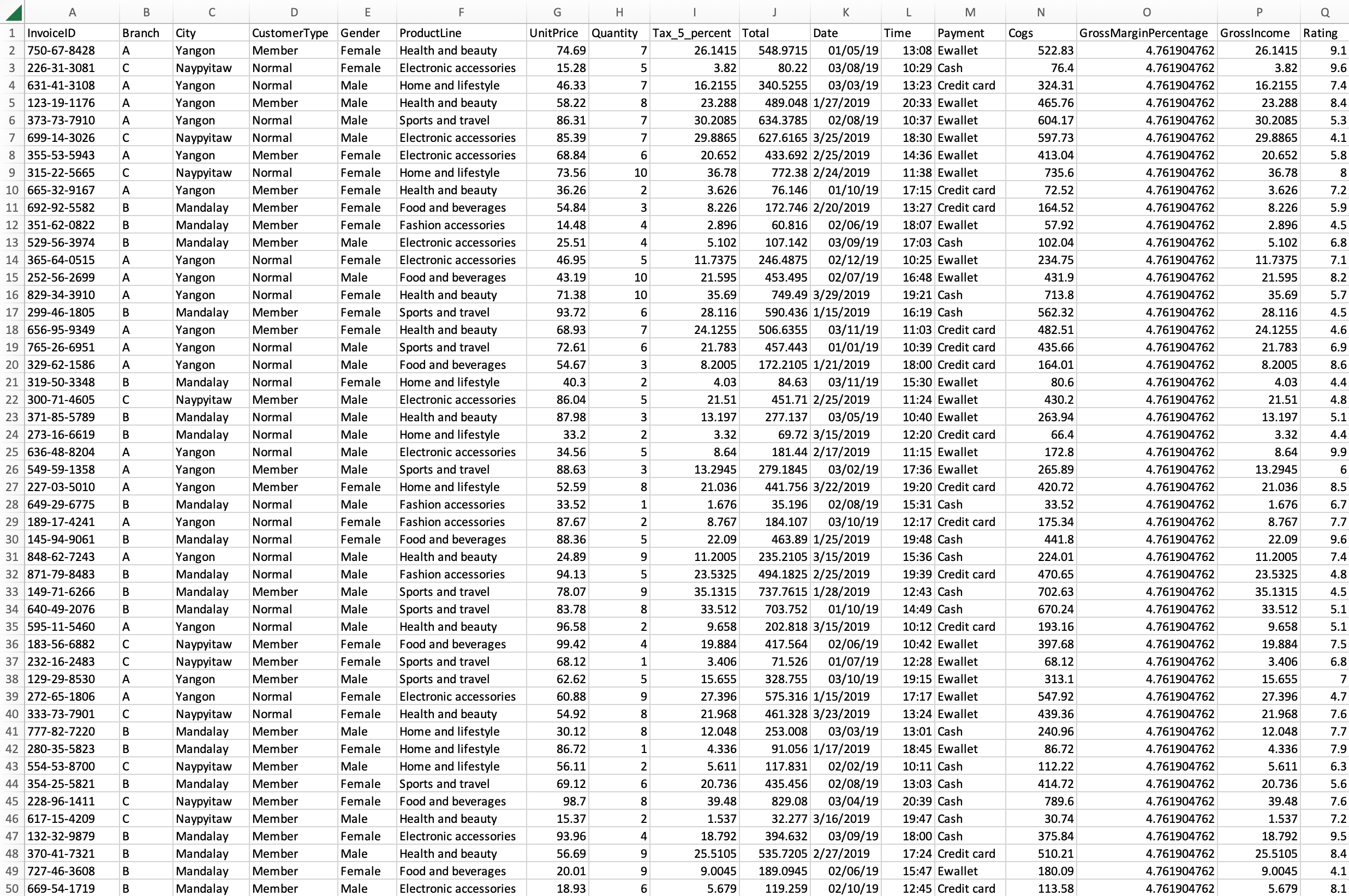

Gathr provides a sample data source containing a range of marketing data. It is in CSV format and has 17 columns and 1000 rows.

You can use this data source to follow the flow of data through a Gathr application, starting at the ingestion stage. You can then experiment with different transformations in the subsequent steps to see how the data can be organized before it is stored in a target.

You can also use Gathr Store as the target, with a very simple target configuration, to complete the end-to-end data flow. For more details, see Gathr Store ETL Target →

Detect Schema

Check the populated schema details. For more details, see Schema Preview →

Pre Action

To learn how to provide SQL queries or stored procedures that are executed during the pipeline run, see Pre-Actions →

Notes

Optionally, enter notes in the Notes → tab and save the configuration.
