EMR Cluster Configuration

Amazon EMR is an analytics service offered by AWS. Gathr provides the interface to create EMR cluster configurations that can be consumed to run applications or data assets on the EMR clusters as per the set configuration.

Prerequisites

The following prerequisites must be fulfilled before creating EMR Cluster configurations via Gathr.

An active AWS account with necessary permissions to create EMR clusters.

A compute environment should be set up in Gathr using the VPC endpoint service.

An EMR cluster should be available in the AWS account that you associated with Gathr during compute environment setup.

(Optional) Make sure that you have an Amazon EC2 key pair that you can use to authenticate to the EMR cluster over SSH.

Create EMR Cluster Configuration

The steps to create an EMR cluster configuration are the same for data ingestion, CDC, ETL applications, and data assets.

The option to add a new EMR cluster configuration can be accessed in the following ways:

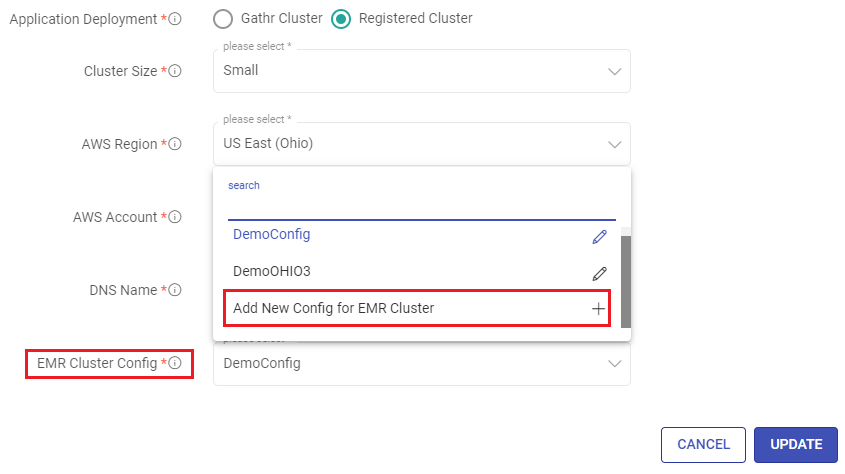

During the design phase of an application or a data asset, the last step is to save the job. The EMR Cluster Config option is available along with other fields when the application/data asset deployment preference is set to registered cluster.

From the applications/data assets listing page, use the Change Cluster option.

Either way takes you to the Add New Config for EMR Cluster page.

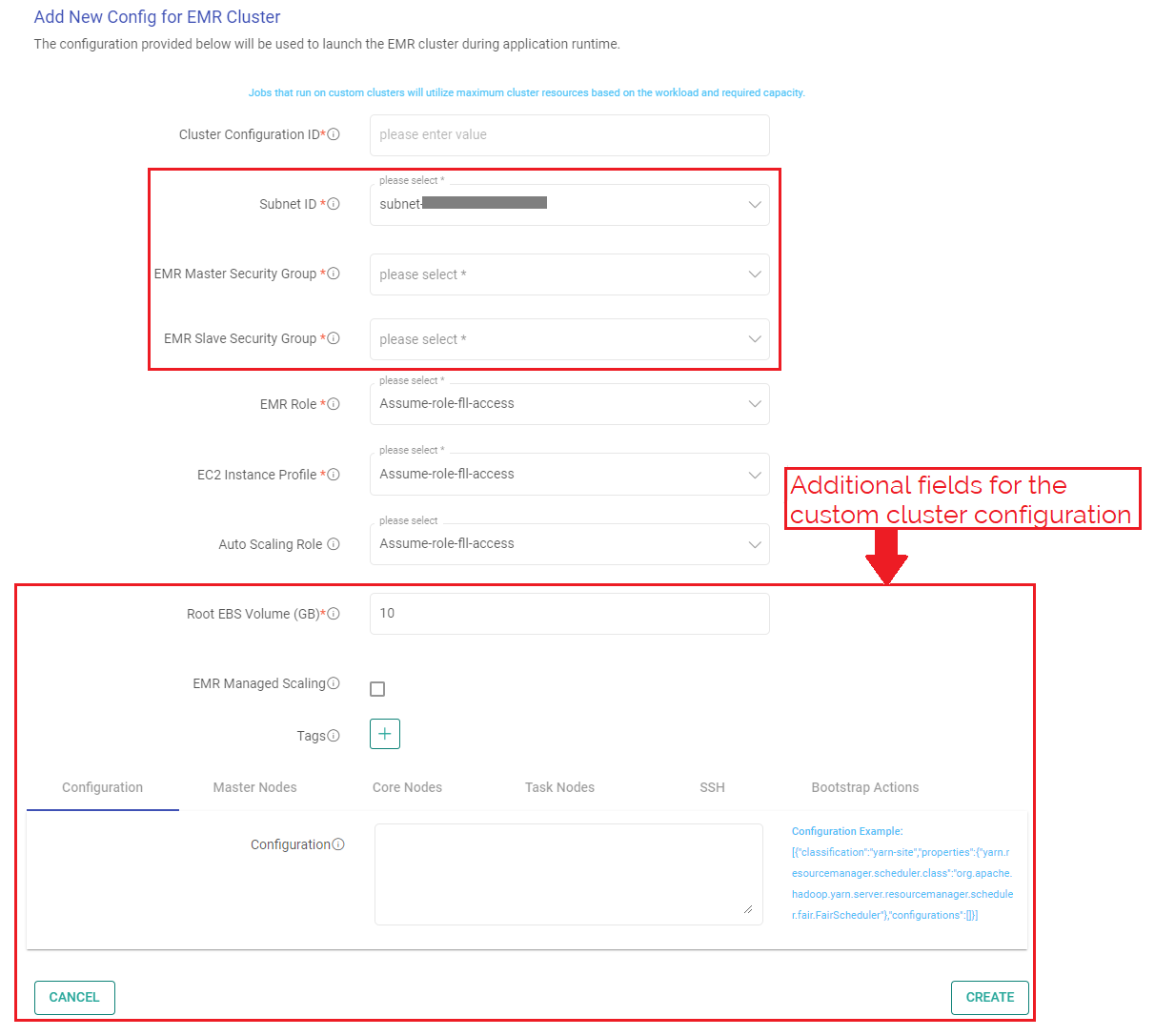

The configuration options for adding a new EMR cluster are explained below:

Cluster Configuration ID: Unique name to identify the EMR cluster configuration should be provided.

Subnet ID: Subnet ID from the drop-down list should be selected.

EMR Master Security Group: Master security group option(s) should be selected for the EMR cluster configuration.

EMR Slave Security Group: Slave security group option(s) should be selected for the EMR cluster configuration.

EMR Role: EMR role to be assigned to the cluster should be selected.

EC2 Instance Profile: EC2 instance profile should be selected from the drop-down.

Auto Scaling Role: Auto scaling role to be assigned to the cluster should be selected.

Tags: Customized tags can be added for the EMR cluster.
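As a rough illustration, the fields above can be pictured as the inputs of an EMR cluster request. The sketch below assumes they map onto parameters shaped like the boto3 `run_job_flow` request (`Ec2SubnetId`, `ServiceRole`, `JobFlowRole`, and so on); how Gathr actually submits the request is not documented here, and `build_cluster_settings` is a hypothetical helper that accepts a single security group per role for simplicity.

```python
def build_cluster_settings(config_id, subnet_id, master_sg, slave_sg,
                           emr_role, instance_profile, auto_scaling_role,
                           tags=None):
    """Collect the form fields into a dict shaped like an EMR request.

    The field-to-parameter mapping is an assumption made for illustration.
    """
    if not config_id.strip():
        raise ValueError("Cluster Configuration ID must not be empty")
    return {
        "Name": config_id,
        "Instances": {
            "Ec2SubnetId": subnet_id,
            "EmrManagedMasterSecurityGroup": master_sg,
            "EmrManagedSlaveSecurityGroup": slave_sg,
        },
        "ServiceRole": emr_role,              # EMR Role
        "JobFlowRole": instance_profile,      # EC2 Instance Profile
        "AutoScalingRole": auto_scaling_role, # Auto Scaling Role
        # Tags are sent as a list of Key/Value pairs.
        "Tags": [{"Key": k, "Value": v} for k, v in (tags or {}).items()],
    }


settings = build_cluster_settings(
    "demo-config", "subnet-0123", "sg-master", "sg-slave",
    "EMR_DefaultRole", "EMR_EC2_DefaultRole", "EMR_AutoScaling_DefaultRole",
    tags={"team": "data"},
)
```

All identifiers in the usage call (subnet, security group, and role names) are placeholders.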

Custom Cluster Specific Configuration

The fields described below are specific to custom cluster creation:

Root EBS Volume (GB): Option to attach additional storage space to the server in the form of virtual hard disks. Storage space required should be specified in GB.

To know more, refer to the link: Specifying Root EBS Volume

EMR Managed Scaling

Option to adjust the number of Amazon EC2 instances available to an EMR cluster.

EMR will automatically increase and decrease the number of instances in core and task nodes based on the workload. Master nodes do not scale.

Minimum Units: Provide the minimum number of core or task units allowed in a cluster. Minimum value is 1.

Maximum Units: Provide the maximum number of core or task units allowed in a cluster. Minimum value is 1.

On-Demand Limit: Provide the maximum allowed core or task units for On-Demand market type in a cluster. If this parameter is not specified, it defaults to maximum units value. Minimum value is 0.

Maximum Core Units: Provide the maximum allowed core nodes in a cluster. If this parameter is not specified, it defaults to maximum units value. Minimum value is 1.

To know more, refer to the link: EMR Managed Scaling
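The defaults and minimum values described above can be sketched as a small helper. This is a minimal illustration, assuming the fields correspond to the `ComputeLimits` structure of an EMR managed scaling policy; `build_managed_scaling_policy` is a hypothetical name, the `Instances` unit type is an assumption, and the check that maximum units is not below minimum units is an added assumption not stated above.

```python
def build_managed_scaling_policy(min_units, max_units,
                                 on_demand_limit=None, max_core_units=None):
    """Apply the documented defaults and minimums, then return the limits."""
    # Both optional limits default to the Maximum Units value.
    if on_demand_limit is None:
        on_demand_limit = max_units
    if max_core_units is None:
        max_core_units = max_units

    if min_units < 1:
        raise ValueError("Minimum Units must be at least 1")
    if max_units < 1:
        raise ValueError("Maximum Units must be at least 1")
    if max_units < min_units:  # assumption: not stated in the field list
        raise ValueError("Maximum Units must not be below Minimum Units")
    if on_demand_limit < 0:
        raise ValueError("On-Demand Limit must be at least 0")
    if max_core_units < 1:
        raise ValueError("Maximum Core Units must be at least 1")

    return {
        "ComputeLimits": {
            "UnitType": "Instances",  # assumption: units counted as instances
            "MinimumCapacityUnits": min_units,
            "MaximumCapacityUnits": max_units,
            "MaximumOnDemandCapacityUnits": on_demand_limit,
            "MaximumCoreCapacityUnits": max_core_units,
        }
    }


policy = build_managed_scaling_policy(min_units=2, max_units=10, on_demand_limit=4)
```

In this usage, Maximum Core Units is left unset and so falls back to the Maximum Units value of 10.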

Configuration Tab

Configuration: You can provide customized configuration to override the default configurations for an application. Configuration provided should be in JSON format and should contain classification, properties, and optional nested configurations.

Configuration Example:

[{"classification":"yarn-site","properties":{"yarn.resourcemanager.scheduler.class":"org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler"},"configurations":[]}]

To know more, refer to the link: Configure Applications
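Before pasting a configuration into the field, it can help to check its shape locally. The sketch below is one possible check, assuming the rules stated above (a JSON array of objects, each with a `classification` string, a `properties` object, and an optional nested `configurations` array); `validate_configurations` is a hypothetical helper and not part of Gathr or AWS.

```python
import json


def _check(configs, path):
    """Recursively verify each configuration object in the list."""
    for i, item in enumerate(configs):
        where = f"{path}[{i}]"
        if not isinstance(item, dict):
            raise ValueError(f"{where}: expected a JSON object")
        if not isinstance(item.get("classification"), str):
            raise ValueError(f"{where}: 'classification' must be a string")
        if not isinstance(item.get("properties"), dict):
            raise ValueError(f"{where}: 'properties' must be an object")
        nested = item.get("configurations", [])  # optional
        if not isinstance(nested, list):
            raise ValueError(f"{where}: 'configurations' must be an array")
        _check(nested, f"{where}.configurations")


def validate_configurations(raw):
    """Parse the JSON text and return the list if its shape is valid."""
    configs = json.loads(raw)
    if not isinstance(configs, list):
        raise ValueError("top level must be a JSON array")
    _check(configs, "configurations")
    return configs


example = (
    '[{"classification":"yarn-site","properties":'
    '{"yarn.resourcemanager.scheduler.class":'
    '"org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler"},'
    '"configurations":[]}]'
)
parsed = validate_configurations(example)
```

The check only covers structure; it does not confirm that a classification or property name is one that EMR recognizes.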

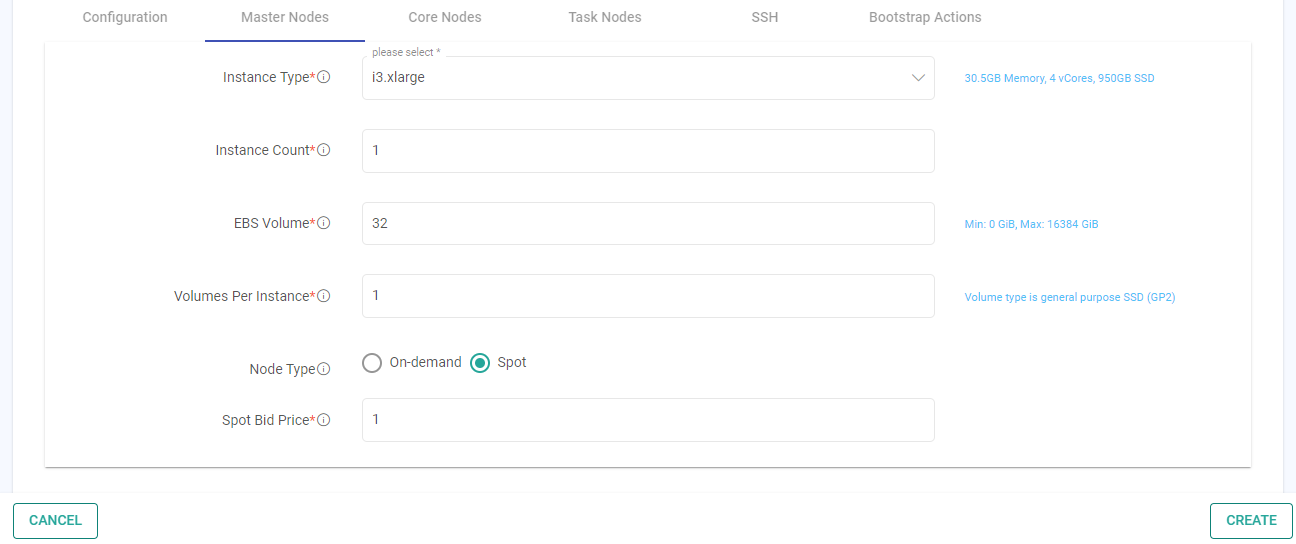

Master Nodes, Core Nodes and Task Nodes

All three tabs have similar fields as described below:

Instance Type: The instance type, which has a predetermined hardware configuration, should be selected for each node type based on your computing load.

To know more, refer to the link: Configure Amazon EC2 Instances

Instance Count: The number of instances that you require for core and task nodes should be set.

EBS Volume: EBS storage required per instance should be specified.

Volumes Per Instance: The block level storage volumes required for use with each EC2 instance should be specified.

Node Type: The purchasing option should be chosen, either On-Demand or Spot.

To know more about when to use On-demand instances, refer to the link: On-Demand Instances

To know more about when to use Spot instances, refer to the link: Spot Instances

Spot Bid Price: Bidding price per instance should be set in dollars ($). The value provided will be used as the maximum price per Spot instance per hour.
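To show how the node tab fields fit together, the following sketch assembles them into a dict shaped like an EMR instance group definition. It is an illustration only: `build_instance_group` is a hypothetical helper, the `gp2` volume type is an assumption (the form does not mention a volume type), and requiring a bid price for Spot nodes reflects the field description above rather than a documented AWS rule.

```python
def build_instance_group(role, instance_type, instance_count,
                         ebs_size_gb, volumes_per_instance,
                         market="ON_DEMAND", spot_bid_price=None):
    """Combine the fields of one node tab into an instance group dict."""
    if role not in ("MASTER", "CORE", "TASK"):
        raise ValueError("role must be MASTER, CORE, or TASK")
    if market not in ("ON_DEMAND", "SPOT"):
        raise ValueError("market must be ON_DEMAND or SPOT")

    group = {
        "Name": f"{role.lower()}-nodes",
        "InstanceRole": role,
        "InstanceType": instance_type,
        "InstanceCount": instance_count,
        "Market": market,
        "EbsConfiguration": {
            "EbsBlockDeviceConfigs": [{
                "VolumeSpecification": {
                    "VolumeType": "gp2",      # assumption, not a form field
                    "SizeInGB": ebs_size_gb,  # EBS Volume
                },
                "VolumesPerInstance": volumes_per_instance,
            }]
        },
    }
    if market == "SPOT":
        if spot_bid_price is None or spot_bid_price <= 0:
            raise ValueError("Spot nodes need a positive bid price in dollars")
        # The bid price is carried as a string, e.g. "0.100".
        group["BidPrice"] = f"{spot_bid_price:.3f}"
    return group


task_group = build_instance_group(
    "TASK", "m5.xlarge", 2, ebs_size_gb=64, volumes_per_instance=1,
    market="SPOT", spot_bid_price=0.10,
)
```

The instance type and sizes in the usage call are placeholder values.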

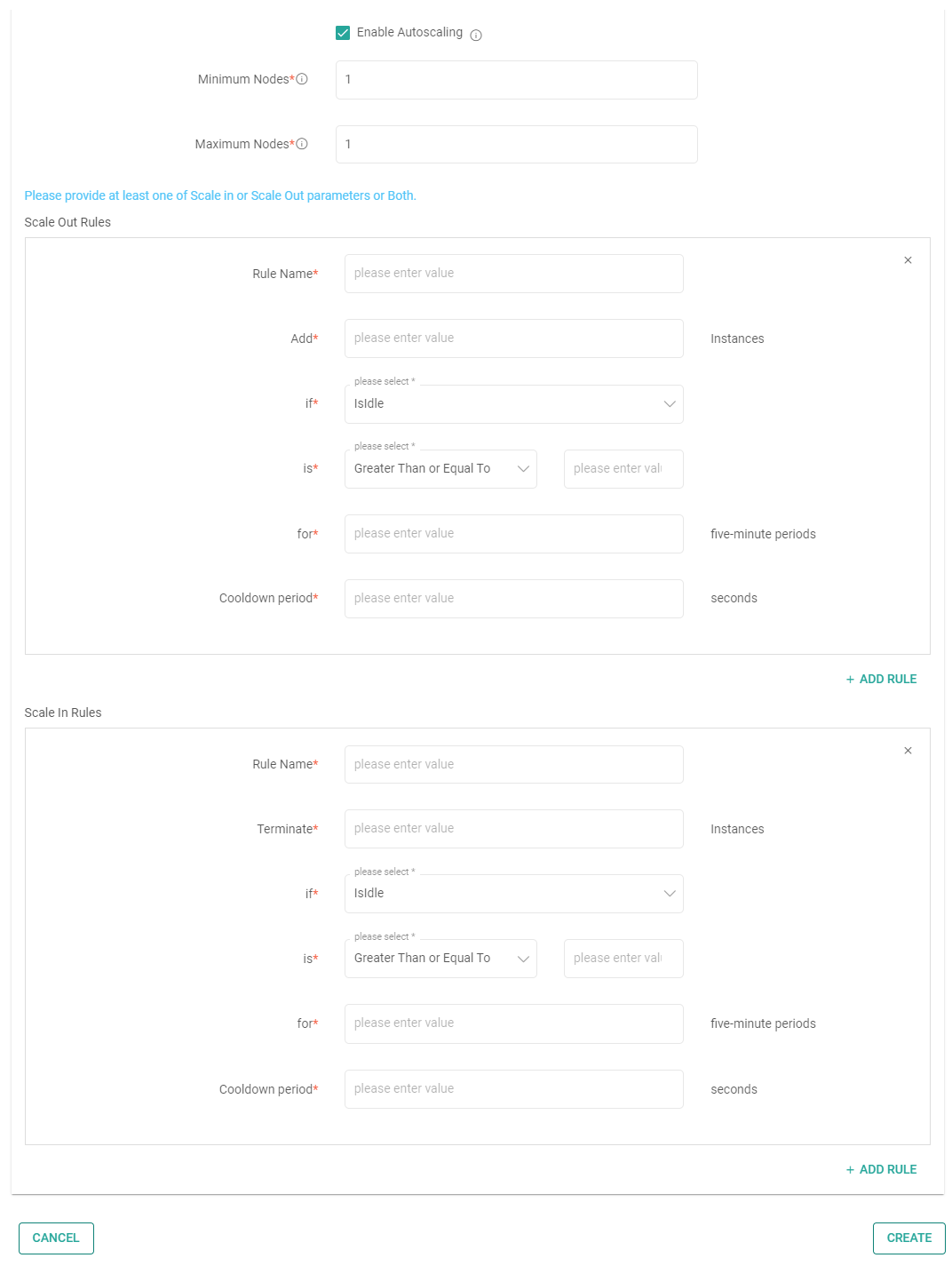

The fields described below are for custom automatic scaling and will be available on the Core Nodes and Task Nodes tabs if you have not opted for EMR managed scaling.

To know more, refer to the link: Using Custom Automatic Scaling

If Autoscaling is enabled on the Core Nodes or Task Nodes tab, additional fields will appear as described below:

Minimum Nodes: Provide the minimum possible number of EC2 instances for your instance group.

Maximum Nodes: Provide the maximum possible number of EC2 instances for your instance group.

Provide at least one Scale Out rule or one Scale In rule; you can also provide both.

Scale Out Rules

Rule Name: A name for the scale out rule should be provided.

Add: Provide the number of EC2 instances to be added each time the autoscaling rule is triggered.

if: Choose the AWS CloudWatch metric that should be used to trigger autoscaling.

is: Enter the threshold value and condition for the CloudWatch metric selected above.

for: Enter the number of consecutive five-minute periods over which the metric data will be compared to the threshold. Autoscaling will be triggered if the condition is met for each consecutive period.

Cooldown period: The time to wait after a scaling activity completes before the next scaling activity can start.

ADD RULE: Click to add additional scale out rules.

Scale In Rules

Rule Name: A name for the scale in rule should be provided.

Terminate: Provide the number of EC2 instances to be terminated each time the autoscaling rule is triggered.

if: Choose the AWS CloudWatch metric that should be used to trigger autoscaling.

is: Enter the threshold value and condition for the CloudWatch metric selected above.

for: Enter the number of consecutive five-minute periods over which the metric data will be compared to the threshold. Autoscaling will be triggered if the condition is met for each consecutive period.

Cooldown period: The time to wait after a scaling activity completes before the next scaling activity can start.

ADD RULE: Click to add additional scale in rules.
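The scale out and scale in fields above can be read as one rule each: a count to add or terminate, a CloudWatch metric with a threshold condition, a number of five-minute periods, and a cooldown. The sketch below assumes these map onto the structure of an EMR automatic scaling policy; the helper names and the rule dictionary keys (`name`, `count`, `metric`, and so on) are hypothetical, and the cooldown is assumed to be in seconds.

```python
def _rule(spec, sign):
    """Build one scaling rule; sign is +1 for Add and -1 for Terminate."""
    return {
        "Name": spec["name"],
        "Action": {
            "SimpleScalingPolicyConfiguration": {
                "AdjustmentType": "CHANGE_IN_CAPACITY",
                "ScalingAdjustment": sign * spec["count"],
                "CoolDown": spec["cooldown_seconds"],  # assumed seconds
            }
        },
        "Trigger": {
            "CloudWatchAlarmDefinition": {
                "Namespace": "AWS/ElasticMapReduce",
                "MetricName": spec["metric"],            # "if"
                "ComparisonOperator": spec["comparison"],  # "is" condition
                "Threshold": spec["threshold"],          # "is" value
                "EvaluationPeriods": spec["periods"],    # "for"
                "Period": 300,                           # five-minute periods
                "Statistic": "AVERAGE",
            }
        },
    }


def build_auto_scaling_policy(min_nodes, max_nodes, scale_out=(), scale_in=()):
    """Combine node limits and rules; at least one rule is required."""
    if not scale_out and not scale_in:
        raise ValueError("provide at least one scale out or scale in rule")
    if min_nodes > max_nodes:
        raise ValueError("Minimum Nodes must not exceed Maximum Nodes")
    rules = [_rule(s, +1) for s in scale_out] + [_rule(s, -1) for s in scale_in]
    return {
        "Constraints": {"MinCapacity": min_nodes, "MaxCapacity": max_nodes},
        "Rules": rules,
    }


add_two_on_low_memory = {
    "name": "scale-out-on-low-memory", "count": 2,
    "metric": "YARNMemoryAvailablePercentage",
    "comparison": "LESS_THAN", "threshold": 15.0,
    "periods": 1, "cooldown_seconds": 300,
}
policy = build_auto_scaling_policy(1, 5, scale_out=[add_two_on_low_memory])
```

A scale in rule uses the same shape; the sketch simply negates the count so that instances are removed rather than added.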

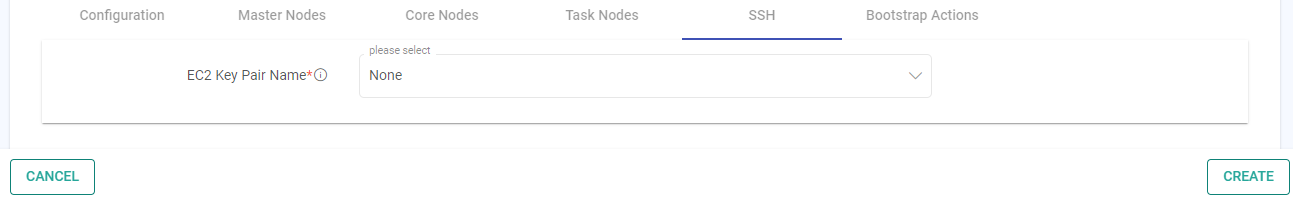

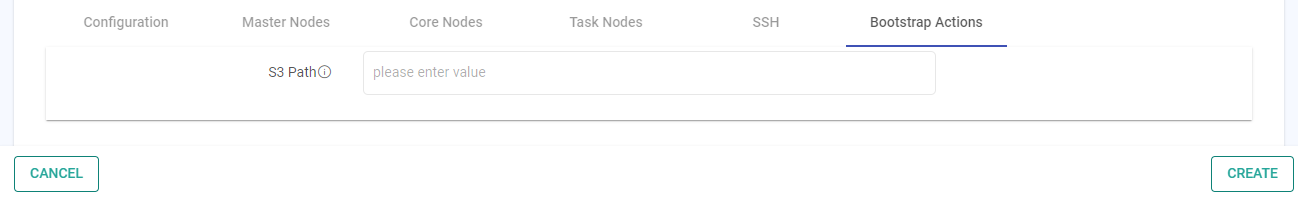

SSH Tab

EC2 Key Pair Name: This option enables users to access the EMR cluster securely using an EC2 Key Pair.

Bootstrap Actions Tab

S3 Path: The path of the script file that should run while the cluster is bootstrapping should be provided.

Example: s3://GathrSaaS/bootstrapscripts/xyz.sh
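A quick local check of the S3 path can catch typos before the cluster is created. The sketch below is one way to do that, assuming the path must have the `s3://bucket/key` form shown in the example; `build_bootstrap_action` is a hypothetical helper, and the returned dict is shaped like an EMR bootstrap action entry as an assumption about how the value is used.

```python
from urllib.parse import urlparse


def build_bootstrap_action(name, s3_path, args=None):
    """Validate the script location and wrap it as a bootstrap action."""
    parsed = urlparse(s3_path)
    # Require the s3 scheme, a bucket name, and a non-empty object key.
    if parsed.scheme != "s3" or not parsed.netloc or not parsed.path.strip("/"):
        raise ValueError(f"not a valid S3 script path: {s3_path!r}")
    return {
        "Name": name,
        "ScriptBootstrapAction": {
            "Path": s3_path,
            "Args": list(args or []),
        },
    }


action = build_bootstrap_action(
    "install-deps", "s3://GathrSaaS/bootstrapscripts/xyz.sh"
)
```

The check is purely syntactic; it does not confirm that the bucket or script exists or that the cluster roles can read it.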

Once all the required fields are set, continue to create the EMR cluster.

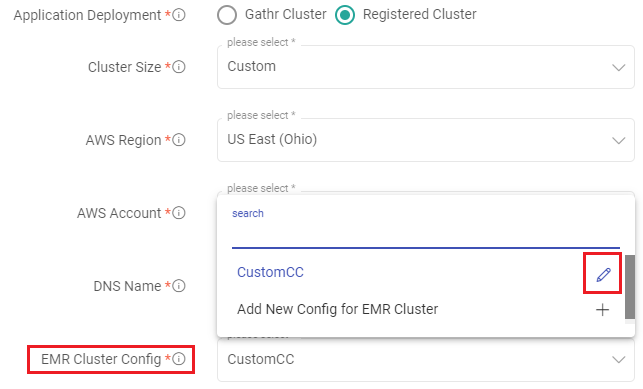

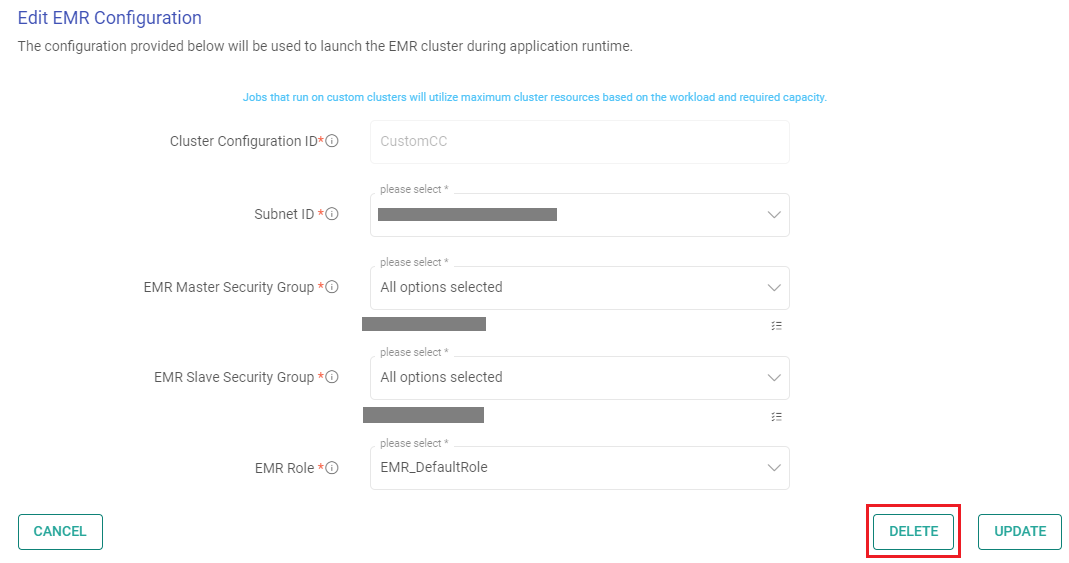

Edit EMR Cluster Configuration

The cluster configurations that you have saved in Gathr will appear in the drop-down list for the EMR cluster config field.

Click the edit icon to open the Edit EMR Configuration page, make the necessary changes, and update the EMR cluster configuration.

Delete EMR Cluster Configuration

The cluster configurations that you have saved in Gathr will appear in the drop-down list for the EMR cluster config field.

Click the edit icon to open the Edit EMR Configuration page.

The option to delete the cluster configuration will be available at the bottom of the page.
