Create a Workflow

To create a workflow, do as follows:

Click the Create Application button.

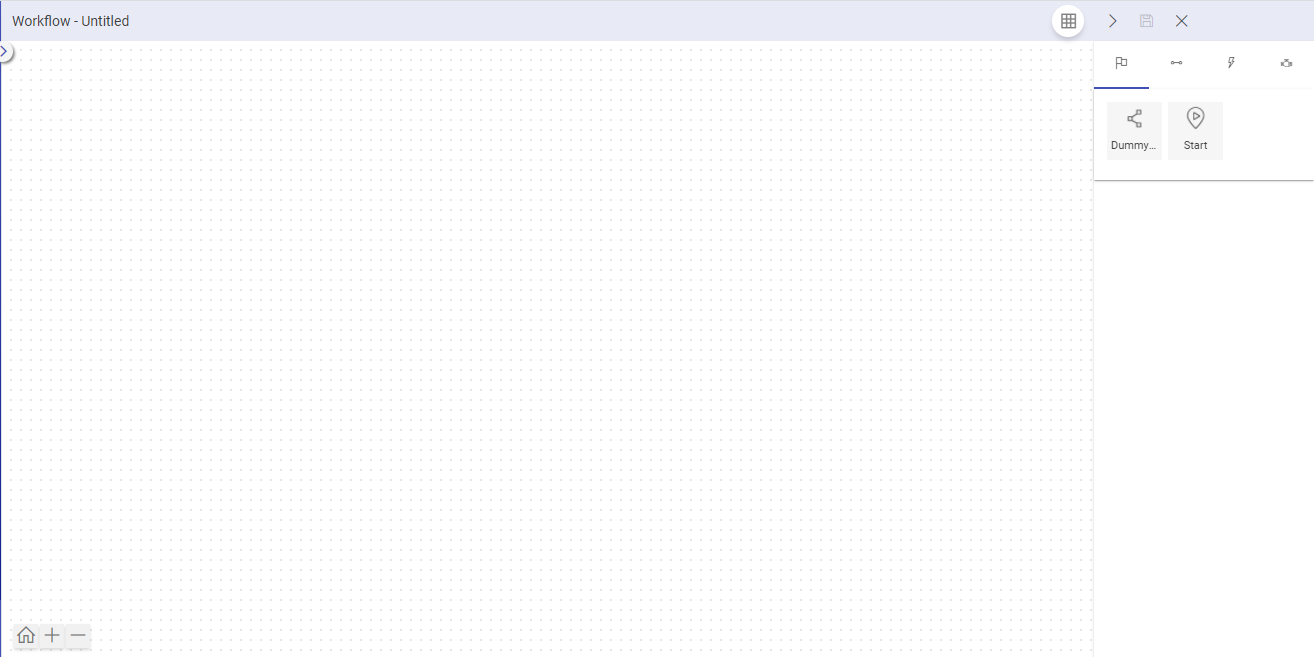

You are redirected to the Workflow design canvas.

The canvas provides the nodes used to create and execute a workflow. They are explained below:

Nodes

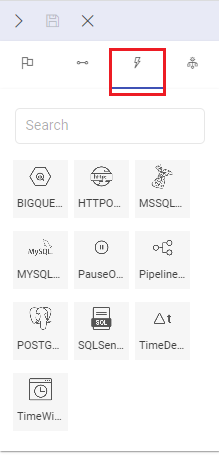

To define a workflow, four nodes are available:

Control Nodes

Pipelines

Actions

Workflow

Add a Control node with one or more pipelines, and apply actions to them as required. Save your workflow. Once workflows are saved, you can also concatenate them from the Workflow tab.

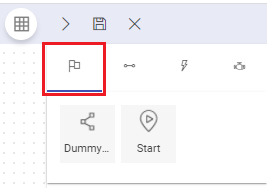

Control Nodes

Two types of control nodes are available:

Dummy: This node controls the flow of the workflow based on a trigger condition, or groups tasks defined in a workflow.

Start: This node is mandatory. It represents the logical start of a workflow, and a workflow can contain only one Start node.

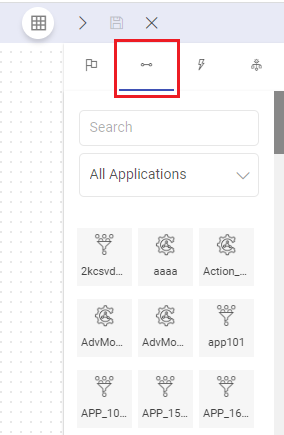
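As an illustration of the Start node rule above, the following Python sketch checks a list of node types before a workflow is saved. The `validate_start_node` function and the lowercase type names are hypothetical and are not part of Gathr; they only model the rule that exactly one Start node is required.

```python
from collections import Counter


def validate_start_node(node_types):
    """Return a list of problems with the Start node rule (empty when valid).

    node_types is a hypothetical list of lowercase type names, one per node.
    """
    start_count = Counter(node_types)["start"]
    if start_count == 0:
        return ["a workflow must contain a Start node"]
    if start_count > 1:
        return [f"only one Start node is allowed, found {start_count}"]
    return []


print(validate_start_node(["start", "dummy", "pipeline"]))  # valid workflow
print(validate_start_node(["dummy", "pipeline"]))  # Start node is missing
```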

Application Nodes

All the Ingestion and ETL applications that you have created are listed here.

Action Nodes

Action nodes add the functionality of the following actions to a workflow.

Prerequisites

For the database operators to run successfully in Gathr:

Gathr IP format:

ec2-3-13-24-114.<aws-region>.compute.amazonaws.com

BIGQUERY Operator

This operator is used to execute stored procedures (SPs) in a BigQuery database.

Following are the properties under the configuration:

Connection ID: Create a new BigQuery connection, or select from the drop-down a connection ID that is defined in Airflow for the required BigQuery database.

To learn how to create a BigQuery connection, see BigQuery Connection.

Query: Provide the SQL queries used to perform operations.

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).
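The Retries property appears on every operator. As a rough sketch of the idea, the Python function below calls a task once and then retries it when it fails. It assumes that Retries counts the additional attempts made after the first failure, which is how Airflow treats its `retries` setting; `run_with_retries` itself is a hypothetical helper, not a Gathr API.

```python
def run_with_retries(task, retries):
    """Call task() once, then up to `retries` more times if it raises.

    Returns the task result together with the number of attempts used.
    """
    attempts = 0
    while True:
        attempts += 1
        try:
            return task(), attempts
        except Exception:
            # Give up once the first attempt plus all retries are used.
            if attempts > retries:
                raise


outcomes = iter([RuntimeError("boom"), "ok"])


def flaky_task():
    """Hypothetical task that fails once and then succeeds."""
    outcome = next(outcomes)
    if isinstance(outcome, Exception):
        raise outcome
    return outcome


print(run_with_retries(flaky_task, retries=2))
```

With Retries set to 2 under this assumption, a task is attempted at most three times before the failure is reported.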

dbtCore Operator

Git Connection: Select the Git connection.

Note: A Git connection can be created from the Connections page in Gathr’s main menu.

Git Branch: Select the branch of the dbt project.

Warehouse: Select the warehouse or database where the models will be executed.

Postgres

BigQuery

Databricks

Redshift

Snowflake

Connection: Select the warehouse connection if one has already been created. Otherwise, create a connection from the Connections page in Gathr’s main menu.

Profile Configuration: The profile configuration is auto-populated with connection details and default values. Provide any optional profile parameters required for the model execution.

Profile Name: Specify the name of the profile to be executed.

Note: The Profile Name in the project’s dbt_project.yml file must match the Profile Name specified here.

Target: Specify the target of the project.

DBT Commands: Select or add the dbt commands to be executed. Optional command arguments can also be added, for example: --debug, --warn-error, --fail-fast. The commands are executed sequentially.

Environment Variables: Specify the environment variable key (name) and its corresponding value.

Dependencies: Enter the list of Python dependencies required for your DBT Core project.

These libraries will be installed automatically.

Example:

urllib3==1.26.16

numpy

pandas==2.1.0
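The dependency entries follow the requirement format used by pip. The Python sketch below checks a line against a simplified version of that format before it is entered. The `parse_requirement` helper and its regular expression are assumptions made for illustration; the full format (PEP 508) also allows extras and environment markers, which this sketch does not handle.

```python
import re

# Simplified pattern: a package name, optionally followed by one
# comparison operator and a version. A single "=" is not an operator.
_REQUIREMENT = re.compile(
    r"^(?P<name>[A-Za-z0-9][A-Za-z0-9._-]*)"
    r"(?:\s*(?P<op>===|==|~=|!=|>=|<=|>|<)\s*(?P<version>[A-Za-z0-9.*+!_-]+))?$"
)


def parse_requirement(line):
    """Split a requirement line into (name, operator, version).

    operator and version are None when only a package name is given.
    Raises ValueError when the line does not fit the simplified pattern.
    """
    match = _REQUIREMENT.match(line.strip())
    if match is None:
        raise ValueError(f"not a recognised requirement: {line!r}")
    return match.group("name"), match.group("op"), match.group("version")


print(parse_requirement("urllib3==1.26.16"))
print(parse_requirement("numpy"))
```

A pinned version needs the double equals sign; an entry written with a single `=` is rejected by this check.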

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).
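Because the DBT Commands are executed sequentially, the entries can be thought of as an ordered list of command lines. The following Python sketch turns such entries into argument lists without running them. The `build_dbt_invocations` helper is hypothetical, and it does not describe how Gathr invokes dbt internally.

```python
import shlex


def build_dbt_invocations(commands):
    """Convert entries such as 'run --fail-fast' into argument lists, in order."""
    return [["dbt", *shlex.split(command)] for command in commands]


# Each entry is a dbt command followed by its optional arguments.
for argv in build_dbt_invocations(["deps", "run --fail-fast", "test"]):
    print(argv)
```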

HTTP Operator

The HTTP Operator in Gathr Workflows communicates with an HTTP system by sending requests to specific endpoints.

Use this operator to trigger actions on that system from within a workflow.

Configuration Properties:

Connection ID: Select from the drop-down a connection ID that is defined in Airflow for the required HTTP system.

Connections are the service identifiers. A connection ID can be selected from the list if you have already created and saved connection details for the HTTP operator. Otherwise, create one as explained in the topic HTTP Operator Connection ID.

End Point: Specify the endpoint on the HTTP system to trigger the desired action.

Request Data: For POST or PUT requests, provide data in JSON format. For GET requests, use a dictionary of key/value string pairs.

GET Example:

https://10.xx.xx.238:xxx/Gathr/datafabric/func/list?projectName=XXX_1000068&projectVersion=1&projectDisplayName=xxxx&pageView=pipeline

POST Example:

{"connectionName": "JDBC_MYSQL_AUTO", "connectionId": "xxxxx", "componentType": "jdbc", "tenantId": "xxx", "connectionJson": {"databaseType": "mysql", "driver": "", "connectionURL": "", "driverType": "", "databaseName": "xxx", "host": "xxx", "port": "xxx", "username": "xxxx", "password": "xxxxx"}}

Header: Include HTTP headers in JSON format to be added to the request.

Retries: Define the number of attempts the workflow should make to run the task in case of failure.

Trigger Rule: Set a rule to determine the trigger condition for this node.

SLA: Optionally provide SLA-related details if required for task execution.

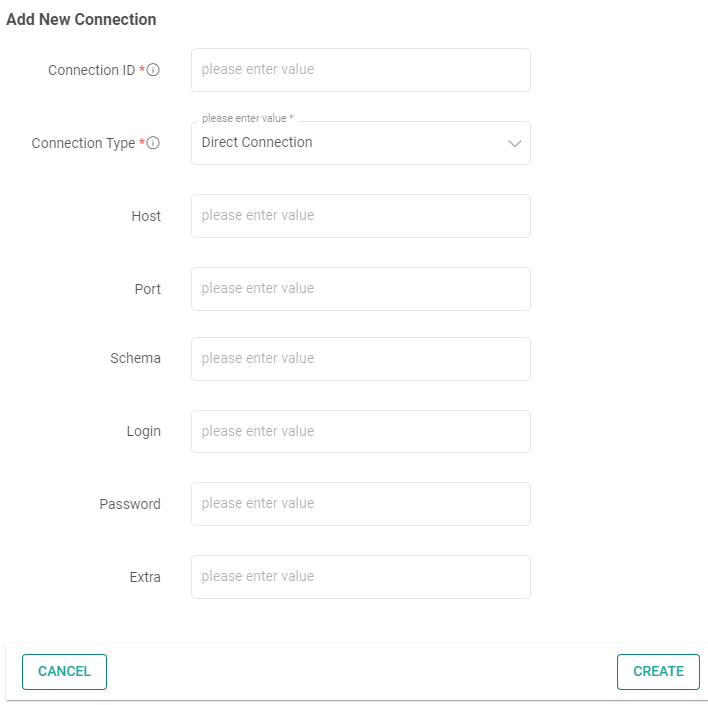
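To make the difference between the two kinds of Request Data concrete, the Python sketch below builds a GET URL from key/value string pairs and a POST body from a JSON object. The host `gathr.example.com`, the helper names, and the header values are placeholders chosen for illustration; only the parameter names are taken from the examples above.

```python
import json
from urllib.parse import urlencode


def build_get_url(base_url, endpoint, params):
    """Append key/value string pairs to the endpoint as a query string."""
    return f"{base_url.rstrip('/')}/{endpoint.lstrip('/')}?{urlencode(params)}"


def build_post_body(payload):
    """Serialise a JSON-compatible object into the bytes sent with POST or PUT."""
    return json.dumps(payload).encode("utf-8")


# Hypothetical host; the query parameters mirror the GET example.
url = build_get_url(
    "https://gathr.example.com",
    "/Gathr/datafabric/func/list",
    {"projectVersion": "1", "pageView": "pipeline"},
)
body = build_post_body({"componentType": "jdbc", "tenantId": "xxx"})
headers = {"Content-Type": "application/json"}  # headers are also given as JSON

print(url)
print(body)
print(json.dumps(headers))
```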

HTTP Operator Connection ID

Connection ID: Assign a unique and identifiable name for the connection ID.

Connection Type: Select either “Direct” for a direct connection or “SSH” for a secure connection via SSH.

SSH Key: If using SSH, provide the SSH key for authentication.

SSH Host: Specify the destination host address when using SSH for connection.

SSH User: Input the SSH username for authentication.

Host: Enter the host address for a direct connection.

Port: Define the port number for the connection.

Schema: Provide the schema information if applicable.

Login: Input the login or username for authentication.

Password: Enter the password for authentication.

Extra: Additional parameters or information relevant to the connection.

MSSQL Operator

This operator is used to execute SQL statements on a Microsoft SQL database.

Connection ID: Create a new JDBC connection, or select from the drop-down a connection ID that is defined in Airflow for the required MSSQL database.

To learn how to create a JDBC connection, see JDBC Connection.

Query: SQL queries used to perform operations.

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).

MySQL Operator

This operator is used to execute SQL statements on a MySQL database.

Connection ID: Create a new JDBC connection, or select from the drop-down a connection ID that is defined in Airflow for the required MySQL database.

To learn how to create a JDBC connection, see JDBC Connection.

If the MySQL database is SSL-enabled, you can enable the SSL option in Gathr.

This option determines whether, and with what priority, a secure SSL TCP/IP connection is negotiated with the server. There are five modes:

PREFERRED: First try an SSL connection; if that fails, try a non-SSL connection.

DISABLED: Only try a non-SSL connection.

REQUIRED: Only try an SSL connection. If a root CA file is present, verify the certificate in the same way as if VERIFY_CA was specified.

VERIFY_CA: Only try an SSL connection, and verify that the server certificate is issued by a trusted certificate authority (CA).

VERIFY_IDENTITY: Only try an SSL connection, verify that the server certificate is issued by a trusted CA and that the requested server host name matches that in the certificate.
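The five modes can be summarised as a small lookup table. The Python sketch below transcribes the descriptions above into flags and uses them to list the modes that never fall back to a non-SSL connection. The `SslBehaviour` type and the helper function are hypothetical names introduced for this illustration.

```python
from typing import NamedTuple


class SslBehaviour(NamedTuple):
    tries_ssl: bool
    allows_plain: bool
    verifies_ca: bool
    verifies_hostname: bool


# One row per mode, transcribed from the descriptions above.
MYSQL_SSL_MODES = {
    "PREFERRED": SslBehaviour(True, True, False, False),
    "DISABLED": SslBehaviour(False, True, False, False),
    # REQUIRED verifies the CA only when a root CA file is present,
    # so it is recorded here as not guaranteeing verification.
    "REQUIRED": SslBehaviour(True, False, False, False),
    "VERIFY_CA": SslBehaviour(True, False, True, False),
    "VERIFY_IDENTITY": SslBehaviour(True, False, True, True),
}


def modes_that_guarantee_encryption():
    """Return the modes that never fall back to a non-SSL connection."""
    return [
        name
        for name, mode in MYSQL_SSL_MODES.items()
        if mode.tries_ssl and not mode.allows_plain
    ]


print(modes_that_guarantee_encryption())
```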

Continue with the operator’s configuration:

Query: SQL queries used to perform operations.

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).

Pause Operator

The Pause Operator is used to pause the current workflow.

Following are the properties under the configuration:

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).

Pipeline Operator

The Pipeline Operator is used to run a selected pipeline. You can select the pipelines that need to run. This operator functions in the same way as a Pipeline node.

Set the following configurations for this operator:

Pipeline To Run: Select the pipeline that the workflow should run.

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).

POSTGRES Operator

This operator is used to execute SQL statements on a PostgreSQL database.

Connection ID: Create a new JDBC connection, or select from the drop-down a connection ID that is defined in Airflow for the required Postgres database.

To learn how to create a JDBC connection, see JDBC Connection.

If the Postgres database is SSL-enabled, you can enable the SSL option in Gathr.

This option determines whether, and with what priority, a secure SSL TCP/IP connection is negotiated with the server. There are six modes:

allow: First try a non-SSL connection; if that fails, try an SSL connection.

prefer: First try an SSL connection; if that fails, try a non-SSL connection.

disable: Only try a non-SSL connection.

require: Only try an SSL connection. If a root CA file is present, verify the certificate in the same way as if verify-ca was specified.

verify-ca: Only try an SSL connection, and verify that the server certificate is issued by a trusted certificate authority (CA).

verify-full: Only try an SSL connection, verify that the server certificate is issued by a trusted CA and that the requested server host name matches that in the certificate.
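These six values are the ones accepted by the `sslmode` parameter of a PostgreSQL connection URI. As a minimal sketch, the Python function below validates the mode and places it in such a URI. The function name, host, database, and user are hypothetical, and the sketch does not show how Gathr builds its own connection settings.

```python
from urllib.parse import quote, urlencode

PG_SSL_MODES = ("disable", "allow", "prefer", "require", "verify-ca", "verify-full")


def postgres_uri(host, port, database, user, sslmode):
    """Build a PostgreSQL connection URI that carries the chosen sslmode."""
    if sslmode not in PG_SSL_MODES:
        raise ValueError(f"unknown sslmode: {sslmode!r}")
    query = urlencode({"sslmode": sslmode})
    return (
        f"postgresql://{quote(user, safe='')}@{host}:{port}"
        f"/{quote(database, safe='')}?{query}"
    )


# Hypothetical connection details, for illustration only.
print(postgres_uri("db.example.com", 5432, "sales", "etl_user", "verify-full"))
```

The mode names are case-sensitive in this sketch, so a value such as `VERIFY_FULL` is rejected.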

Continue with the operator’s configuration:

Query: SQL queries used to perform operations.

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).

SQL Sensor

The SQL Sensor runs the SQL statement repeatedly for as long as the first cell of the result is in (0, '0', ''), and succeeds once the first cell holds any other value.

It reruns the SQL statement after each poke interval until the time-out interval elapses.

Connection ID: Select from the drop-down a connection ID that is defined in Airflow for the required SQL database.

Time Out Interval: Maximum time (in seconds) for which the sensor keeps checking once triggered.

Poke Interval: Time (in seconds) that the sensor waits between tries.

Query: SQL queries used to perform operations.

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).
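The interaction between the query result, the Poke Interval, and the Time Out Interval can be sketched in Python as a polling loop. This is a simplified model, not the sensor's actual implementation: `sql_sensor` and `run_query` are hypothetical names, and treating an empty result or a `None` cell as "not yet met" is an assumption borrowed from Airflow's SQL sensor.

```python
import time

# First-cell values that mean the condition is not met yet.
# None is included as an assumption; the text above lists 0, '0' and ''.
NOT_MET_VALUES = (0, "0", "", None)


def sql_sensor(run_query, poke_interval, timeout,
               sleep=time.sleep, clock=time.monotonic):
    """Poll run_query() until its first cell is set or the timeout passes.

    run_query returns a list of row tuples. Returns True on success and
    False when another poke would exceed the timeout.
    """
    deadline = clock() + timeout
    while True:
        rows = run_query()
        if rows and rows[0][0] not in NOT_MET_VALUES:
            return True
        if clock() + poke_interval > deadline:
            return False
        sleep(poke_interval)


# Simulated query results: two unmet pokes, then a non-zero count.
results = iter([[(0,)], [("0",)], [(5,)]])
print(sql_sensor(lambda: next(results), poke_interval=0.01, timeout=1))
```

The `sleep` and `clock` parameters exist only so that the loop can be exercised without real waiting.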

Time Delta Sensor

This sensor waits for a given amount of time before succeeding. Provide the following configurations:

Time Out Interval: Maximum time for which the sensor waits.

Retries: Number of times the workflow tries to run this task in case of failure.

Trigger Rule: Rules to define trigger conditions of this node.

SLA: Provide SLA related details (if required).
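The waiting rule amounts to a simple time comparison. The Python sketch below shows that check in isolation; `wait_is_over` is a hypothetical helper, and the reference point from which Gathr measures the wait is an assumption here.

```python
from datetime import datetime, timedelta


def wait_is_over(started_at, wait, now):
    """Return True once `wait` has elapsed since `started_at`."""
    return now >= started_at + wait


started = datetime(2024, 1, 1, 12, 0, 0)
five_minutes = timedelta(minutes=5)

# One second before the wait ends, then exactly at the end of the wait.
print(wait_is_over(started, five_minutes, datetime(2024, 1, 1, 12, 4, 59)))
print(wait_is_over(started, five_minutes, datetime(2024, 1, 1, 12, 5, 0)))
```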

Connection ID

You can create connections from the Connection ID field. A Create Connection pop-up appears. Provide the information required to create the connection in this pop-up.

The following types of connections can be created:

BigQuery

HTTP

MSSQL

MySQL

Postgres

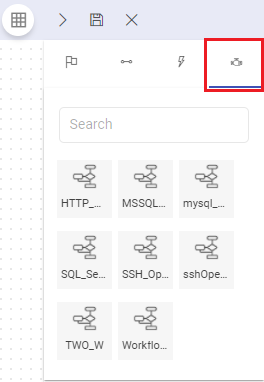

Workflow Nodes

All the workflows created in Gathr by the logged-in user are listed in the Workflow node.

You can add a workflow as an operator inside another workflow, in the same way as a pipeline. The added workflow is executed as a sub-workflow, in a separate workflow instance.
