A Data Source represents the source for a data pipeline in Gathr.

Typically, you start creating a pipeline by selecting a Data Source for reading data. Data Source can also help you to infer schema from Schema Type which can directly from the selected source or by uploading a sample data file.

Gathr Data Sources are built-in drag and drop operators. The incoming data can be in any form such as message queues, transactional databases, log files and many more.

Gathr runs on the computation system: Spark.

Within Spark, we have two types of Data Source’s behavior:

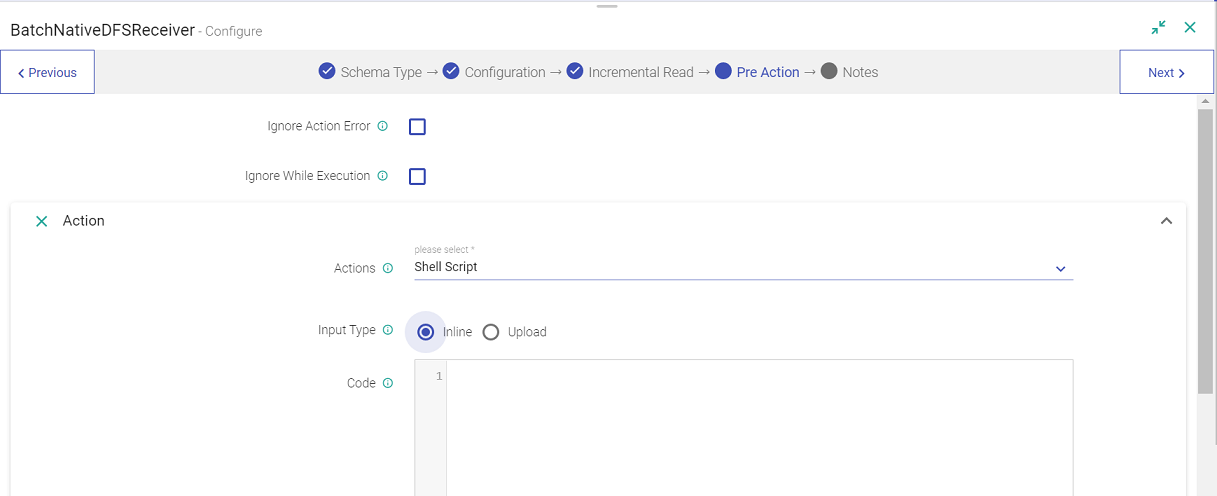

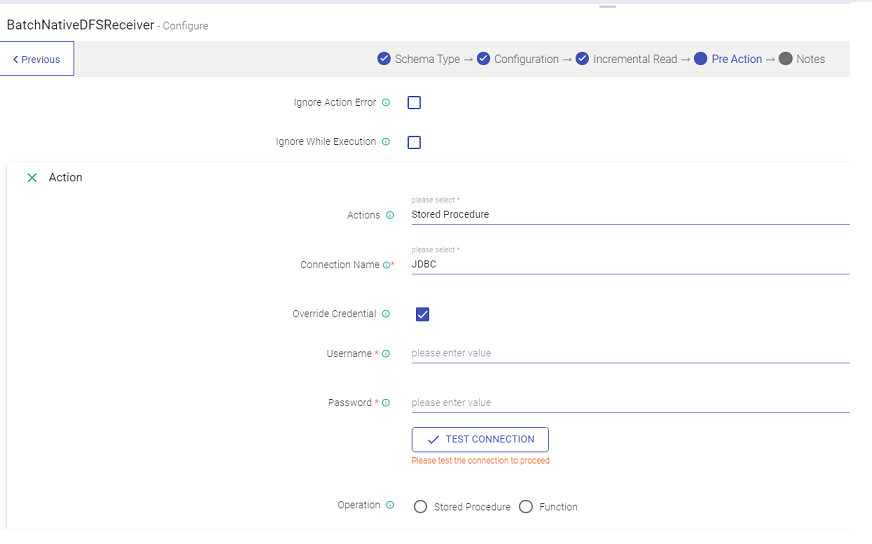

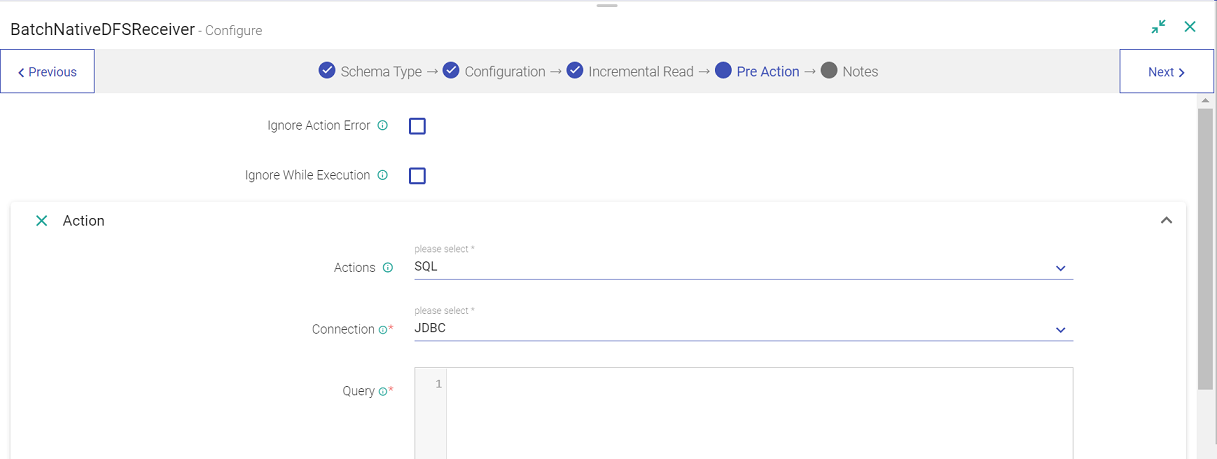

The user may want to perform certain actions before the execution of source components. A Pre-Action tab is available at the source.

These actions could be performed on the Source:

- If for some reason the action configured fails to execute, the user has an option to check mark the ‘Ignore Action Error’ option so that the pipeline runs without getting impacted.

- By check marking the ‘Ignore while execution’ option, the configuration will remain intact in the pipeline, but the configured action will not get executed.

- The user can also configure multiple actions by clicking at the Add Action button.

This feature helps define the schema while loading the data; with Auto Schema feature, first load data from a source then infer schema, modify data types and determine the schema on the go.

This feature helps design the pipeline interactively. Data can be loaded in the form of CSV/TEXT/JSON/XML/Fixed Length and Parquet file or you can fetch data from sources such as Kafka, JDBC and Hive. Auto schema enables the creation of schema within the pre-built operators and identifies columns in CSV/JSON/TEXT/XML/Fixed Length and Parquet.

Gathr starts the inference by ingesting data from the data source. As each component processes data, the schema is updated (as per the configuration and the logic applied). During the process, Gathr examines each field and attempts to assign a data type to that field based on the values in the data.

All our components (source) have auto schema enabled. Below mentioned are the common configuration of Auto schema for every Data Source.

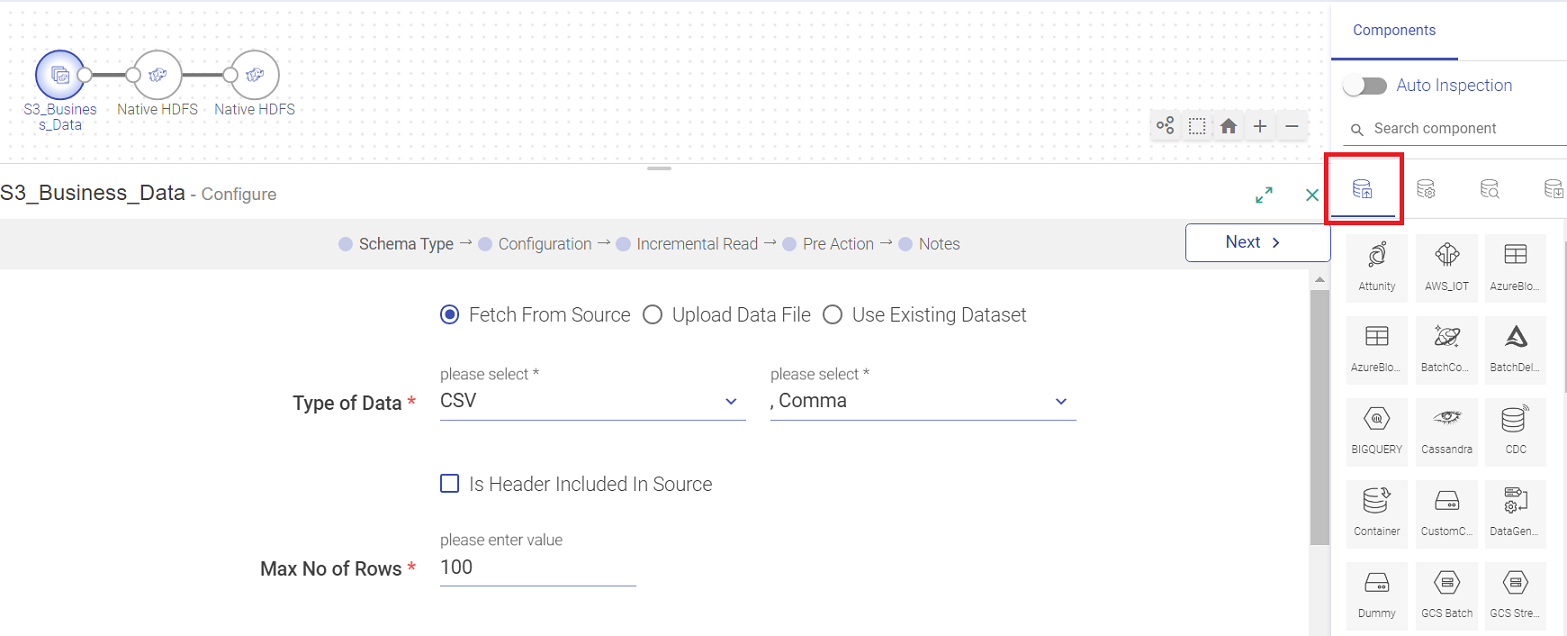

To add a Data Source to your pipeline, drag the Data Source to the canvas and right click on it to configure.

There are three tabs for configuring a component.

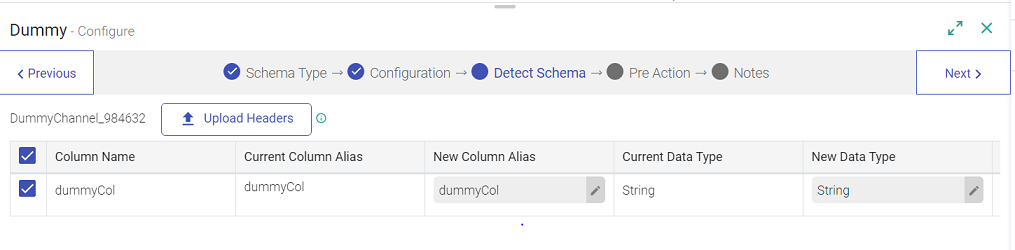

Note: An additional tab (DETECT SCHEMA) is reflected. This tab presents your data in the form of Schema.

Schema Type allows you to create a schema and the fields. On the Schema Type tab, select either of the below mentioned options:

Whether the data is fetched from the source or uploaded, the following configuration properties remain the same:

Note: In case of ClickStream Data Source, you can also choose from an existing schema.

Fetch data from any data source.

Depending on the type of data, determine the Data Source you want to use, then recognize the type of data (or data format) and its corresponding delimiters.

These are four types of data formats supported by Gathr to extract schema from them; CSV, JSON, TEXT, XML, Fixed Length and Parquet.

Once you choose a data type, you can edit the schema and then configure the component as per your requirement.

Note: Whenever Fetch from Source is chosen, the sequence of tabs will be as follows:

Fetch from Source< Configuration < Schema < Add Notes.

The data that is being fetched from the source is in the CSV format. From within the CSV, the data columns that will be parsed, will be accepting the following delimiters.

The default delimiter is comma (,).

If the data is fetched from the source, after uploading a CSV/TEXT/JSON/Parquet, next tab is the Configuration, where the Data Sources are configured and accordingly the schema is generated. Then you can add notes, and save the Data Source’s configuration.

Select JSON as your Type of Data. The data that is being fetched from the source is in JSON format. The source will read the data in the format it is available.

The System will fetch the incoming XML data from the source.

Select XML as your Type of Data and provide the XML XPath value.

XML XPath - It is the path of the tag of your XML data to treat as a row. For example, in this XML <books> <book><book>...</books>, the appropriate XML Xpath value for book would be /books/book.

The Default value of XML XPath is '/' which will parse the whole XML data.

The System will parse the incoming fixed length data. Select 'Fixed Length' as your Type of Data and Field Length value.

The Field length value is a comma separated length of each field.

If there are 3 fields f1,f2,f3 and their max length is 4,10 & 6 respectively.Then the field length value for this data would be 4,10,6.

Select Parquet as your Type of data. The file that is being fetched from the source is in parquet format. The source will read the data in the format it is available.

Text message parser is used to read data in any format from the source, which is not allowed when csv, json etc. parsers are selected in configuration. Data is read as text, a single column dataset.

When you select the TEXT as “Type of Data” in configuration, data from the source is read by Gathr in a single column dataset.

To parse the data into fields append the Data Source with a Custom Processor where custom logic for data parsing can be added.

Upload your data either by using a CSV, TEXT, JSON, XML, Fixed Length or a Parquet file.

Once you choose either data type, you can edit the schema and then configure the component as per your requirement:

Note: Whenever you choose Upload Data File, the sequence of tabs will be as follows:

Upload Data File< Detect Schema< Configuration< Add Notes.

You can choose the data type as CSV and the data formats are as follows:

Once you choose the CSV, the schema is uploaded.

After CSV/TEXT/JSON/XML Fixed Length is uploaded, next tab is the Detect Schema, which can be edited as per requirements. Next is the Configuration tab, where the Data Sources are configured as per the schema. Then you can add notes, and save the Data Source’s configuration.

Following fields in the schema are editable:

Note: Nested JSON cannot be edited.

• Schema Name: Name of the Schema can be changed.

• Column Alias: Column alias can be renamed.

• Date and Timestamp: Formats of dates and timestamps can be changed. Note: Gathr has migrated from spark 2 to spark 3.0. For further details on upgrading Gathr to Spark 3.0, refer to the link shared below:

https://spark.apache.org/docs/3.0.0-preview2/sql-migration-guide.html

• Data Type: Field type can be any one of the following and they are editable:

When you select JSON as your type of data, the JSON is uploaded in the Detect Schema tab, where you can edit the Key-Value pair.

After the schema is finalized, the next tab is configuration of Data Source (Below mentioned are the configurations of every Data Source).

Note: In case of Hive and JDBC, the configuration tab appears before the Detect schema tab.

The Text files can be uploaded to a source when the data available requires customized parsing. Text message parser is used to read data in any format from the source, which is not allowed when CSV, JSON, etc. parsers are selected in configuration.

To parse the data into fields append the Data Source with a Custom Processor where custom logic for data parsing can be added.

The system will parse the incoming XML data from the uploaded file. Select XML as your Type of Data and provide the XML XPath of the XML tag. These tags of your XML files are reflected as a row.

For example, in this XML <books> <book><book>...</books>, the output value would be /books/book

You can also provide / in XML X path. It is generated by default.

The system will parse the incoming fixed length data. If the field has data in continuation without delimiters, you can separate the data using Fixed Length data type.

Separate the field length (numeric unit) by comma in the field length.

When Parquet is selected as the type of data, the file is uploaded in the schema and the fields are editable.

Note: Parquet is only available in HDFS and Native DFS Receiver.

Auto schema infers headers by comparing the first row of the file with other rows in the data set. If the first line contains only strings, and the other lines do not, auto schema assumes that the first row is a header row.

After the schema is finalized, the next tab is configuration. Once you configure the Data Source, you can add notes and save the Data Source’s configuration.

Along with fetching data from a source directly and uploading the data on the data source; you can also, use an existing dataset in the data source RabbitMQ and DFS.

To read more about it, Refer the “Use Existing Dataset " in the Dataset Section.

Add an ADLS batch or streaming data source to create a pipeline. Click the component to configure it.

Under the Schema Type tab, select Fetch From Source or Upload Data File. Edit the schema if required and click next to configure.

Provide the below fields to configure ADLS data source:

Click Next for Incremental Read option.

Note: The incremental Read option is available only for ADLS Batch.

Add an Advanced Mongo data source into your pipeline. Drag the data source to the canvas and click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File. Edit the schema if required and click next to Configure the Advanced Mongo source.

Configuring Advanced Mongo

Incremental Read in Advanced Mongo

Configure Pre-Action in Source

Configuring an Attunity Data Source

To add an Attunity Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File. Edit the schema if required and click next to Configure Attunity.

Configuring Attunity

Click on the add notes tab. Enter the notes in the space provided.

Configuring a Data Topics and Metadata Topic tab

Choose the topic names and their fields are populated which are editable. You can choose as many topics.

Choose the metadata topic and the topics’ fields are populated which are editable. You can only choose metadata of one Topic. Zookeeper path to store the offset value at per-consumer basis. An offset is the position of the consumer in the log.

Click Done to save the configuration.

AWS-IoT and Gathr allows collecting telemetry data from multiple devices and process the data.

Note: Every action we perform on Gathr is reflected on AWS-IoT Wizard and vice versa.

To add an IoT channel into your pipeline, drag the channel to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File.

Fetch from source takes you to Configuration of AWSIoT.

Upload Data File will take you to Detect Schema page.

Click on the add notes tab. Enter the notes in the space provided.

An Azure Blob Batch channel reads different formats of data in batch (json, csv, orc, parquet) from container. It can omit data into any emitter.

Configuring an Azure Blob Data Source

To add an Azure Blob Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, you can Upload Data File and Fetch From Source. Select the connection name from the available list of connections, from where you would like to read the data.

Configure Pre-Action in Source

An Azure Blob Stream channel reads different formats of streaming data (json, csv, orc, parquet) from container and emit data into different containers.

Configuring an Azure Blob Data Source

To add an Azure Blob Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, you can Upload Data File and Fetch From Source.

On Cosmos Channel, you will be able to read from selected container of a Database in batches. You can use custom query in case of Batch Channel.

Configuring a Batch Cosmos Data Source

To add a Batch Cosmos Data Source into your pipeline, drag the Data Source to the canvas and click on it to configure.

Under the Schema Type tab, you can Upload Data File and Fetch From Source.

Configure Pre-Action in Source

Click Next for Incremental Read option

On Delta Lake Channel, you should be able to read data from delta lake table on S3, HDFS, GCS, ADLS or DBFS. Delta Lake is an open-source storage layer that brings ACID transactions to Apache Spark™ and big data workloads. All data in Delta Lake is stored in Apache Parquet format. Delta Lake provides the ability to specify your schema and enforce it along with timestamps.

To add a Delta Data Source into your pipeline, drag the Data Source to the canvas and click on it to configure.

Under the Schema Type tab, you can Upload Data File and Fetch From Source. Below are the configuration details of the Delta Source (Batch and Streaming):

Configure Pre-Action in Source

The configuration for BIGQUERY is mentioned below:

Next, in the Detect Schema window, the user can set the schema as dataset by clicking on the Save As Dataset checkbox. Click Next. The user can set the Incremental Read option.

Note: The user can enable Incremental Read option if, date or integer column in our input data.

Configure Pre-Action in Source

Cassandra Data Source reads data from Cassandra cluster using specified keyspace name and table name.

Configuring Cassandra Data Source

To add a Cassandra Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, you can Upload Data File and Fetch From Source.

Note: Casandra keyspace name and table name should exist in the Cassandra cluster. Select the connection name from the available list of connections, from where you would like to read the data.

Configure Pre-Action in Source

The CDC Data Source processes Change Data Capture (CDC) information provided by Oracle LogMiner redo logs from Oracle 11g or 12c.

CDC Data Source processes data based on the commit number, in ascending order. To read the redo logs, CDC Data Source requires the LogMiner dictionary.

Follow the Oracle CDC Client Prerequisites, before configuring the CDC Data Source.

Oracle CDC Client Prerequisites

Before using the Oracle CDC Client Data Source, complete the following tasks:

2. Enable supplemental logging for the database or tables.

3. Create a user account with the required roles and privileges.

4. To use the dictionary in redo logs, extract the Log Miner dictionary.

5. Install the Oracle JDBC driver.

LogMiner provides redo logs that summarize database activity. The Data Source uses these logs to generate records.

LogMiner requires an open database in ARCHIVELOG mode with archiving enabled. To determine the status of the database and enable LogMiner, use the following steps:

1. Log into the database as a user with DBA privileges.

2. Check the database logging mode:

If the command returns ARCHIVELOG, you can skip to Task 2.

If the command returns NOARCHIVELOG, continue with the following steps:

3. Shut down the database.

4. Start up and mount the database:

5. Configure enable archiving and open the database:

Task 2. Enable Supplemental Logging

To retrieve data from redo logs, LogMiner requires supplemental logging for the database or tables.

1. To verify if supplemental logging is enabled for the database, run the following command: SELECT supplemental_log_data_min, supplemental_log_data_pk, supplemental_log_data_all FROM v$database;

For 12c multi-tenant databases, best practice is to enable logging for the container for the tables, rather than the entire database. You can use the following command first to apply the changes to just the container:

You can enable identification key or full supplemental logging to retrieve data from redo logs. You do not need to enable both:

To enable identification key logging

You can enable identification key logging for individual tables or all tables in the database:

Use the following commands to enable minimal supplemental logging for the database, and then enable identification key logging for each table that you want to use: ALTER DATABASE ADD SUPPLEMENTAL LOG DATA; ALTER TABLE <schema name>.<table name> ADD SUPPLEMENTAL LOG DATA (PRIMARY KEY) COLUMNS;

Use the following command to enable identification key logging for the entire database:

To enable full supplemental logging

You can enable full supplemental logging for individual tables or all tables in the database:

Use the following commands to enable minimal supplemental logging for the database, and then enable full supplemental logging for each table that you want to use: ALTER DATABASE ADD SUPPLEMENTAL LOG DATA; ALTER TABLE <schema name>.<table name> ADD SUPPLEMENTAL LOG DATA (ALL) COLUMNS;

Use the following command to enable full supplemental logging for the entire database:

To submit the changes

Create a user account to use with the Oracle CDC Client Data Source. You need the account to access the database through JDBC.

Create accounts differently based on the Oracle version that you use:

Oracle 12c multi-tenant databases

For multi-tenant Oracle 12c databases, create a common user account. Common user accounts are created in cdb$root and must use the convention: c##<name>.

1. Log into the database as a user with DBA privileges.

2. Create the common user account:

Repeat the final command for each table that you want to use.

When you configure the origin, use this user account for the JDBC credentials. Use the entire user name, including the "c##", as the JDBC user name.

For standard Oracle 12c databases, create a user account with the necessary privileges:

1. Log into the database as a user with DBA privileges.

2. Create the user account: CREATE USER <user name> IDENTIFIED BY <password>; GRANT create session, alter session, select any dictionary, logmining, execute_catalog_role TO <user name>;

Repeat the last command for each table that you want to use.

When you configure the Data Source, use this user account for the JDBC credentials.

For Oracle 11g databases, create a user account with the necessary privileges:

1. Log into the database as a user with DBA privileges.

2. Create the user account: CREATE USER <user name> IDENTIFIED BY <password>; GRANT create session, alter session, select any dictionary, logmining, execute_catalog_role TO <user name>;

Repeat the final command for each table that you want to use.

When you configure the origin, use this user account for the JDBC credentials.

Task 4. Extract a Log Miner Dictionary (Redo Logs)

When using redo logs as the dictionary source, you must extract the Log Miner dictionary to the redo logs before you start the pipeline. Repeat this step periodically to ensure that the redo logs that contain the dictionary are still available.

Oracle recommends that you extract the dictionary only at off-peak hours since the extraction can consume database resources.

To extract the dictionary for Oracle 11g or 12c databases, run the following command:

To extract the dictionary for Oracle 12c multi-tenant databases, run the following commands: ALTER SESSION SET CONTAINER=cdb$root; EXECUTE DBMS_LOGMNR_D.BUILD(OPTIONS=> DBMS_LOGMNR_D.STORE_IN_REDO_LOGS);

The Oracle CDC Client origin connects to Oracle through JDBC. You cannot access the database until you install the required driver.

Note: JDBC Jar is mandatory for Gathr Installation and is stored in Tomcat Lib and Gathr third party folder.

The CDC Data Source processes Change Data Capture (CDC) information provided by Oracle LogMiner redo logs from Oracle 11g or 12c.

CDC Data Source processes data based on the commit number, in ascending order. To read the redo logs, CDC Data Source requires the LogMiner dictionary.

The Data Source can create records for the INSERT, UPDATE and DELETE operations for one or more tables in a database

To add a CDC Data Source into your pipeline, drag the processor to the canvas and right click on it to configure.

To configure your schema, you can either use configured pluggable database or non-pluggable database.

The container Data Source is used to read the data from Couchbase. This Data Source is used as a caching container which read both the aggregated data and raw data from the bucket.

Configuring a Container Data Source

To add a Container Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema tab, you can Upload Data File Upload Data File and Fetch From Source.

Choose the scope of the Template (Global or Workspace) and Click Done for saving the Template and configuration details.

Configure Pre-Action in Source

Custom Data Source allows you to read data from any data source.

You can write your own custom code to ingest data from any data source and build it as a custom Data Source. You can use it in your pipelines or share it with other workspace users.

Create a jar file of your custom code and upload it in a pipeline or as a registered component utility.

To write a custom code for your custom Data Source, follow these steps:

1. Download the Sample Project. (Available on the home page of Data Pipeline).

Import the downloaded Sample project as a maven project in Eclipse. Ensure that Apache Maven is installed on your machine and that the PATH for the same is set on the machine.

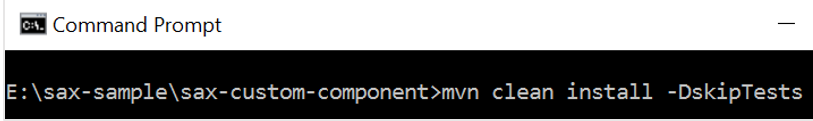

2. Implement your custom code and build the project. To create a jar file of your code, use the following command: mvn clean install –DskipTests.

For a Custom Data Source, add your custom logic in the implemented methods of the classes as mentioned below:

If you want high level, abstraction using only Java code, then extend BaseSource as shown in SampleCustomData Source class

com.yourcompany.component.ss.Data Source.SampleCustomData Source which extends BaseSource

• public void init(Map<String, Object> conf)

• public List<String> receive()

If you want low-level implementation using spark API, then extend AbstractData Source as shown in SampleSparkSourceData Source class.

com.yourcompany.component.ss.Data Source.SampleSparkSourceData Source extends AbstractData Source

• public void init(Map<String, Object> conf)

• public Dataset<Row> getDataset(SparkSession spark)

Configuring Custom Data Source

While uploading data you can also upload a Dataset in Custom Data Source.

Upload the custom code jar using the Upload jar button from the pipeline designer page. You can use this Custom Data Source in any pipeline.

Step 4: Click Done to save the configuration.

Data Generator Data Source generates test data for testing your pipelines. Once the pipeline is tested with fields and their random data, you can replace the Data Generator Data Source.

Configuring Data Generator Data Source

To add a Data Generator Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, you can only Upload Data File to this Data Source.

You can upload a file, of either JSON or CSV format to test data.

Every row from the file is treated as a single message.

Check the option repeat data, if you wish to continuously generate data from uploaded file.

Click on the add notes tab. Enter the notes in the space provided. Click Done to save the configuration.

This component is supported in Gathr on-premise. User have an option to utilize any existing dataset as a channel in the data pipeline.

On the Schema Type tab, update configuration for required parameters with reference to below table:

Once the Schema and Rules for the existing dataset are validated, click Next and go to the Incremental Read tab.

Incremental Read

Click NEXT to detect schema from the File Path file. Click Done to save the configuration.

Configure Pre-Action in Source.

Dummy channel is required in cases where the pipeline has a processor and emitter that does not require a channel.

For example, in case you only want to check the business logic or the processing of data, but do not require a data source to generate data for this pipeline. Now, with StreamAnalytix it is a mandatory to have an emitter in the pipeline. In such a scenario, you can use a Dummy Channel so that you can test the processors without the requirement of generating the data using an actual channel.

StreamAnalytix provides batch and streaming GCS (Google Cloud Storage) channels. The configuration for GCS data source is specified below:

- The user can add configuration by clicking at the ADD CONFIGURATION button.

- Next, in the Detect Schema window, the user can set the schema as dataset by clicking on the Save As Dataset checkbox.

- The Incremental Read option will be in GCS batch data source and not in the GCS Streaming channel.

Configure Pre-Action in Source

This component is supported in Gathr on-premise. A local file data source allows you to read data from local file system. Local file System is File System where Gathr is deployed.

Note: File reader is for batch processing.

The Batch data can be fetched from source or you can upload the files.

Schema tab allows you to create a schema and the fields. On the Detect Schema tab, select a Data Source or Upload Data.

Click NEXT to detect schema from the File Path file. Click Done to save the configuration.

Configure Pre-Action in Source

To add an HDFS Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Schema Type tab allows you to create a schema and the fields. On the Detect Schema tab, select a Data Source or Upload Data.

For an HDFS Data Source, if data is fetched from the source, and the type of data is CSV, the schema has an added tab, Is Header Included in source

Click on the Add Notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

Configure Pre-Action in Source

To use a Hive Data Source, select the connection and specify a warehouse directory path.

Note: This is a batch component.

To add a Hive Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Detect Schema Type tab, select Fetch From Source or Upload Data File.

Configuring Hive Data Source Single: If only one type of message will arrive on the Data Source. Multi: If more than one type of message will arrive on the Data Source.

After the query, Describe Table and corresponding Table Metadata, Partition Information, Serialize and Reserialize Information is populated.

Make sure that the query you run matches with the schema created with Upload data or Fetch from Source.

Click Done to save the configuration.

Configure Pre-Action in Source

Through HTTP Data Source, you can consume different URI and use that data as datasets in pipeline creation.

For example, there is a URL which returns the list of employees in JSON, TEXT or CSV format. You can consume this URL through HTTP Channel and use it as dataset for performing operations.

To add a HTTP into your pipeline, drag the Data Source to the canvas and right click on it to configure.

This option specify that URL can be accessed without any authentication.

This option specify that accessing URL requires Basic Authorization. Provide user name and password for accessing the URL.

Token-based authentication is a security technique that authenticates the users who attempts to log in to a server, a network, or other secure system, using a security token provided by the server

Oauth2 is an authentication technique in which application gets a token that authorizes access to the user's account.

Configure Pre-Action in Source

This component is supported in Gathr on-premise. Under the Schema Type tab, select Fetch From Source or Upload Data File.

When fetch from source is chosen, the schema tab comes after the configuration, and when you upload data, the schema tab comes before configuration.

Configuring Impala Data Source

Click on the Add Notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

JDBC Channel supports Oracle, Postgres, MYSQL, MSSQL,DB2 connections.

You can configure and test the above mentioned connection with JDBC. It allows you to extract the data from DB2 and other source into your data pipeline in batches after configuring JDBC channel.

Note: This is a batch component.

Prerequisite: Upload appropriate driver jar as per the RDBMS used in JDBC Data Source. Use the upload jar option.

For using DB2, create a successful DB2 Connection.

To add a JDBC into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File. or use existing dataset.

Enter the schema and select table. You can view the Metadata of the tables.

Once the Metadata is selected, Click Next and detect schema to generate the output with Sample Values. The next tab is Incremental Read.

Enter the schema and select table. You can view the Metadata of the tables.

Click Done to save the configuration.

Configure Pre-Action in Source

Under the Schema Type tab, select Fetch From Source or Upload Data File.

When fetch from source is chosen, the schema tab comes after the configuration, and when you upload data, the schema tab comes before configuration.

Configuring Kafka Data Source

Click on the Add Notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

Kinesis Data Source allows you to fetch data from Amazon Kinesis stream.

Configuring Kinesis Data Source

Under the Schema Type tab, select Upload Data File.

Click on the Add Notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

Apache Kudu is a column-oriented data store of the Apache Hadoop ecosystem. It enable fast analytics on fast (rapidly changing) data. The channel is engineered to take advantage of hardware and in-memory processing. It lowers query latency significantly from similar type of tools.

To add a KUDU Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File. Connections are the service identifiers. Select the connection name from the available list of connections, from where you would like to read the data.

Enter the schema and select table. You can view the Metadata of the tables.

Once the Metadata is selected, Click Next and detect schema to generate the output with Sample Values. The next tab is Incremental Read.

Enter the schema and select table. You can view the Metadata of the tables.

Click on the Add Notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

Configure Pre-Action in Source

Mqtt Data Source reads data from Mqtt queue or topic.

To add a MQTT Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Sourceor Upload Data File. Connections are the service identifiers. Select the connection name from the available list of connections, from where you would like to read the data.

Click on the Add Notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

This Data Source enables you to read data from HDFS. This is a streaming component.

For a NativeDFS Receiver Data Source, if data is fetched from the source, and the type of data is CSV, the schema has an added tab that reads, Is Header Included in source.

This is to signify if the data that is fetched from source has a header or not.

If Upload Data File is chosen, then there is an added tab, which is Is Header included in Source.

All the properties are same as HDFS.

This component is supported in Gathr on-premise. A Native File Reader Data Source allows you to read data from local file system. Local file System is the File System where Gathr is deployed.

Note: Native File reader is for Streaming processing.

Streaming data can be fetched from source or you can upload the files.

Detect Schema tab allows you to create a schema and the fields. On the Detect Schema tab, select a Data Source or Upload Data.

Click NEXT to detect schema from the File Path file. Click Done to save the configuration.

OpenJMS is a messaging standard that allows application components to create, send, receive and read messages.

OpenJMS Data Source reads data from OpenJMS queue or topic.

The JMS (Java Message Service) supports two messaging models:

1. Point to Point: The Point-to-Point message producers are called senders and the consumers are called receivers. They exchange messages by means of a destination called a queue: senders produce messages to a queue; receivers consume messages from a queue. What distinguishes the point-to-point messaging is that only one consumer can consume a message.

2. Publish and Subscribe: Each message is addressed to a specific queue, and the receiving clients extract messages from the queues established to hold their messages. If no consumers are registered to consume the messages, the queue holds them until a consumer registers to consume them.

Configuring OpenJMS Data Source

To add an OpenJMS Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File. Connections are the Service identifiers.Select the connection name from the available list of connections, from where you would like to read the data

Click on the add notes tab. Enter the notes in the space provided. Click Done to save the configuration.

The configuration for Pubsub data source is mentioned below:

The user can add further configuration by clicking at the ADD CONFIGURATION button.

The ‘Fetch from Source’ will work only if topic and subscription name exists and has some data already published in it.

Multiple Emitters to Pubsub source are supported only when auto create subscription name is enabled.

RabbitMQ Data Source reads messages from the RabbitMQ cluster using its exchanges and queues.

Configuring RabbitMQ Data Source

To add a RabbitMQ into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File.

Click on the add notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

RDS emitter allows you to write to RDS DB Engine, which could be through SSl or without ssl. RDS is Relational Database service on Cloud.

RDS Channel can read in Batch from the RDS Databases (Postgresql, MySql, Oracle, Mssql). RDS is Relational Database service on Cloud. The properties of RDS are similar to those of a JDBC Connector with one addition of SSL Security.

SSL Security can be enabled on RDS Databases.

System should be able to connect, read and write from SSL Secured RDS.

If security is enabled, it will be configured in Connection and automatically propagated to channel.

Please note: SSL Support is not available for Oracle.

To add a RDS Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File:

Enter the schema and select table. You can view the Metadata of the tables.

Once the Metadata is selected, Click Next and go to the Incremental Read tab.

Enter the schema and select table. You can view the Metadata of the tables.

Amazon Redshift is a fully managed, petabyte-scale data warehouse service in the cloud.

Note: This is a batch component.

Configuring RedShift Data Source

To add a Redshift Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File. Single: If only one type of message will arrive on the Data Source. Multi: If more than one type of message will arrive on the Data Source.

Click Done to save the configuration.

Configure Pre-Action in Source

S3 data source reads objects from Amazon S3 bucket. Amazon S3 stores data as objects within resources called Buckets.

For an S3 data source, if data is fetched from the source, and the type of data is CSV, the schema has an added tab, Header Included in source.

This is to signify if the data that is fetched from source has a header or not.

If Upload Data File is chosen, then there is an added tab, which is Is Header Included in the source. This signifies if the data uploaded is included in the source or not.

To add the S3 data source into your pipeline, drag the source to the canvas and click on it to configure.

Under the Schema Type tab, select Fetch From Sourceor Upload Data File.

Click on the add notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

S3 Batch Channel can read data from S3 Buckets in incremental manner. Amazon S3 stores data as objects within resources called Buckets.

On a S3 Batch Channel you will be able to read data from specified S3 Bucket with formats like json, CSV, Text, Parquet, ORC. How it helps is only the files modified after the specified time would be read.

For an S3 Data Source, if data is fetched from the source, and the type of data is CSV, the schema has an added tab, Is Header Included in source.

This is to signify if the data that is fetched from source has a header or not.

If Upload Data File is chosen, then there is an added tab, which is Is Header included in Source. This signifies if the data is uploaded is included in source or not.

Configuring S3 Batch Data Source

To add a S3 Batch Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

To add a S3 Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch From Source or Upload Data File.

Click Next for Incremental Read option.

Note: The incremental Read option is available only for S3 Batch.

Click on the add notes tab. Enter the notes in the space provided.

Click Done to save the configuration.

Configure Pre-Action in Source

Parse Salesforce data from the source itself or import a file in either data type format except Parquet. Salesforce channel allows to read Salesforce data from a Salesforce account. Salesforce is a top-notch CRM application built on the Force.com platform. It can manage all the customer interactions of an organization through different media, like phone calls, site email inquiries, communities, as well as social media. This is done by reading Salesforce object specified by Salesforce Object Query Language.

However there are a few pre-requisites to the same.

First is to create a Salesforce connection and for that you would require the following:

l A valid Salesforce accounts.

l User name of Salesforce account.

l Password of Salesforce account.

l Security token of Salesforce account.

Configuring Salesforce Data Source

To add a Salesforce Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch from Source or Upload Data File

Now, select a Salesforce connection and write a query to fetch any Salesforce object. Then provide an API version.

Configure Pre-Action in Source

SFTP channel allows user to read data from network file system.

Configuring SFTP Data Source

Configure Pre-Action in Source

The snowflake cloud-based data warehouse system can be used as a source/channel in Gathr for configuring ETL pipelines.

Configure the snowflake source by filling the below mentioned details: The user will be required to specify the maximum number of clusters for the warehouse. Specifies whether to automatically resume a warehouse when a SQL statement (e.g. query) is submitted to it.

Configure Pre-Action in Source

Socket Data Source allows you to consume data from a TCP data source from a pipeline. Configure Schema type and choose a Socket connection to start streaming data to a pipeline.

Configuring Socket Data Source

To add a Socket Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, select Fetch from Source or Upload Data File. Connections are the Service identifiers. Select the connection name from the available list of connections, from where you would like to read the data.

Click on the add notes tab. Enter the notes in the space provided. Click Done to save the configuration.

This component is supported in Gathr on-premise. SQS Channel allows you to read data from different SQS queues. The Queue types supported are Standard and FIFO. Along with providing maximum throughput you can achieve scalability using the buffered requests independently.

To add a SQS Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema tab, you can select the Max No. of Rows and Sampling Method as Top-N or Random Sample.

Stream Cosmos Channel can read data in stream manner from CosmosDB (SQL API). It reads updated documents by specifying change-feed directory and using its options.

Note: Both BatchCosmos and StreamCosmos works with Local Session.

Configuring a Stream Cosmos Data Source

To add a Stream Cosmos Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, you can Upload Data File and Fetch From Source. Select the connection name from the available list of connections, from where you would like to read the data. It is the file path where Cosmos stores the checkpoint data for Change feed.

On Delta Lake Channel, you should be able to read data from delta lake table on S3, HDFS or DBFS.

Configuring a Streaming Delta Data Source.

This component is supported in Gathr on-premise. VERTICA Channel supports Oracle, Postgres, MYSQL, MSSQL, DB2 connections.

You can configure and connect above mentioned DB-engines with JDBC. It allows you to extract the data from DB2 and other sources into your data pipeline in batches after configuring JDBC channel.

Note: This is a batch component.

Prerequisite: Upload appropriate driver jar as per the RDBMS used in JDBC Data Source. Use the upload jar option.

For using DB2, create a successful DB2 Connection.

Configuring SQS a Vertica Data Source

To add a SQS Vertica Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Enter the schema and select table. You can view the Metadata of the tables:

Once the Metadata is selected, Click Next and detect schema to generate the output with Sample Values. The next tab is Incremental Read.

Enter the schema and select table. You can view the Metadata of the tables.

Click Done to save the configuration.

Configure Pre-Action in Source

This component is supported in Gathr on-premise. A TIBCO Enterprise Management Service (EMS) server provides messaging services for applications that communicate by monitoring queues.

To add a Tibco Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema Type tab, you can Upload Data File and Fetch From Source.

Click on the add notes tab. Enter the notes in the space provided. Click Done to save the configuration.

VSAM Channel reads data stored in COBOL EBCDIC and Text Format.

Configuring a COBOL Data Source

To add a VSAM Data Source into your pipeline, drag the Data Source to the canvas and right click on it to configure.

Under the Schema tab, you can select the Max No. of Rows and Sampling Method as Top-N or Random Sample.