Configure Gathr for Kerberos (Optional)

Prerequisites

Make sure that you have an existing MIT Kerberos installation.

In addition, you need a Kerberos-enabled CDH cluster.

Once the prerequisites are met and all of your setup properties are configured, follow the steps below to enable Kerberos in your environment.

Steps:

Create three principals using the kadmin utility: one for the Gathr user, one for the Kafka user, and one for H2O. These are "headless" principals. For example, if 'sanalytix' and 'kafka' are the Gathr and Kafka users respectively, run:

kadmin -q "addprinc -randkey sanalytix"

kadmin -q "addprinc -randkey kafka"

kadmin -q "addprinc -randkey h2o"

Use the kadmin utility to create keytab files for the above principals:

kadmin -q "ktadd -k <keytab-name>.keytab <username>"

Create a keytab for the H2O SPNEGO configuration:

kadmin -q "ktadd -k <keytab-name>.keytab <HTTP/hostname>"

Also ensure that the keytabs are readable only by the Gathr user. Example:

kinit -kt sax.service.keytab sax

kinit -kt sax.zk.keytab kafka

kinit -kt h2o.service.keytab h2o

Create a JAAS configuration file named keytab_login.conf with the following sections:

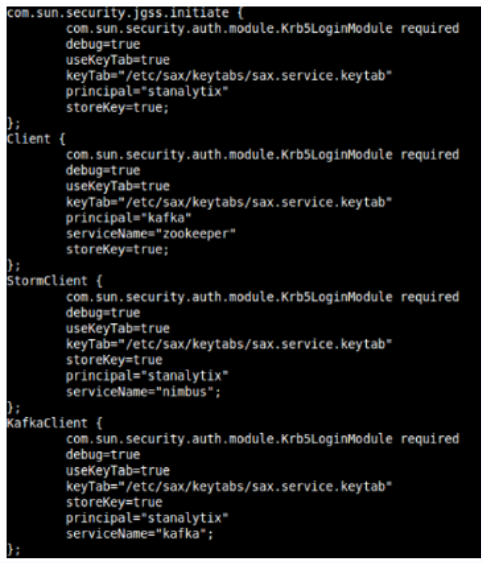

com.sun.security.jgss.initiate (For HTTP client authentication)

client (For Zookeeper)

StormClient (For Storm)

KafkaClient (For Kafka)

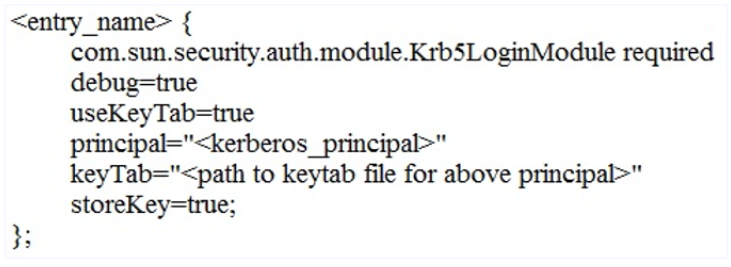

Each section in a JAAS configuration file while using keytabs for Kerberos security has the following format:

Shown below is the sample keytab_login.conf
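The shipped sample is not reproduced in this text. As a rough illustration only, the sketch below writes a keytab_login.conf with the four sections listed above, each using the standard Krb5LoginModule keytab options. The realm `EXAMPLE.COM`, the single shared keytab and principal, and the helper name `jaas_section` are assumptions for illustration, not Gathr defaults. Some clients may also expect extra options such as `serviceName`, so check the documentation of the component in question.

```shell
#!/bin/sh
set -eu
# Sketch only: writes an illustrative keytab_login.conf to a scratch directory.
# Assumed values (not taken from the Gathr documentation): KEYTAB, PRINCIPAL.
WORK_DIR="${WORK_DIR:-$(mktemp -d)}"
OUT="$WORK_DIR/keytab_login.conf"
KEYTAB="${KEYTAB:-/etc/security/keytabs/sax.service.keytab}"
PRINCIPAL="${PRINCIPAL:-sax@EXAMPLE.COM}"

# Emit one JAAS section that logs in from a keytab via Krb5LoginModule.
jaas_section() {
  cat <<EOF
$1 {
  com.sun.security.auth.module.Krb5LoginModule required
  useKeyTab=true
  storeKey=true
  useTicketCache=false
  keyTab="$KEYTAB"
  principal="$PRINCIPAL";
};

EOF
}

# Section names as listed in this guide.
for name in com.sun.security.jgss.initiate client StormClient KafkaClient; do
  jaas_section "$name"
done > "$OUT"

echo "Wrote $OUT"
```

Review the generated file and adjust the keytab and principal per section before copying it into the Gathr configuration folders.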

Move the keytabs and keytab_login.conf to the $SAX_HOME/conf/common/Kerberos folder, and also copy them to the $SAX_HOME/conf/thirdpartylib folder. Replace $SAX\_HOME with the path of the Gathr home directory.

Add the Gathr user to the supergroup of the HDFS user on all nodes.
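The step that places the keytabs and keytab_login.conf in the two Gathr folders could be scripted roughly as follows. This is a sketch under assumptions: `SRC_DIR` is a hypothetical variable for the directory that currently holds the files, and when `SAX_HOME` or `SRC_DIR` is unset the script falls back to scratch directories with empty placeholder files so it can be tried safely.

```shell
#!/bin/sh
set -eu
# Sketch only: stage keytabs and keytab_login.conf in both Gathr folders.
# SRC_DIR is a hypothetical name; it is not a Gathr setting.
SAX_HOME="${SAX_HOME:-$(mktemp -d)}"
if [ -z "${SRC_DIR:-}" ]; then
  # Demo mode: create empty placeholders instead of touching real keytabs.
  SRC_DIR="$(mktemp -d)"
  for f in sax.service.keytab sax.zk.keytab h2o.service.keytab keytab_login.conf; do
    : > "$SRC_DIR/$f"
  done
fi

for dest in "$SAX_HOME/conf/common/Kerberos" "$SAX_HOME/conf/thirdpartylib"; do
  mkdir -p "$dest"
  cp -f "$SRC_DIR"/*.keytab "$SRC_DIR/keytab_login.conf" "$dest/"
  # Owner read-only. Run this as the Gathr user (or chown the files to it)
  # so that only that user can read the keytabs.
  chmod 400 "$dest"/*.keytab
done

ls -l "$SAX_HOME/conf/common/Kerberos" "$SAX_HOME/conf/thirdpartylib"
```

Set `SAX_HOME` and `SRC_DIR` to real paths to run it against an actual installation.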

Restart Gathr with $SAX_HOME/startServicesServer.sh -config.reload=true

On the HBase master node, run kinit as the HBase user and grant the Gathr user read, write, and create privileges as follows:

sudo -u hbase kinit -kt /etc/security/keytabs/hbase.headless.keytab hbase

sudo -u hbase $HBASE\_HOME/bin/hbase shell

Replace $HBASE\_HOME with the path to the HBase installation folder. 'hbase' is the user through which HBase is deployed.

In the HBase shell, run grant 'sanalytix', 'RWC' where sanalytix is the Gathr user.
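If you prefer not to type the grant interactively, the HBase step above can be sketched as a script that pipes the statement into the HBase shell. The variable names (`GATHR_USER`, `HBASE_USER`, `HBASE_KEYTAB`, `DRY_RUN`) and the `run` helper are illustrative assumptions. With `DRY_RUN=1`, the default here, the script only prints what it would execute.

```shell
#!/bin/sh
set -eu
# Sketch only: non-interactive version of the HBase grant step.
# All variable names below are hypothetical, not Gathr or HBase settings.
DRY_RUN="${DRY_RUN:-1}"
GATHR_USER="${GATHR_USER:-sanalytix}"
HBASE_USER="${HBASE_USER:-hbase}"
HBASE_HOME="${HBASE_HOME:-/path/to/hbase}"
HBASE_KEYTAB="${HBASE_KEYTAB:-/etc/security/keytabs/hbase.headless.keytab}"

# R = read, W = write, C = create.
GRANT_STMT="grant '$GATHR_USER', 'RWC'"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

run sudo -u "$HBASE_USER" kinit -kt "$HBASE_KEYTAB" "$HBASE_USER"

if [ "$DRY_RUN" = "1" ]; then
  echo "+ echo \"$GRANT_STMT\" | sudo -u $HBASE_USER $HBASE_HOME/bin/hbase shell"
else
  # The HBase shell reads statements from standard input.
  echo "$GRANT_STMT" | sudo -u "$HBASE_USER" "$HBASE_HOME/bin/hbase" shell
fi
```

Set `DRY_RUN=0` and a real `HBASE_HOME` on the HBase master node to apply the grant.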

Grant cluster action permission on the Kafka cluster. Run the following command on a Kafka broker node:

sudo -u kafka $KAFKA\_HOME/bin/kafka-acls.sh -config $KAFKA\_HOME/config/server.properties -add -allowprincipals user:sanalytix -operations ALL -cluster

Restart Tomcat (follow the Restart Gathr section for the steps). The configurations mentioned in the steps below will then be reflected in the Gathr UI.

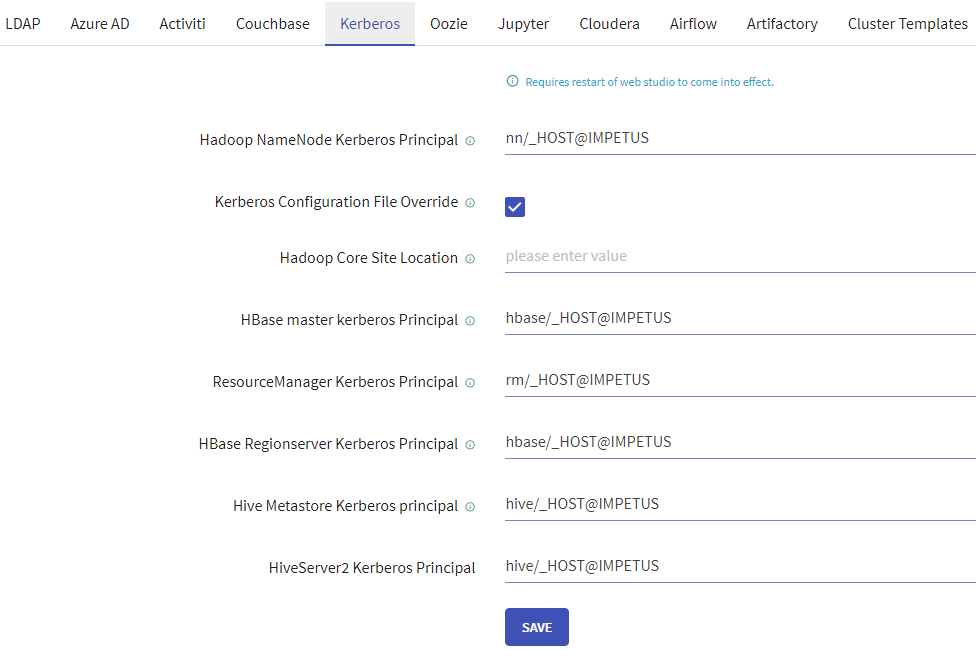

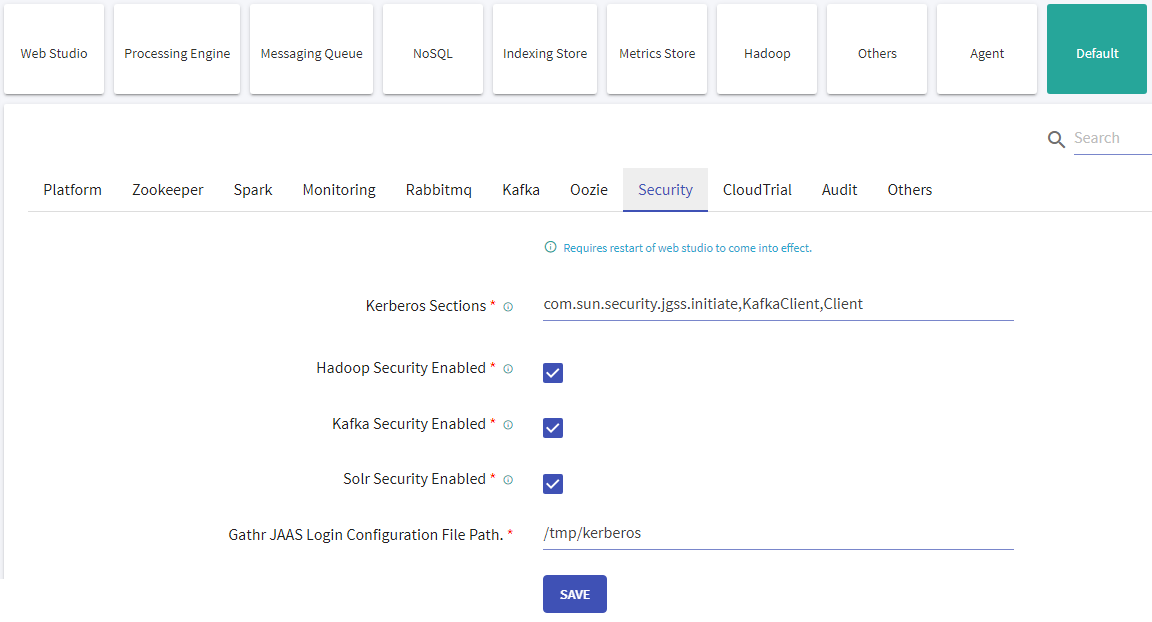

Verify the below configurations after completion of the above steps.

Update the HDFS, Hive, and HBase connections with the keytab files.

You can then create a workspace and verify the Local/Livy connection. This confirms that the environment is Kerberised.

Configuration to launch and access an H2O cluster in a Kerberised environment:

Go to the location: Gathr/conf/common/kerberos and update the below files:

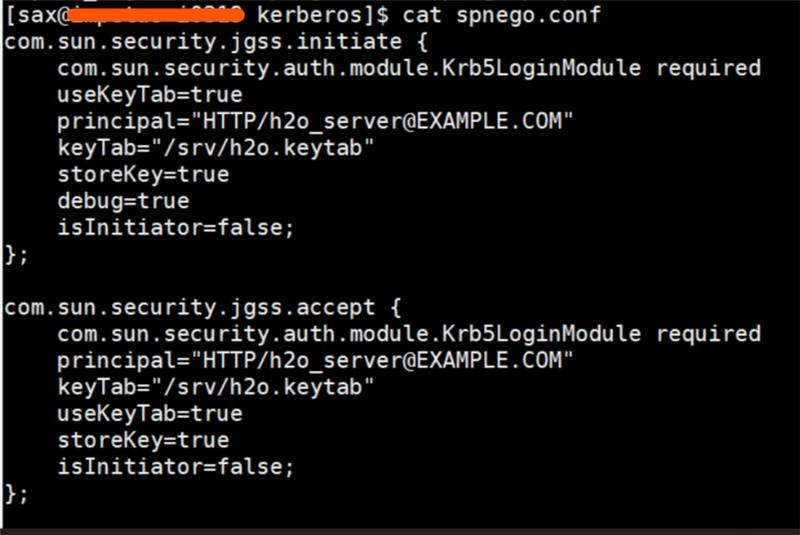

- Update spnego.conf with the appropriate HTTP service principal and keytab.

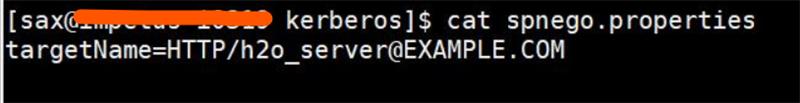

- Update targetName in spnego.properties with the HTTP service principal.
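The targetName update can be done in any editor. As a hedged sketch, the script below does it with sed, assuming spnego.properties holds a line of the form `targetName=<principal>`. That layout, the variable names `PROPS` and `HTTP_PRINCIPAL`, and the example principal are assumptions, so compare them with your actual file first. When `PROPS` is unset, the script works on a scratch file instead of a real configuration.

```shell
#!/bin/sh
set -eu
# Sketch only: rewrite the targetName entry of a properties file.
# Assumes a "targetName=<principal>" line; verify against your spnego.properties.
if [ -z "${PROPS:-}" ]; then
  # Demo mode: operate on a scratch file with a placeholder entry.
  PROPS="$(mktemp)"
  printf 'targetName=HTTP/old-host@EXAMPLE.COM\n' > "$PROPS"
fi
# Hypothetical principal; substitute your HTTP service principal.
HTTP_PRINCIPAL="${HTTP_PRINCIPAL:-HTTP/gathr-host.example.com@EXAMPLE.COM}"

# '|' is used as the sed delimiter because the principal contains '/'.
sed "s|^targetName=.*|targetName=$HTTP_PRINCIPAL|" "$PROPS" > "$PROPS.new"
mv "$PROPS.new" "$PROPS"

grep '^targetName=' "$PROPS"
```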

Go to the location: Gathr/bin/DS_scripts.

- In the shell scripts createH2OCluster.sh and h2oClusterActions.sh, update the kinit command below with a service principal and keytab that have access to HDFS services.

kinit -kt /etc/security/keytabs/sax.service.keytab sax
