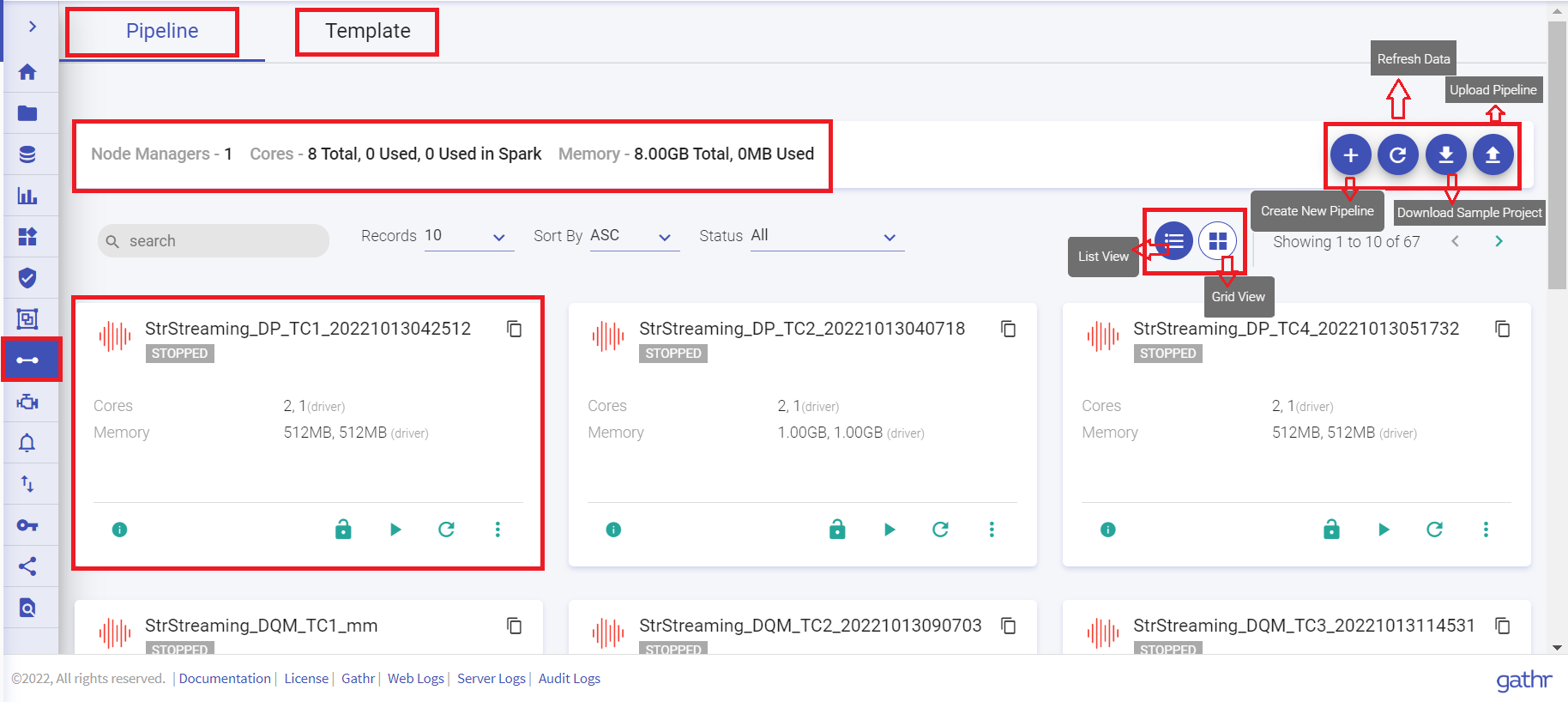

Pipeline Listing Page


If you are a new user and haven’t yet created a pipeline, then the Pipeline page displays an introductory screen. Click the Create a Pipeline button at the top right of the screen.

If you have already created pipelines, then the Pipeline listing page displays all the existing pipelines.

You can create a new pipeline, download a sample project, and upload a pipeline from your local system.

You can navigate to the Templates listing page by clicking the Template tab.

Templates are pipelines to which the user can add variables and parameters. Instances are created within the template once these values and parameters are added. To know more, see Templates Introduction →

| Name | Description |
|---|---|
| Pipeline | All the data pipelines fall under this category. |
| Template | Templates are pipelines to which the user can add variables and parameters. Instances are created within the template once these values and parameters are added. |
| Filter | Pipelines are filtered based on: (1) Cloud Vendors: All, Not Configured, Databricks, Amazon EMR; (2) Status: All, Active, Stopped, Error; (3) Cluster Status (explained below in detail): All, Long Running Cluster, New Cluster. A reset button restores the filter settings to their defaults. |
| Actions for Pipelines | Create a new pipeline, integrate pipelines, audit the pipelines, clone, download a sample project, deploy on clusters, and upload a pipeline from the same or a different workspace. |
| Databricks | When a pipeline is deployed on Databricks. |
| Amazon EMR | When a pipeline is deployed on Amazon EMR. |

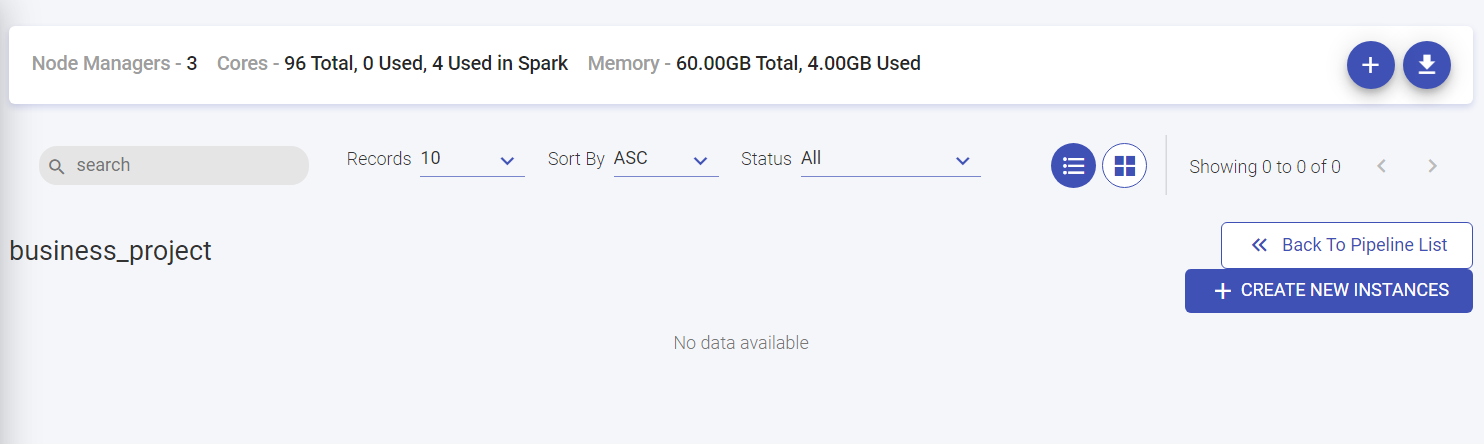

The Pipeline listing page displays all the batch and streaming data pipeline tiles. The user can create a pipeline, download a sample project, and upload a pipeline using the options available at the top right of the listing page.

For AWS, the pipelines can be deployed on Databricks and EMR.

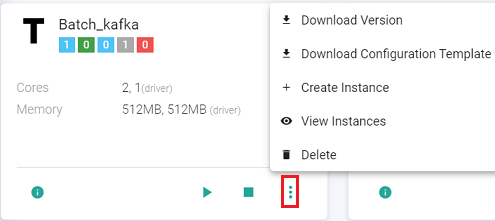

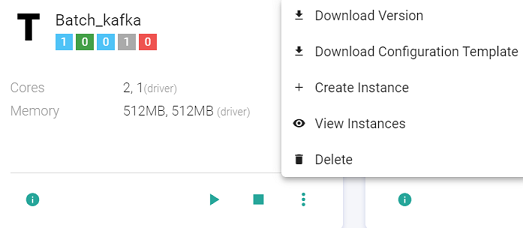

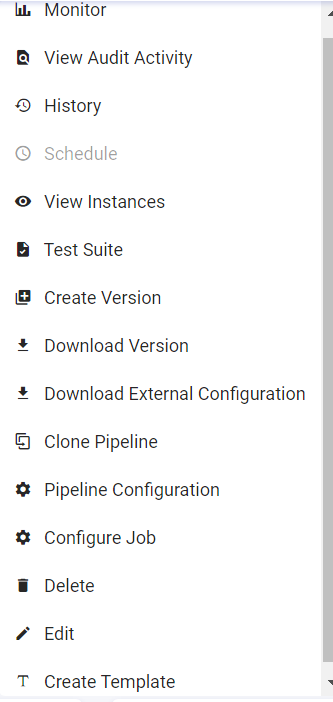

The image below shows the actions that can be performed on a pipeline by clicking the ellipsis on its tile.

Within the Data Pipeline, the user can perform the following actions:

- Design, import, and export real-time data pipelines.
- Deploy pipelines on the AWS Cloud platform.
- Deploy pipelines on either cloud vendor: Databricks or Amazon EMR.
- Drag, drop, and connect operators to create applications.
- Create Datasets and reuse them in different pipelines.
- Create Models from the analytics operators.
- Monitor detailed metrics of each task and instance.
- Run PMML-based scripts in real time on every incoming message.
- Explore real-time data using interactive charts and dashboards.

There could be a business scenario where the pipeline design remains the same for multiple pipelines, yet the configurations differ.

For example, a user has three different Kafka topics containing similar data that needs to go through the same kind of processing (Kafka → Filter → ExpressionEvaluator → HDFS). Normally, the user would create two clones of the same pipeline and edit the configuration of each.

An easier way is to create a pipeline template that holds the pipeline structure, together with multiple instances that inherit that structure and differ only in configuration.
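The relationship between a template and its instances can be sketched in a few lines of Python. This is only an illustrative model, not Gathr's implementation: the `PipelineTemplate` class, the `create_instance` method, and the `topicName` key are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field


@dataclass
class PipelineTemplate:
    """Hypothetical model: one shared structure, many per-instance configurations."""
    name: str
    structure: tuple  # ordered component names shared by every instance
    instances: dict = field(default_factory=dict)  # instance name -> configuration

    def create_instance(self, instance_name: str, config: dict) -> dict:
        # An instance stores only its own configuration; the structure is inherited.
        if instance_name in self.instances:
            raise ValueError(f"instance {instance_name!r} already exists")
        self.instances[instance_name] = dict(config)
        return {"structure": self.structure, "config": self.instances[instance_name]}


# One template for the Kafka -> Filter -> ExpressionEvaluator -> HDFS example,
# with three instances that differ only in the Kafka topic (assumed key name).
template = PipelineTemplate(
    name="kafka_to_hdfs",
    structure=("Kafka", "Filter", "ExpressionEvaluator", "HDFS"),
)
for topic in ("topic_a", "topic_b", "topic_c"):
    template.create_instance(f"instance_{topic}", {"Kafka": {"topicName": topic}})
```

The design point is that the structure is defined once on the template, so a change to the component chain does not have to be repeated across three cloned pipelines.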

Download Configuration Template

The user can use the Download Configuration Template option to download a JSON file that contains all the component configurations used in a data pipeline. This file is then used to create an instance.

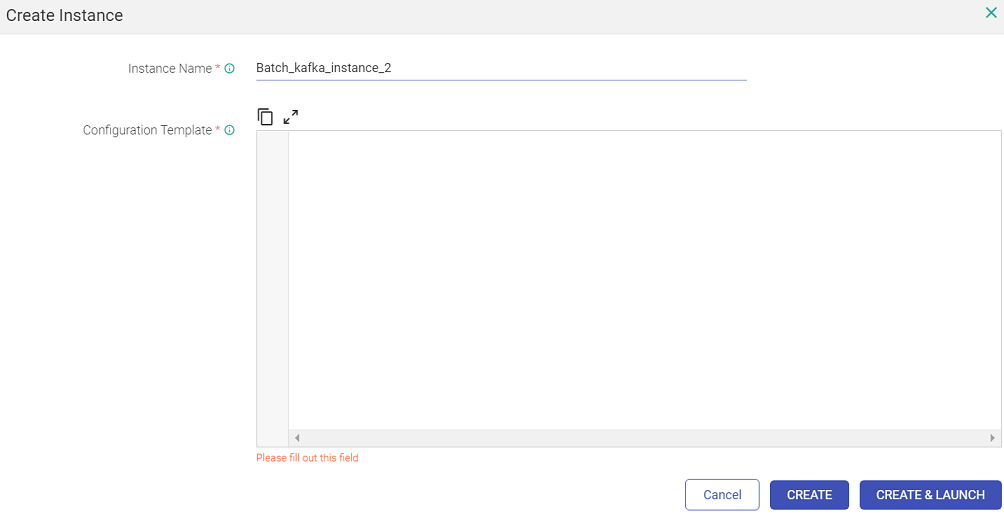

Create Instance

The user modifies the JSON file downloaded through the ‘Download Configuration Template’ option to apply the configuration changes for the first instance. For example, the user changes the topic name in the Kafka channel and saves the modified JSON file.
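The file can be edited by hand, but the same change can also be scripted. The sketch below is a minimal example under stated assumptions: the layout of the downloaded file is not documented here, so the `components`, `Kafka`, and `topicName` keys and the `set_config_value` helper are hypothetical and should be adapted to the actual file.

```python
import json


def set_config_value(config_text: str, path: list, value) -> str:
    """Return JSON text with the value at `path` replaced.

    Assumes the configuration is made of nested JSON objects (dicts).
    Raises KeyError if any key on the path is missing, so a typo in a
    key name does not silently add a new setting.
    """
    config = json.loads(config_text)
    node = config
    for key in path[:-1]:
        node = node[key]
    if path[-1] not in node:
        raise KeyError(path[-1])
    node[path[-1]] = value
    return json.dumps(config, indent=2)


# Hypothetical stand-in for the downloaded file; the real layout may differ.
downloaded = (
    '{"components": {"Kafka": {"topicName": "orders_eu"}, '
    '"HDFS": {"path": "/data/out"}}}'
)
modified = set_config_value(
    downloaded, ["components", "Kafka", "topicName"], "orders_us"
)
```

The resulting `modified` text is what would be pasted into the instance creation page; every setting other than the topic name is left unchanged.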

The user can create the first instance by clicking the ellipsis on the pipeline tile and then clicking View Instance.

Clicking ‘View Instance’ opens a page where the user can create an instance by providing an instance name and the configuration to use for the pipeline instance. Provide a name and paste the content of the modified JSON file that has the amended Kafka topic name.

Click the ‘CREATE’ button to create the instance, or optionally click ‘CREATE & LAUNCH’ to create and launch the instance.

The user can create a template on the data pipeline listing page. A template can thus have multiple running pipeline instances that use the same set of components with different configurations.

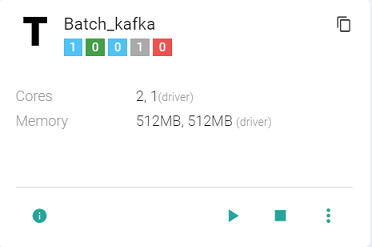

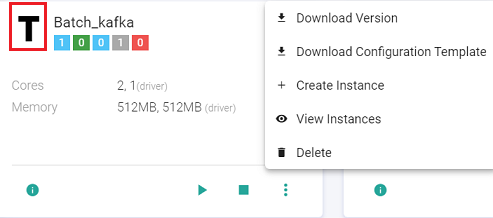

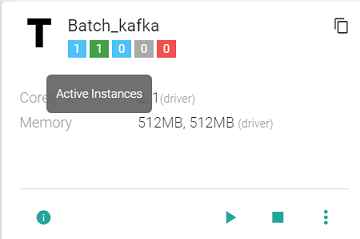

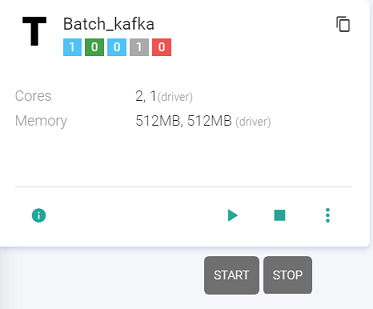

- T stands for Template.

- The Template tile shows the following details: Total Instances, Active Instances, Starting Instances, Stopped Instances, Instances in Error.

The user can create further instances and view existing instances as well:

The user can delete the instance. The user cannot delete a template if instances exist. To delete a template, all instances should be deleted first.
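The tile counts and the deletion rule can be expressed as a small sketch. The tile labels come from the text above; the lowercase status strings and the function names `tile_summary` and `can_delete_template` are assumptions made for illustration only.

```python
from collections import Counter

# Tile labels are taken from the text; the status strings are assumed.
TILE_LABELS = {
    "active": "Active Instances",
    "starting": "Starting Instances",
    "stopped": "Stopped Instances",
    "error": "Instances in Error",
}


def tile_summary(instance_statuses: dict) -> dict:
    """Count instances per status, keyed by the labels shown on the tile."""
    counts = Counter(instance_statuses.values())
    summary = {"Total Instances": len(instance_statuses)}
    for status, label in TILE_LABELS.items():
        summary[label] = counts[status]  # Counter yields 0 for absent statuses
    return summary


def can_delete_template(instance_statuses: dict) -> bool:
    """A template can be deleted only when it has no instances left."""
    return not instance_statuses


# Hypothetical instances of one template, mapped to their current status.
instances = {"orders_eu": "active", "orders_us": "stopped", "orders_apac": "active"}
summary = tile_summary(instances)
```

Note that the deletion check depends on whether instances exist at all, not on their status: a template whose instances are all stopped still cannot be deleted.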

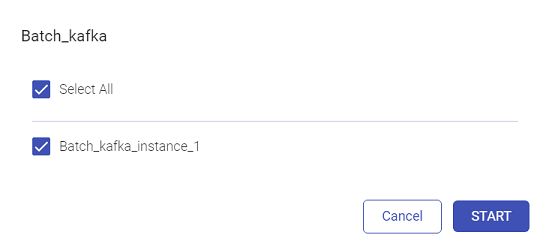

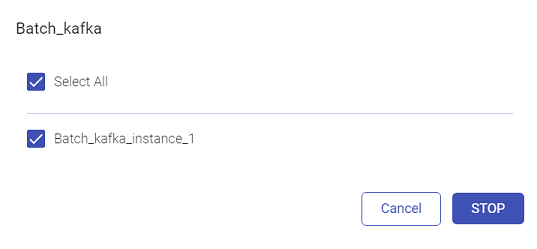

Start/Stop Template

The user can start or stop a template by clicking the Start/Stop button on the template tile. All instances must be deleted before the template itself can be deleted.

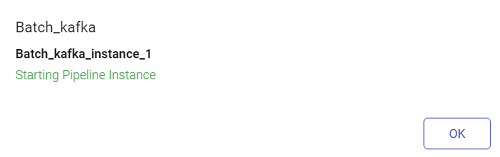

Once the user selects Start, the user will be required to select the instance(s):

Click Start.

Likewise, to stop the instances, click Stop.
