Others

Note: Some of the properties listed here are not supported in the Multi-Cloud version of Gathr. These properties are marked with **.

This section covers the remaining miscellaneous configuration properties of the Web Studio. The category is further divided into the sub-categories below.

LDAP

| Field | Description |

|---|---|

| Password | Password against which the user will be authenticated in LDAP Server. |

| Group Search Base | Defines the part of the directory tree under which group searches will be performed. |

| User Search Base | Defines the part of the directory tree under which DN searches will be performed. |

| User Search Filter | The filter which will be used to search DN within the User Search Base defined above. |

| Group Search Filter | The filter used to search for group membership. The default is member={0}, corresponding to the groupOfMembers LDAP class. In this case, the substituted parameter is the full distinguished name of the user. The parameter {1} can be used if you want to filter on the login name. |

| Admin Group Name | LDAP group name which maps to application’s Admin role. |

| Developer Group Name | LDAP group name which maps to application’s Developer role. |

| Devops Group Name | LDAP group name which maps to application’s Devops role. |

| Tier-II Group Name | LDAP group name which maps to application’s Tier-II role. |

| LDAP Connection URL | The URL of the LDAP server: a string that encapsulates the address and port of a directory server. For example: ldap://host:port. |

| User Distinguished Name | A unique name which is used to find the user in LDAP Server. |
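
The placeholder substitution described for Group Search Filter can be illustrated with a minimal Python sketch. It is not Gathr's implementation: the function name, the `memberUid` attribute, and the sample DN are assumptions chosen only to show how {0} and {1} are filled in.

```python
def render_group_filter(template: str, user_dn: str, login_name: str) -> str:
    """Fill an LDAP group search filter template.

    {0} is replaced with the user's full distinguished name,
    {1} is replaced with the login name.
    """
    return template.replace("{0}", user_dn).replace("{1}", login_name)


# Hypothetical values, for illustration only.
user_dn = "uid=jdoe,ou=people,dc=example,dc=com"
login = "jdoe"

# Default filter: matches groups whose "member" attribute holds the user's DN.
default_filter = render_group_filter("member={0}", user_dn, login)

# Alternative filter on the login name (memberUid is an assumed attribute).
login_filter = render_group_filter("memberUid={1}", user_dn, login)

print(default_filter)
print(login_filter)
```

In a real deployment the substituted values should also be escaped for LDAP filter syntax before the search is issued; the sketch omits this for brevity.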

Activiti

| Field | Description |

|---|---|

| Alert Email Character Set | The character set used for sending emails. |

| Alert Sender Email | The email address from which mails must be sent. |

| JDBC Driver Class | The database driver used for the Activiti setup. |

| JDBC URL | The URL of the Activiti database. |

| JDBC User | The database user name. |

| JDBC Password | The database password. |

| Host | The email server host from which emails will be sent. |

| Port | The email server port. |

| User | The email ID from which emails will be sent. |

| Password | The password of the email account from which emails will be sent. |

| Default Sender Email | The default email address from which mails will be sent if you do not provide one in the UI. |

| Enable SSL | Whether SSL (Secure Sockets Layer) is enabled to establish an encrypted link between server and client. |

| Enable TLS | Whether Transport Layer Security (TLS) is enabled. TLS encrypts messages exchanged between hosts that support it and can also allow one host to verify the identity of another. |

| History | Whether Activiti history is needed. |

| Database | The database used for the Activiti setup. |
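
To make the relationship between Alert Email Character Set, Alert Sender Email and Default Sender Email concrete, here is a small Python sketch using the standard `email` package. It only builds a message in memory; the function name, addresses and subject are hypothetical, and it does not claim to reflect how Gathr or Activiti sends mail.

```python
from email.message import EmailMessage
from typing import Optional


def build_alert_email(body: str, recipient: str, charset: str,
                      default_sender: str,
                      sender: Optional[str] = None) -> EmailMessage:
    """Build an alert email, falling back to the default sender address."""
    msg = EmailMessage()
    # Use the explicitly provided sender, otherwise the configured default.
    msg["From"] = sender or default_sender
    msg["To"] = recipient
    msg["Subject"] = "Pipeline alert"  # placeholder subject
    # The character set setting controls how the body is encoded.
    msg.set_content(body, charset=charset)
    return msg


# No sender supplied: the default sender address is used.
fallback_msg = build_alert_email(
    "Threshold exceeded.", "ops@example.com", "utf-8", "noreply@example.com"
)

# Sender supplied: it takes precedence over the default.
explicit_msg = build_alert_email(
    "Threshold exceeded.", "ops@example.com", "utf-8",
    "noreply@example.com", sender="alerts@example.com",
)
```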

Couchbase

| Field | Description |

|---|---|

| Max Pool Size ** | The Couchbase Max Pool Size. |

| Default Bucket Memory Size ** | The memory size of default bucket in Couchbase. |

| Password ** | The Couchbase password. |

| Default Bucket Replica No ** | The Couchbase default bucket replication number. |

| Host Port ** | The port number of Couchbase. |

| Host Name ** | The host on which Couchbase is running. |

| HTTP URL ** | The Couchbase http URL. |

| Bucket List ** | The Couchbase bucket list. |

| Polling timeout ** | The polling timeout of Couchbase. |

| Polling sleeptime ** | The sleep time between each polling. |

| User Name ** | The username of the Couchbase user. |
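
The two polling settings are easiest to understand together. The sketch below is a generic Python polling loop, not Couchbase or Gathr code: it assumes that the polling timeout is the total time allowed and that the sleep time is the pause between attempts. The function and parameter names are hypothetical.

```python
import time
from typing import Callable


def poll_until(check: Callable[[], bool], timeout_s: float, sleep_s: float) -> bool:
    """Call check() repeatedly until it returns True or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        if check():
            return True
        remaining = deadline - time.monotonic()
        if remaining <= 0:
            return False
        # Sleep between attempts, but never past the overall deadline.
        time.sleep(min(sleep_s, remaining))


# Example: a check that succeeds on its third call.
attempts = {"count": 0}


def ready_on_third_call() -> bool:
    attempts["count"] += 1
    return attempts["count"] >= 3


succeeded = poll_until(ready_on_third_call, timeout_s=1.0, sleep_s=0.01)
```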

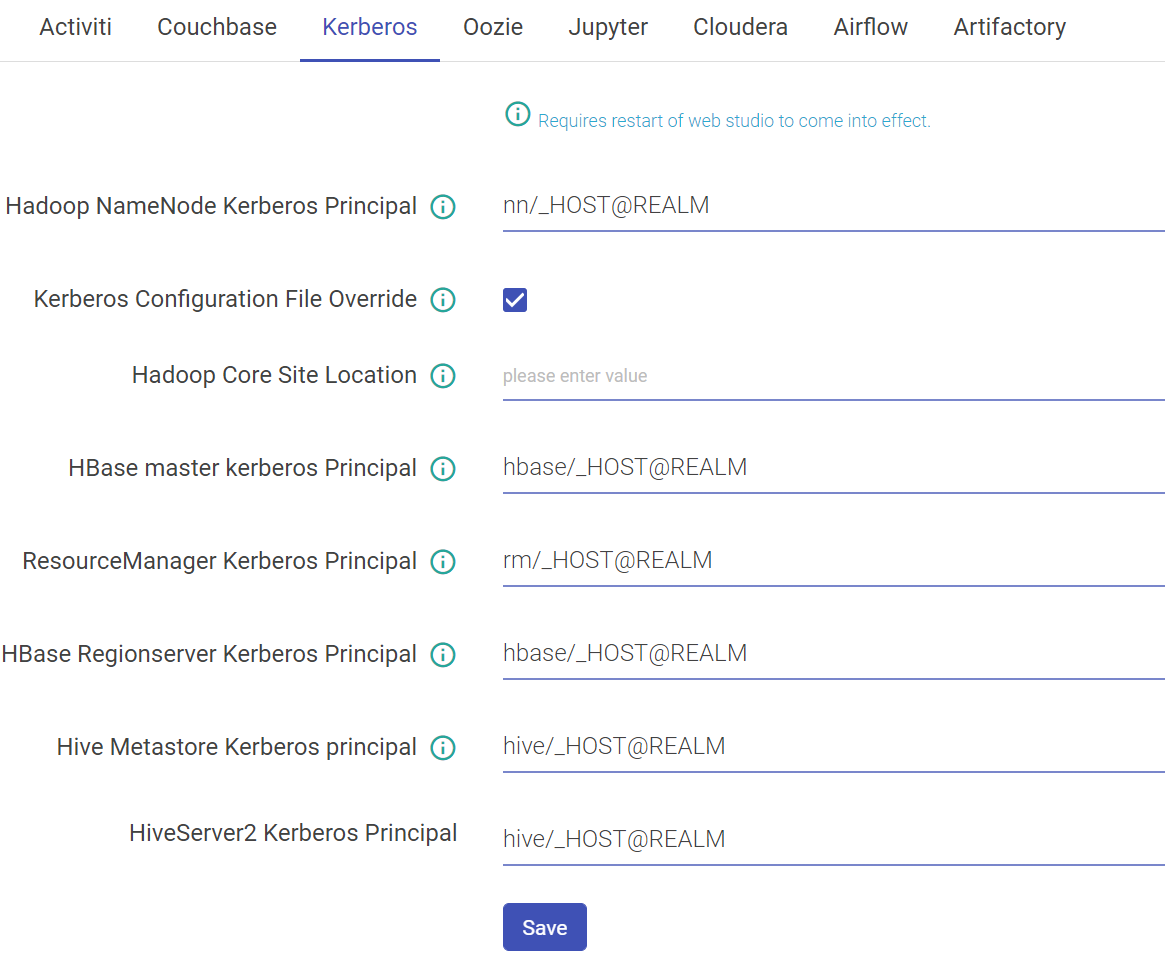

Kerberos

| Field | Description |

|---|---|

| Hadoop NameNode Kerberos Principal | Service principal of name node. |

| Kerberos Configuration File Override | Set to true if you want the keytab_login.conf file to be (re)created for every running pipeline when Kerberos security is enabled. |

| Hadoop Core Site Location | Use this property when connecting to HDFS across two different realms. It specifies the path of the Hadoop core-site.xml file containing the rules for cross-realm communication. |

| Hbase Master Kerberos Principal | Service principal of HBase master. |

| ResourceManager Kerberos Principal | Service principal of the resource manager. |

| Hbase Regionserver Kerberos Principal | Service principal of region server. |

| Hive Metastore Kerberos principal | Service principal of Hive metastore. |

| HiveServer2 Kerberos Principal | Service principal of HiveServer2. |
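
Kerberos service principals conventionally follow the form `primary/instance@REALM`. As a hedged illustration, the Python sketch below splits such a string into its parts so that a value can be sanity-checked before it is entered in the fields above. The principal values are made-up examples, and the helper is hypothetical rather than part of Gathr.

```python
from typing import NamedTuple, Optional


class Principal(NamedTuple):
    primary: str             # service or user name, e.g. "nn"
    instance: Optional[str]  # usually the host name; None if absent
    realm: str               # Kerberos realm, e.g. "EXAMPLE.COM"


def parse_principal(value: str) -> Principal:
    """Split 'primary[/instance]@REALM' into its components."""
    name, sep, realm = value.rpartition("@")
    if not sep or not name or not realm:
        raise ValueError(f"expected primary[/instance]@REALM, got {value!r}")
    primary, _, instance = name.partition("/")
    return Principal(primary, instance or None, realm)


# Hypothetical principals, for illustration only.
namenode = parse_principal("nn/namenode.example.com@EXAMPLE.COM")
hive_user = parse_principal("hive@EXAMPLE.COM")
```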

Configuring Kerberos

You can add extra Java options for any Spark Superuser pipeline in the following way:

Log in as Superuser, click Data Pipeline, and edit any pipeline.

Kafka

HDFS

HBase

Solr

Zookeeper

Configure Kerberos

Once Kerberos is enabled, go to Superuser UI > Configuration > Environment > Kerberos to configure Kerberos.

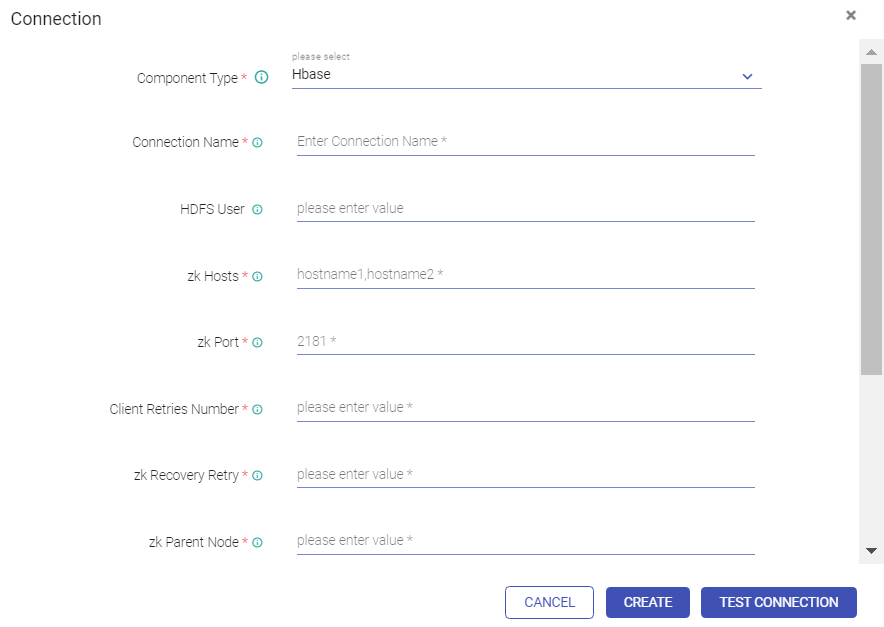

Configure Kerberos in Components

Go to Superuser UI > Connections and edit the component connection settings as explained below:

HBase, HDFS

| Field | Description |

|---|---|

| Key Tab Select Option | A keytab is a file containing pairs of Kerberos principals and encrypted keys. You can use a keytab to authenticate to various remote systems. Two options are available: Specify Keytab File Path (the path where the keytab file is stored) and Upload Keytab File (upload the keytab file from your local file system). |

| Specify Keytab File Path | If the selected option is Specify Keytab File Path, the system displays the KeyTab File Path field, where you specify the keytab file location. |

| Upload Keytab File | If the selected option is Upload Keytab File, the system displays the Upload Keytab File field, which enables you to upload the keytab file. |

By default, Kerberos security is configured for these components: Solr, Kafka and Zookeeper. No manual configuration is required.

Jupyter

| Field | Description |

|---|---|

| jupyter.hdfs.port | HDFS Http port. |

| jupyter.hdfs.dir | HDFS location where uploaded data will be saved. |

| jupyter.dir | Location where notebooks will be created. |

| jupyter.notebook.service.port | Port on which Auto create Notebook service is running. |

| jupyter.hdfs.connection.name | Name of the HDFS connection used to connect to HDFS (from the Gathr connection tab). |

| jupyter.url | URL contains IP address and port where Jupyter services are running. |
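
As a rough illustration of how these properties fit together, the Python sketch below parses a key=value snippet and reports any Jupyter property that is missing. The key=value layout and every value shown are assumptions made for the example; only the property names come from the table above.

```python
REQUIRED_KEYS = (
    "jupyter.hdfs.port",
    "jupyter.hdfs.dir",
    "jupyter.dir",
    "jupyter.notebook.service.port",
    "jupyter.hdfs.connection.name",
    "jupyter.url",
)

# Placeholder values only; replace them with those of your environment.
SAMPLE = """
# Jupyter settings (hypothetical values)
jupyter.hdfs.port=50070
jupyter.hdfs.dir=/user/gathr/jupyter/uploads
jupyter.dir=/home/gathr/notebooks
jupyter.notebook.service.port=5000
jupyter.hdfs.connection.name=HDFS_Default
jupyter.url=http://jupyter.example.com:8888
"""


def parse_properties(text: str) -> dict:
    """Parse simple key=value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def missing_keys(props: dict) -> list:
    """Return the required Jupyter keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not props.get(key)]


props = parse_properties(SAMPLE)
print(missing_keys(props))
```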

Cloudera

| Property | Description |

|---|---|

| Navigator URL | The Cloudera Navigator URL. |

| Navigator API Version | The Cloudera Navigator API version used. |

| Navigator Admin User | The Cloudera Navigator admin user. |

| Navigator User Password | The Cloudera Navigator admin user password. |

| Autocommit Enabled | Specifies whether auto-commit of entities is required. |

Airflow

| Property | Description |

|---|---|

| Enable AWS MWAA | Option to enable AWS Managed Airflow for Gathr. It is disabled by default. |

If Enable AWS MWAA is checked, the following additional fields are displayed:

| Property | Description |

|---|---|

| Region | The AWS region where the MWAA environment is created. |

| Provider Type | Option to choose the AWS credentials provider type. The available options in the drop-down list are: None, AWS Keys and Instance Profile. |

| AWS Access Key ID | The AWS account access key ID used for authentication. Required if Provider Type is set to AWS Keys. |

| AWS Secret Access Key | The AWS account secret access key for the Access Key ID specified above. |

| Environment Name | The exact name of the AWS environment to be integrated with Gathr. |

| Gathr Service URL | The default URL where the Gathr application is installed. Update this value whenever the Gathr base URL is modified. |

| DAG Bucket Name | The exact name of the DAG bucket configured in the environment specified above. |

| DAG Path | The DAG path within the bucket specified above. |
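
The dependency between Provider Type and the two AWS key fields can be sketched as a small validation routine. This Python example is illustrative only: it assumes the settings are available as a dictionary keyed by field label, and it treats Region, Environment Name, DAG Bucket Name and DAG Path as mandatory, which is an assumption rather than documented behavior.

```python
PROVIDER_TYPES = ("None", "AWS Keys", "Instance Profile")


def validate_mwaa_settings(settings: dict) -> list:
    """Return a list of problems found in the MWAA settings (empty if none)."""
    errors = []
    # Assumed to be mandatory whenever MWAA is enabled.
    for field in ("Region", "Environment Name", "DAG Bucket Name", "DAG Path"):
        if not settings.get(field):
            errors.append(f"{field} is required")
    provider = settings.get("Provider Type")
    if provider not in PROVIDER_TYPES:
        errors.append(f"Provider Type must be one of {PROVIDER_TYPES}")
    elif provider == "AWS Keys":
        # The key pair is only needed for the AWS Keys provider type.
        for field in ("AWS Access Key ID", "AWS Secret Access Key"):
            if not settings.get(field):
                errors.append(f"{field} is required when Provider Type is AWS Keys")
    return errors


# Hypothetical settings, for illustration only.
instance_profile_settings = {
    "Region": "us-east-1",
    "Provider Type": "Instance Profile",
    "Environment Name": "example-mwaa-env",
    "DAG Bucket Name": "example-dag-bucket",
    "DAG Path": "dags",
}
keys_without_secret = dict(instance_profile_settings, **{"Provider Type": "AWS Keys"})

print(validate_mwaa_settings(instance_profile_settings))
print(validate_mwaa_settings(keys_without_secret))
```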

If Enable AWS MWAA is unchecked, the following fields are displayed:

| Property | Description |

|---|---|

| Airflow Server Token Name | The key used to authenticate a request. It should be the same as the value given in section Plugin Installation > Authentication for property ‘sax_request_http_token_name’. |

| Airflow Server Token Required | Check if the token is required. |

| Airflow Server Token Value | The HTTP token used to authenticate a request. It should be the same as the value given in section Plugin Installation > Authentication for property ‘sax_request_http_token_value’. |

| Airflow Server URL | The URL used to connect to the Airflow server. |
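
The three token fields work as a unit: when the token is required, the token name and value are attached to each request. The Python sketch below assumes the pair is sent as an HTTP header, which the source does not state explicitly; the function name and sample values are hypothetical.

```python
def build_auth_headers(token_required: bool, token_name: str, token_value: str) -> dict:
    """Return the HTTP headers needed to authenticate a request to Airflow."""
    if not token_required:
        # No token configured: send the request without extra headers.
        return {}
    if not token_name or not token_value:
        raise ValueError("token name and value must both be set when a token is required")
    # Assumption: the token name is the header name and the token value its content.
    return {token_name: token_value}


# Hypothetical values; they must match the plugin's
# sax_request_http_token_name and sax_request_http_token_value settings.
headers_with_token = build_auth_headers(True, "example-token-name", "example-token-value")
headers_without_token = build_auth_headers(False, "", "")
```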
