Manage Google Cloud Dataproc Clusters

GCP Dataproc services are used to manage Dataproc cluster(s) from the Gathr application.

The Cluster List View is a feature in Gathr where superusers and workspace users can manage Google Dataproc clusters.

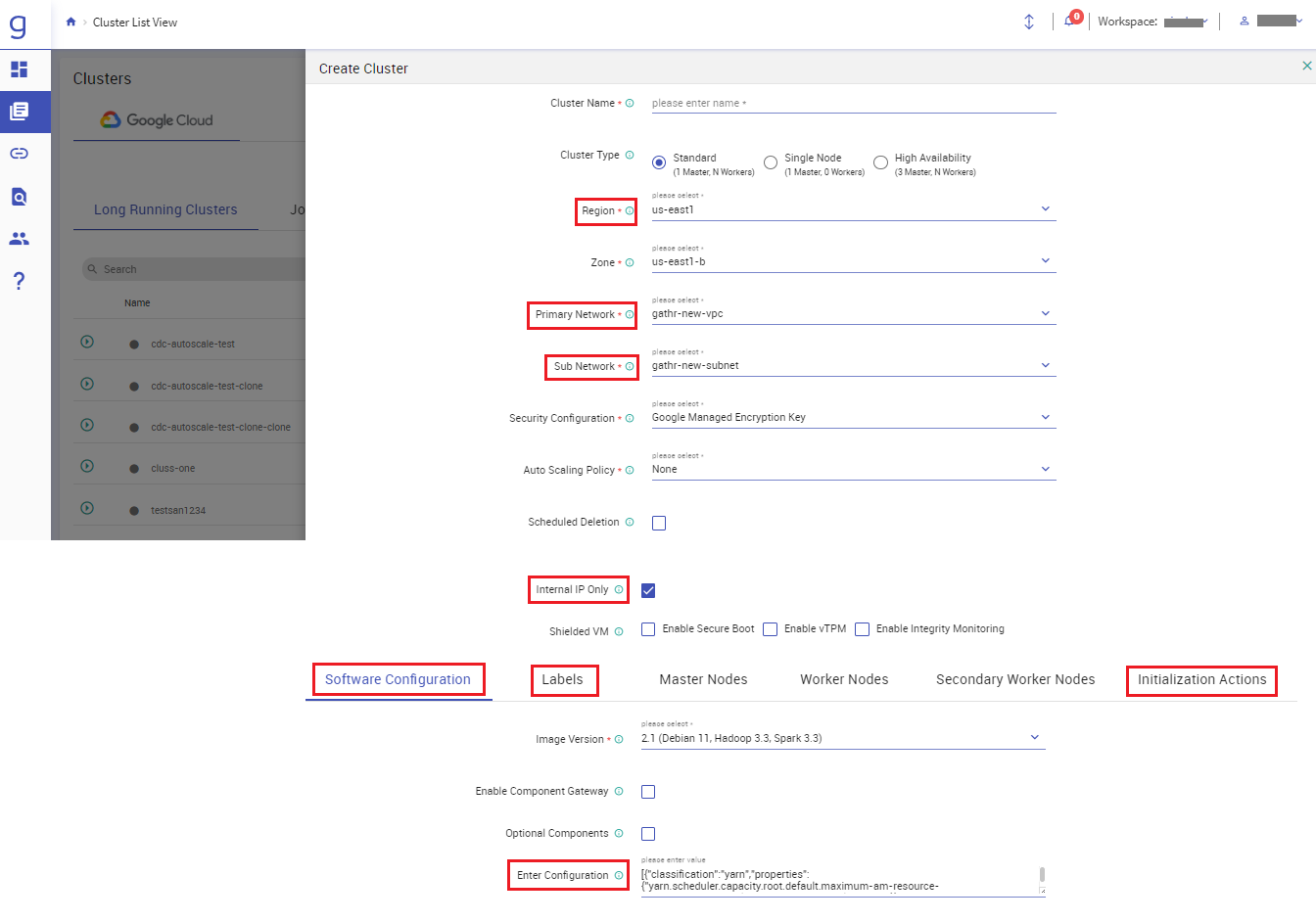

Create Cluster

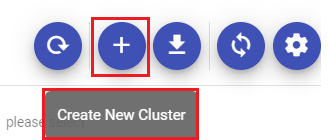

From the main menu, navigate to the Cluster List View page.

To create a cluster, click the Create New Cluster option.

The Create Cluster window provides the following options:

| Field | Description |

|---|---|

| Cluster Name | Option to provide a unique name for the cluster. |

| Cluster Type | Option to choose the cluster type: Standard (1 Master, N Workers), Single Node Cluster (1 Master, 0 Workers), or High Availability (3 Masters, N Workers). |

| Region | Option to select the Cloud Dataproc regional service, determining the zones and resources that are available. Example: us-east4 |

| Zone | Option to select the zone whose computing resources the cluster uses and where its data is stored. Example: us-east4-a. When the ‘Any’ zone option is selected from the drop-down list, Auto Zone chooses the zone for the cluster. Auto Zone prioritizes zones that hold resource reservations, ranking them by the total CPU core (vCPU) reservations in each zone. If the requested cluster resources can be fully satisfied by the reserved resources plus, if required, the on-demand resources in a zone, Auto Zone consumes those resources and creates the cluster in that zone. Example: A cluster creation request specifies 20 n2-standard-2 and 1 n2-standard-64 (40 + 64 vCPUs requested). Auto Zone prioritizes the following zones according to their total vCPU reservations: zone-c with 3 n2-standard-2 and 1 n2-standard-64 reserved (70 vCPUs), zone-b with 1 n2-standard-64 reserved (64 vCPUs), and zone-a with 25 n2-standard-2 reserved (50 vCPUs). Assuming each of these zones has additional on-demand vCPUs and other resources sufficient to satisfy the cluster request, Auto Zone selects zone-c for cluster creation. If the requested cluster resources cannot be fully satisfied by reserved plus on-demand resources in any zone, Auto Zone creates the cluster in the zone that is most likely to satisfy the request using on-demand resources. |

| Primary Network | Option to select the default network or any VPC network created in this project for the cluster. |

| Sub Network | Includes the subnetworks available in the Compute Engine region that you have selected for this cluster. |

| Security Configuration | Provide Security Configuration for the cluster. |

| Auto Scaling Policy | Option to automate cluster resource management based on the auto scaling policy. |

| Scheduled Deletion | Option to schedule deletion of the cluster. You can delete the cluster at a fixed time, or after it has been idle with no submitted jobs for a set period. |

| Internal IP Only | Configure all instances to have only internal IP addresses. |

| Shielded VM | Turn on all the settings for the most secure configuration. Available options are: Enable Secure Boot, Enable vTPM, Enable Integrity Monitoring. |
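The Auto Zone example in the Zone field can be worked through with a short script. This is only a sketch of the ranking step described there (ordering zones by total reserved vCPUs), not Google's actual placement logic. The names `VCPUS`, `reservations`, `reserved_vcpus`, and `rank_zones` are hypothetical, and the check for on-demand capacity is left out.

```python
# vCPUs per machine type used in the example (n2-standard-N has N vCPUs).
VCPUS = {"n2-standard-2": 2, "n2-standard-64": 64}

# Reserved instances per zone, copied from the example in the Zone field.
reservations = {
    "zone-a": {"n2-standard-2": 25},
    "zone-b": {"n2-standard-64": 1},
    "zone-c": {"n2-standard-2": 3, "n2-standard-64": 1},
}


def reserved_vcpus(reserved):
    """Total vCPUs held by a zone's reservations."""
    return sum(VCPUS[machine] * count for machine, count in reserved.items())


def rank_zones(zones):
    """Order zone names from most to fewest reserved vCPUs."""
    return sorted(zones, key=lambda z: reserved_vcpus(zones[z]), reverse=True)


ranking = rank_zones(reservations)
for zone in ranking:
    print(zone, reserved_vcpus(reservations[zone]))
```

With the figures from the example, zone-c (70 vCPUs) ranks ahead of zone-b (64) and zone-a (50), which matches the zone the example says Auto Zone selects.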

Other configuration options available are explained below:

Software Configuration

| Field | Description |

|---|---|

| Image Version | Cloud Dataproc uses versioned images to bundle the operating system, big data components and Google Cloud Platform connectors into one package that is deployed on your cluster. |

| Enable Component Gateway | Option to provide access to web interfaces of default & selected optional components on the cluster. |

| Optional Components | Select additional component(s). |

| Enter Configuration | Option to provide cluster properties. The existing properties can also be modified. |

Labels

| Field | Description |

|---|---|

| Add Label | Option to add labels. |

Master Nodes

| Field | Description |

|---|---|

| Machine Types | Select the GCP machine type for the master node. Available options are: Compute Optimized, Memory Optimized, Accelerator Optimized, General Purpose. |

| Series | Select series for your Master Node. |

| Instance Type | The maximum number of nodes is determined by your quota and the number of SSDs attached to each node. |

| Primary Disk | The primary disk contains the boot volume, system libraries, and HDFS NameNode metadata. |

| Local SSD | Each Solid State Disk provides 375 GB of fast local storage. If one or more SSDs are attached, the HDFS data blocks and local execution directories are spread across these disks. HDFS does not run on preemptible nodes. |

Worker Nodes

| Field | Description |

|---|---|

| Machine Types | Select the GCP machine type for the worker node. Available options are: Compute Optimized, Memory Optimized, Accelerator Optimized, General Purpose. |

| Series | Select series for your Worker Node. |

| Instance Type | The maximum number of nodes is determined by your quota and the number of SSDs attached to each node. |

| Primary Disk | The primary disk contains the boot volume, system libraries, and HDFS NameNode metadata. |

| Local SSD | Each Solid State Disk provides 375 GB of fast local storage. If one or more SSDs are attached, the HDFS data blocks and local execution directories are spread across these disks. HDFS does not run on preemptible nodes. |

Secondary Worker Nodes

| Field | Description |

|---|---|

| Instance Count | The maximum number of nodes is determined by your quota and the number of SSDs attached to each node. |

| Preemptibility | Spot and preemptible VMs cost less, but can be terminated at any time due to system demands. |

| Primary Disk | The primary disk contains the boot volume, system libraries, and HDFS NameNode metadata. |

| Local SSD | Each Solid State Disk provides 375 GB of fast local storage. If one or more SSDs are attached, the HDFS data blocks and local execution directories are spread across these disks. HDFS does not run on preemptible nodes. |

Initialization Actions

| Field | Description |

|---|---|

| GCS File Path | Provide the GCS file path. |

The fields below are stored with their default values in the Gathr metastore and are auto-populated when you create a cluster in Gathr.

- Region

- Primary Network

- Sub Network

- Internal IP Only

- Enter Configuration

- Initialization Actions

- Labels

You can update these default values to suit your requirements, either manually from the Gathr UI or with an update query.

Modify the query below as required to update the default fields:

UPDATE gcp_cluster_default_config set default_config_json = '{"internalIpOnly":"","subnetworkUri":"","region":"","executableFile":"","properties":[{"classification":"yarn","properties":{"yarn.scheduler.capacity.root.default.maximum-am-resource-percent":"0.50","yarn.log-aggregation.enabled":"true"}},{"classification":"dataproc","properties":{"dataproc.scheduler.max-concurrent-jobs":"5","dataproc.logging.stackdriver.enable":"true","dataproc.logging.stackdriver.job.driver.enable":"true","dataproc.logging.stackdriver.job.yarn.container.enable":"true","dataproc.conscrypt.provider.enable":"false"}},{"classification":"spark","properties":{"spark.yarn.preserve.staging.files":"false","spark.eventLog.enabled":"false"}}],"networkUri":"","labels":{}}'
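Hand-editing a long single-line JSON string is error-prone, so one option is to build the value from a dictionary and generate the statement from it. The sketch below reuses the table name, column name, and keys from the query above. The helper `build_update_query` is hypothetical, and the region value is taken from the us-east4 example used elsewhere on this page. How you run the resulting statement against the Gathr metastore depends on your database and is not shown.

```python
import json

# Default configuration with the same keys as the query above.
default_config = {
    "internalIpOnly": "",
    "subnetworkUri": "",
    "region": "",
    "executableFile": "",
    "properties": [
        {
            "classification": "yarn",
            "properties": {
                "yarn.scheduler.capacity.root.default.maximum-am-resource-percent": "0.50",
                "yarn.log-aggregation.enabled": "true",
            },
        },
        {
            "classification": "dataproc",
            "properties": {
                "dataproc.scheduler.max-concurrent-jobs": "5",
                "dataproc.logging.stackdriver.enable": "true",
                "dataproc.logging.stackdriver.job.driver.enable": "true",
                "dataproc.logging.stackdriver.job.yarn.container.enable": "true",
                "dataproc.conscrypt.provider.enable": "false",
            },
        },
        {
            "classification": "spark",
            "properties": {
                "spark.yarn.preserve.staging.files": "false",
                "spark.eventLog.enabled": "false",
            },
        },
    ],
    "networkUri": "",
    "labels": {},
}


def build_update_query(config):
    """Serialize the config to compact JSON and embed it in the UPDATE statement."""
    payload = json.dumps(config, separators=(",", ":"))
    # Double any single quotes so the JSON stays a valid SQL string literal.
    escaped = payload.replace("'", "''")
    return "UPDATE gcp_cluster_default_config SET default_config_json = '" + escaped + "'"


# Example: set only the default region and leave every other default unchanged.
config = dict(default_config, region="us-east4")
query = build_update_query(config)
print(query)
```

Generating the JSON with `json.dumps` guarantees that the stored value parses, which is easy to get wrong when editing the string by hand.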

Clicking the SAVE button saves the cluster in the database but does not launch it.

Click the SAVE AND CREATE button to save the cluster in the database and create it on Dataproc.

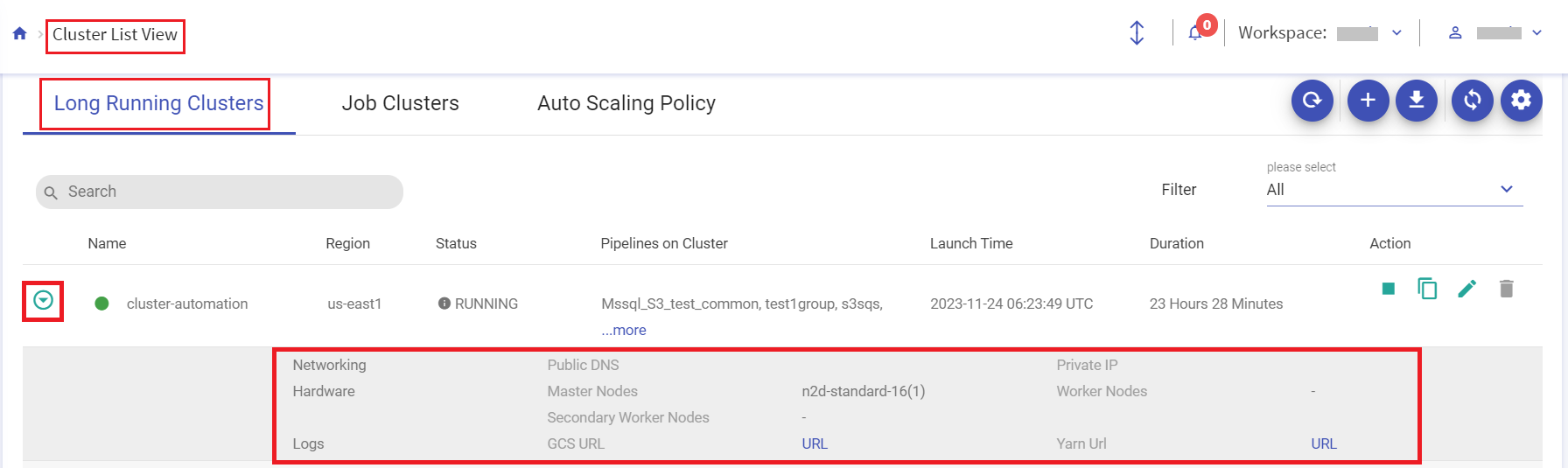

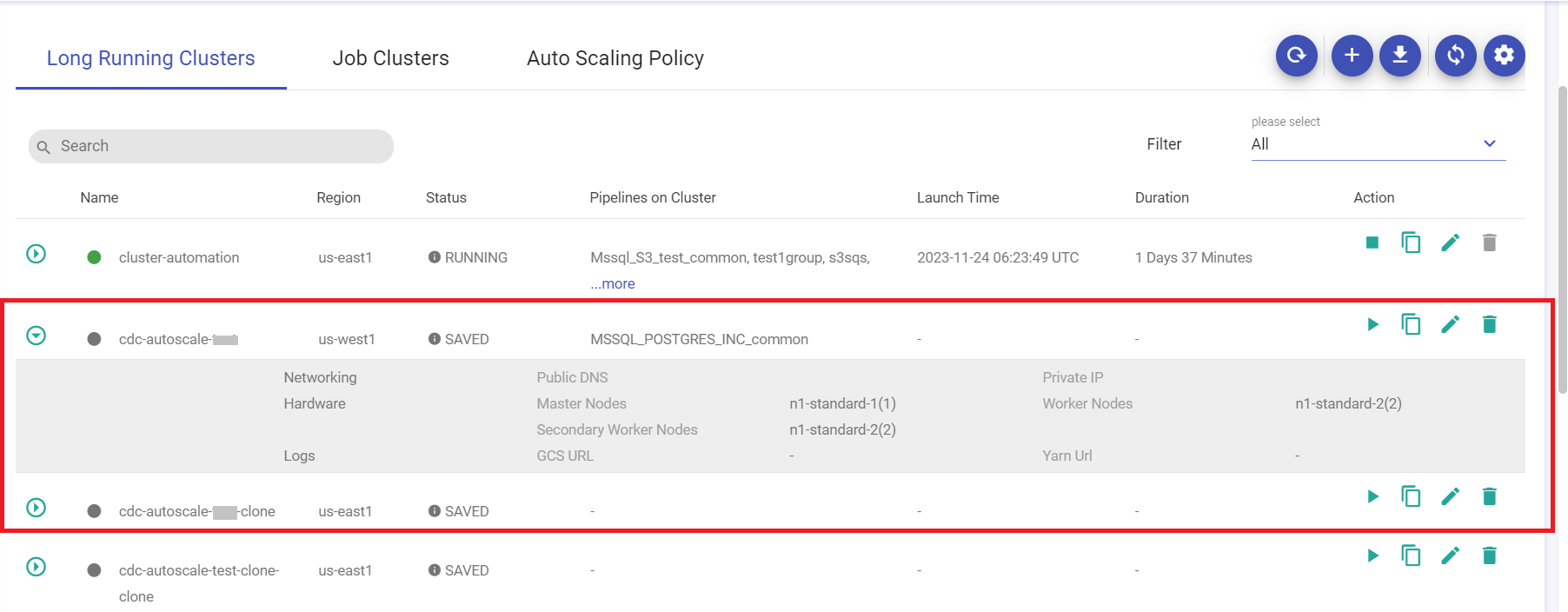

Cluster(s) Listing page

The Long Running Clusters tab on the listing page shows details for all long-running clusters.

To view the details of a cluster, click the expand button available on the listing page.

The following details are shown for the cluster:

| Field | Description |

|---|---|

| Networking | The network details of the cluster are available here. Example: Private IP. |

| Hardware | Master nodes shows the type of node that you have chosen for your master. For example, in n2d-standard-16(1), n2d-standard specifies the machine type, 16 denotes the number of CPUs, and 1 denotes the number of nodes. If no Worker node details are shown, the cluster is a single node cluster. |

| Logs | The GCS URL opens the GCS bucket folder where all the logs of the pipeline are stored. The YARN URL gives access to YARN while the cluster is running. |

Other cluster details include:

| Field | Description |

|---|---|

| Name | Name of the cluster. |

| Region | Allotted region of the cluster. |

| Status | Current status of the cluster, for example RUNNING, STOPPED, SAVED, or DELETED. |

| Pipelines on Cluster | The existing pipelines on the cluster. |

| Launch Time | Cluster launch time. Example: 2023-10-12 06:12:21 UTC |

| Duration | Running duration of the cluster. Example: 2 Hours 42 Minutes. |

| Actions | Actions that can be performed on the cluster: Start or Stop Cluster, Clone Cluster, Edit Cluster, and Delete Cluster. These actions are explained in detail below. |

Start or Stop Cluster

You can start or stop an existing cluster by clicking the Start/Stop option under the Action tab on the listing page.

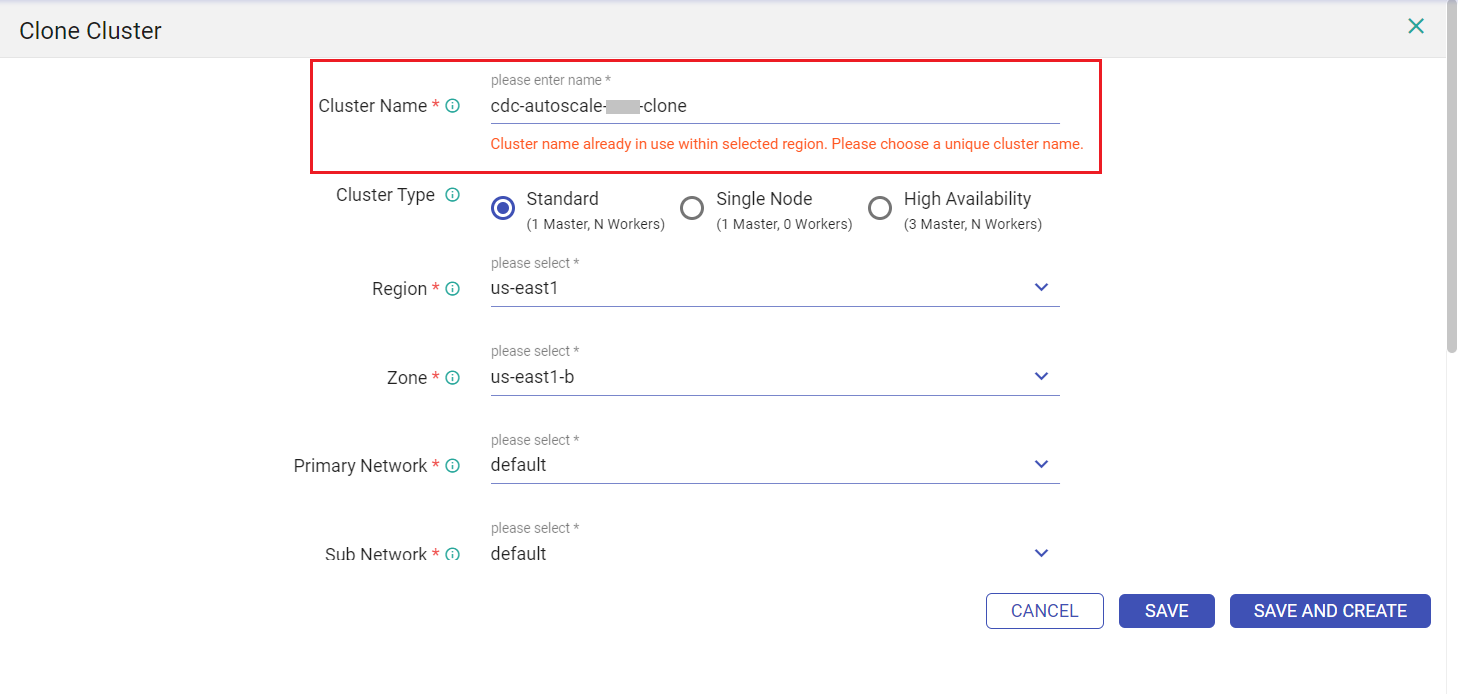

Clone Cluster

You can clone a cluster by clicking the Clone option under the Action tab.

Edit Cluster

You can edit a cluster by clicking the Edit option under the Action tab.

The Edit Cluster window opens with the following options:

| Field | Description |

|---|---|

| Cluster Name | Option to provide a unique name for the cluster, in lower case only. The name must start with a lowercase letter, followed by up to 51 lowercase letters, numbers, or hyphens. The name must not end with a hyphen. |

| Cluster Type | Option to choose the cluster type: Standard (1 Master, N Workers), Single Node Cluster (1 Master, 0 Workers), or High Availability (3 Masters, N Workers). |

| Region | Option to select the Cloud Dataproc regional service, determining the zones and resources that are available. |

| Zone | Option to select the zone whose computing resources the cluster uses and where its data is stored. |

| Primary Network | Option to select the network or any VPC network created in this project for the cluster. |
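The Cluster Name rule in the table above can be checked before a name is submitted. The sketch below encodes that rule as a regular expression. The names `CLUSTER_NAME_PATTERN` and `is_valid_cluster_name` are hypothetical, and Gathr may apply further checks (such as uniqueness) that are not covered here.

```python
import re

# One lowercase letter, then up to 51 more characters drawn from lowercase
# letters, digits, and hyphens, where the final character is not a hyphen.
CLUSTER_NAME_PATTERN = re.compile(r"[a-z]([a-z0-9-]{0,50}[a-z0-9])?")


def is_valid_cluster_name(name):
    """Return True if the name satisfies the documented naming rule."""
    return CLUSTER_NAME_PATTERN.fullmatch(name) is not None


for candidate in ["etl-cluster-1", "ETL-cluster", "1cluster", "cluster-"]:
    print(candidate, is_valid_cluster_name(candidate))
```

Of the four sample names, only `etl-cluster-1` passes: the others contain uppercase letters, start with a digit, or end with a hyphen.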

Click SAVE to update the changes of the cluster.

A cluster can be edited or updated only when it is in one of the following states:

- RUNNING

- SAVED

- DELETED

The following fields can be updated when the cluster is in the RUNNING state:

- graceful-decommission-timeout

- num-secondary-workers

- num-workers

- labels

- autoscaling-policy
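The state and field rules above can be expressed as a small pre-check. This is a sketch only: the function name `rejected_fields` is hypothetical, it assumes the list above is the complete set of fields that may change on a RUNNING cluster, and it assumes no field restriction for SAVED and DELETED clusters because none is documented here.

```python
# States in which a cluster can be edited, as listed above.
EDITABLE_STATES = {"RUNNING", "SAVED", "DELETED"}

# Fields that can be updated while the cluster is RUNNING, as listed above.
RUNNING_UPDATABLE_FIELDS = {
    "graceful-decommission-timeout",
    "num-secondary-workers",
    "num-workers",
    "labels",
    "autoscaling-policy",
}


def rejected_fields(status, changed_fields):
    """Return the changed fields that cannot be updated in the given state."""
    if status not in EDITABLE_STATES:
        raise ValueError("cluster cannot be edited in state " + status)
    if status == "RUNNING":
        return sorted(set(changed_fields) - RUNNING_UPDATABLE_FIELDS)
    # Assumption: SAVED and DELETED clusters have no documented restriction.
    return []


print(rejected_fields("RUNNING", ["num-workers", "zone"]))
print(rejected_fields("SAVED", ["num-workers", "zone"]))
```

For a RUNNING cluster, the first call reports `zone` as a field that cannot be changed, while `num-workers` is accepted.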

Delete Cluster

You can delete a cluster by clicking the Delete option under the Action tab.

If no pipelines are configured on a running cluster, you have two options for deleting it:

- Delete from GCP: the cluster is deleted from GCP but remains in the Gathr database, so the same cluster can be started again later.

- Delete from both GCP and Gathr: the cluster is removed from both.

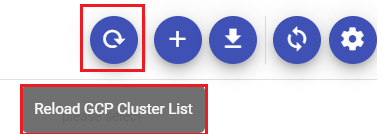

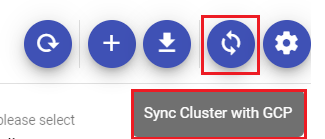

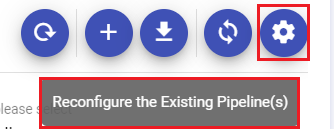

The Long Running Clusters page also provides the following options: Reload GCP Cluster List, Create New Cluster, Fetch Cluster from GCP, Sync Cluster with GCP, and Reconfigure the Existing Pipelines. These are explained below.

Reload GCP Cluster List

You can reload the GCP cluster listing by clicking the Reload GCP Cluster List option available on the Cluster List View page.

Create New Cluster

You can create a GCP Dataproc cluster to run ETL jobs by clicking the Create New Cluster option. For details on creating a cluster, see Create Cluster.

Fetch Cluster from GCP

If you have created a cluster in the GCP console and want to use it in Gathr to run pipelines, click the Fetch Cluster from GCP option.

On the Fetch Cluster window, provide the GCP Cluster ID and click FETCH. The cluster created in GCP is then shown on the Gathr UI, and you can register it in Gathr.

| Field | Description |

|---|---|

| Fetch Cluster From GCP | Option to fetch the existing cluster from GCP. Upon clicking this option, provide the GCP Cluster ID and click FETCH on the Fetch Cluster window. |

| Sync Cluster with GCP | Option to sync the existing cluster on Gathr database with GCP. |

| Reconfigure the Existing Pipeline(s) | Option to reconfigure the existing pipelines. Clicking this button opens the Migrate Pipelines to other Cluster window. |

Sync Cluster with GCP

You may need to sync a Gathr cluster with GCP, for example when a cluster has the DELETED or SAVED status in Gathr but has been launched from the GCP console.

Such a cluster is not synced with Gathr automatically. It is synced only on user request.

Click the Sync Cluster with GCP button in Gathr to sync your cluster.

Reconfigure the Existing Pipelines

This option enables you to reconfigure the pipelines from one cluster to another.

This is useful when you have to delete a cluster and use another cluster for your pipelines, or when you want to move a few pipelines from one or more clusters to another cluster.

To reconfigure pipeline(s), click the Reconfigure the Existing Pipeline(s) option.

The Migrate Pipelines to other Cluster window opens with the following fields:

| Field | Description |

|---|---|

| Source Cluster | Option to choose the source cluster(s) from where the required pipeline must be configured to run on the target cluster. |

| Pipelines | For the listed pipelines from the selected source cluster(s) choose the required pipelines that must be configured to run on the target cluster. |

| Target Cluster | Option to choose target cluster on which all the previously selected pipelines must be configured to run. |

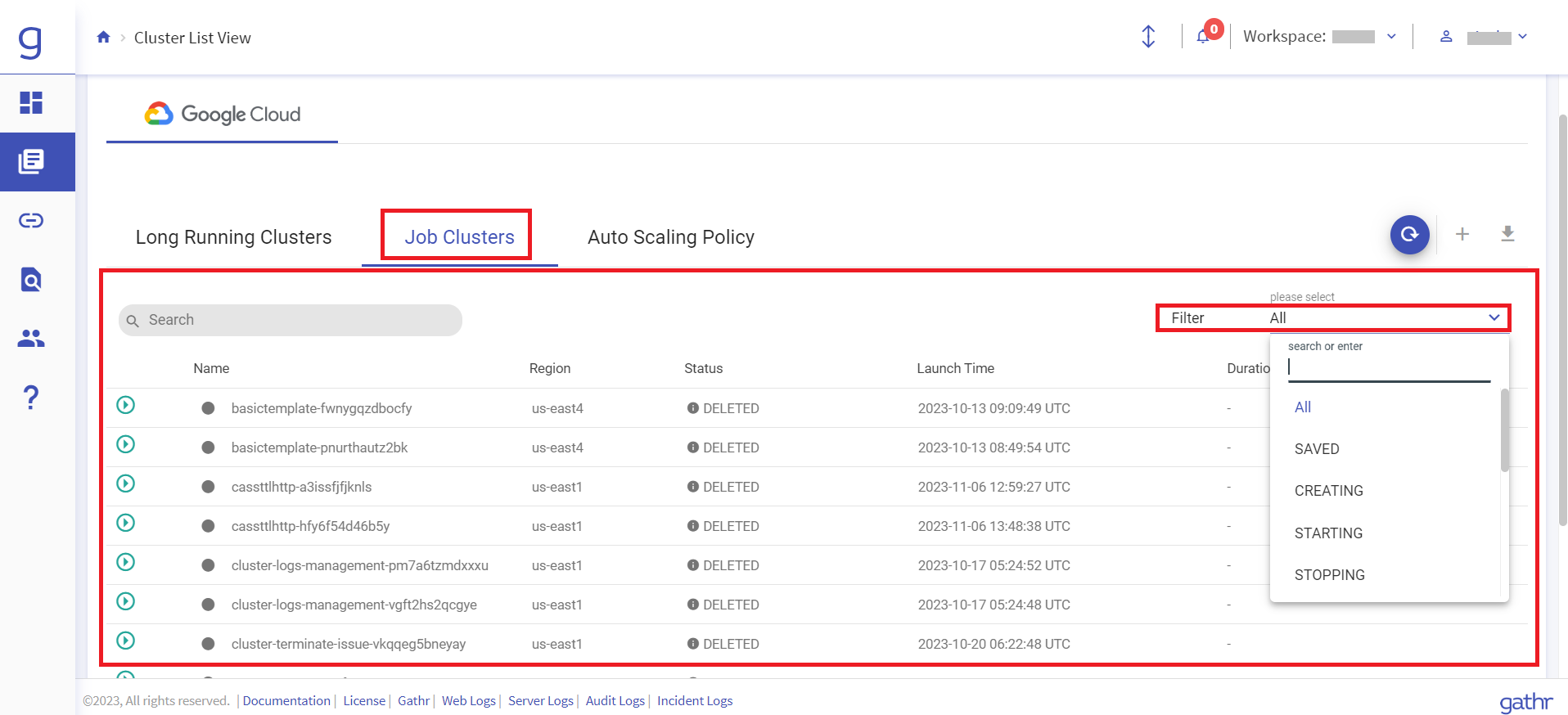

Job Clusters

The Job Clusters tab lists all job clusters. You can filter the job clusters using the options available in the Filters drop-down list:

- ALL

- SAVED

- CREATING

- STARTING

- STOPPING

- STOPPED

- DELETING

- ERROR

- UNKNOWN

- ERROR_DUE_TO_UPDATE

- RUNNING

- UPDATING

- DELETED

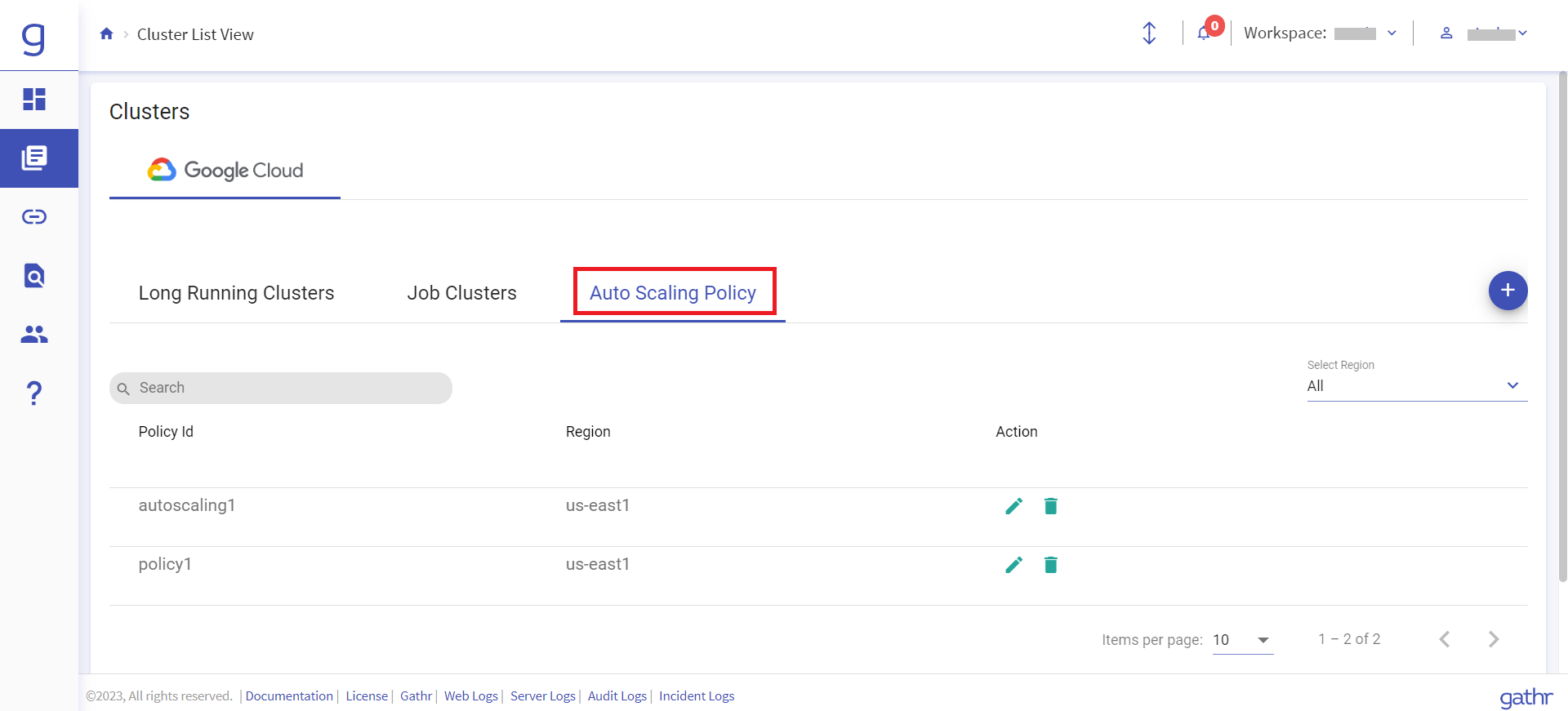

Auto Scaling Policy

Auto scaling automatically adds or removes virtual machine instances as the load increases or decreases, so that you can handle increases in traffic and reduce costs when the need for resources is lower.

The option to create and manage Auto Scaling Policies is available on the Cluster List View landing page.

Clicking the Auto Scaling Policy tab shows the following options:

| Field | Description |

|---|---|

| Policy ID | The existing policy ID. |

| Region | The region in which the policy is created. Example: us-east4 |

| Action | Option to Edit or Delete the policy. |

Create Policy

Click the + CREATE POLICY option to create a new policy under the Auto Scaling Policy page.

| Field | Description |

|---|---|

| Policy Name | Provide a unique name for the policy. |

| Region | The region in which the policy is to be created. Example: us-east4 |

| Yarn Configuration | Provide Yarn Configuration details including Scale up factor, Scale down factor, Scale up minimum worker fraction, and Scale down minimum worker fraction. |

| Graceful Decommission | Provide the Graceful Decommission timeout in hour(s), minute(s), second(s), or day(s). |

| Cooldown Duration | Provide the Cooldown duration in hour(s), minute(s), second(s), or day(s). |

| Scale primary workers | Check the option to scale primary workers. |

| Worker Configuration | Provide Worker Configuration details including number of instance(s), Secondary minimum instance(s), Secondary maximum instance(s). |
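For orientation, the fields above correspond closely to the autoscaling policy resource in the Dataproc API. The sketch below assembles such a policy as a Python dictionary. How Gathr maps its form fields to the API is an assumption, the helper names `to_duration` and `build_policy` are hypothetical, and all values are illustrative only. The primary worker count is kept fixed here by setting its minimum and maximum to the same number.

```python
UNIT_SECONDS = {"second": 1, "minute": 60, "hour": 3600, "day": 86400}


def to_duration(value, unit):
    """Convert a value and unit from the form into a seconds string, e.g. '120s'."""
    return str(value * UNIT_SECONDS[unit]) + "s"


def build_policy(policy_name, yarn, graceful_timeout, cooldown,
                 primary_instances, secondary_min, secondary_max):
    """Assemble a dictionary shaped like a Dataproc autoscaling policy."""
    return {
        "id": policy_name,
        "basicAlgorithm": {
            "cooldownPeriod": to_duration(*cooldown),
            "yarnConfig": {
                "scaleUpFactor": yarn["scale_up_factor"],
                "scaleDownFactor": yarn["scale_down_factor"],
                "scaleUpMinWorkerFraction": yarn["scale_up_min_worker_fraction"],
                "scaleDownMinWorkerFraction": yarn["scale_down_min_worker_fraction"],
                "gracefulDecommissionTimeout": to_duration(*graceful_timeout),
            },
        },
        # Primary workers are not scaled in this sketch: min equals max.
        "workerConfig": {
            "minInstances": primary_instances,
            "maxInstances": primary_instances,
        },
        "secondaryWorkerConfig": {
            "minInstances": secondary_min,
            "maxInstances": secondary_max,
        },
    }


policy = build_policy(
    policy_name="example-policy",  # illustrative name
    yarn={
        "scale_up_factor": 0.5,
        "scale_down_factor": 1.0,
        "scale_up_min_worker_fraction": 0.0,
        "scale_down_min_worker_fraction": 0.0,
    },
    graceful_timeout=(1, "hour"),
    cooldown=(2, "minute"),
    primary_instances=2,
    secondary_min=0,
    secondary_max=10,
)
print(policy)
```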

After creating a cluster, you can configure the GCP cluster in a data pipeline on Gathr.
