Gathr Automated Deployment on IBM

Prerequisites

Supported OS: CentOS 7.x/RHEL 7.x or CentOS 8.x/RHEL 8.x.

The latest Gathr build should be downloaded from https://arrival.gathr.one.

The above URL must be accessible from the client network.

Minimum hardware configuration required for deployment:

| VMs | Cores / RAM (GB) | Storage | OS |
|---|---|---|---|
| 1 | 16 / 32 | 200 GB | CentOS/RHEL 7.x/8.x |

The firewall and SELinux should be disabled.

The following ports must be open for the dependent services: 2181, 2888, 3888, 5432, 8090, 8009, 8005, 9200, 9300, 15671, 5672
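Before installing, it can help to confirm that no other process already holds one of these ports on the edge node. The bash sketch below is one way to do that; `check_ports` and `GATHR_PORTS` are hypothetical names, and the check relies on bash's `/dev/tcp` redirection and coreutils `timeout`. It only detects local listeners and does not test firewall rules between hosts.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): report whether something already listens on
# each port Gathr's dependent services need. Checks local listeners only.

# Ports copied from the prerequisites list above.
GATHR_PORTS="2181,2888,3888,5432,8090,8009,8005,9200,9300,15671,5672"

# check_ports HOST PORT_LIST
# Prints "<port> listening" if a TCP connection succeeds within 1 s,
# otherwise "<port> free".
check_ports() {
  local host="$1" port
  local -a ports
  IFS=',' read -r -a ports <<< "$2"
  for port in "${ports[@]}"; do
    port="${port// /}"   # tolerate "2181, 2888" style lists
    if timeout 1 bash -c ": </dev/tcp/${host}/${port}" 2>/dev/null; then
      echo "${port} listening"
    else
      echo "${port} free"
    fi
  done
}
```

For example, `check_ports 127.0.0.1 "$GATHR_PORTS"` prints one line per port; a port reported as `listening` before installation is already taken by another process.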

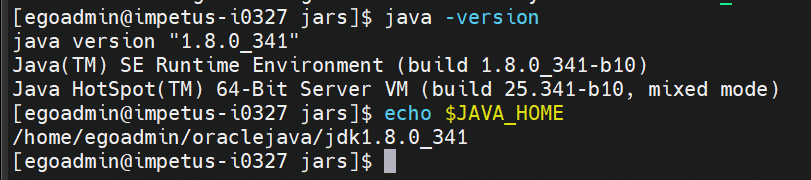

JAVA_HOME must be set correctly, because Gathr may have issues with IBM Java. This guide uses Oracle Java.

- Python 3.8.8 must be configured on the machines.
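To confirm the Java prerequisite above, a check along these lines can be run as the installation user. This is a sketch, not part of the Gathr installer: `check_java_home` is a hypothetical helper, and treating any `java -version` output that mentions IBM as IBM Java is an assumption.

```shell
#!/usr/bin/env bash
# check_java_home [JAVA_HOME_DIR]   (hypothetical helper)
# Returns 2 if no executable java is found, 1 if it looks like IBM Java,
# otherwise prints the first line of "java -version" and returns 0.
check_java_home() {
  local jh="${1:-${JAVA_HOME:-}}"
  local version
  if [ -z "$jh" ] || [ ! -x "$jh/bin/java" ]; then
    echo "JAVA_HOME is not set or $jh/bin/java is not executable" >&2
    return 2
  fi
  # java -version writes its banner to stderr
  version="$("$jh/bin/java" -version 2>&1)"
  if printf '%s\n' "$version" | grep -qi 'IBM'; then
    echo "JAVA_HOME ($jh) appears to be IBM Java; Oracle Java is recommended" >&2
    return 1
  fi
  printf '%s\n' "$version" | head -n 1
}
```

For example, `check_java_home /opt/jdk1.8.0_341` checks the Java home used in the sample configuration, and `check_java_home` with no argument checks the current `JAVA_HOME`.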

The Gathr installation user must have read/write permissions on the NFS location that contains shared resources such as keytab files, SSL certificates, and Spark jars.

The IBM Spark installation directory must be present on the edge node so that it can be configured in the Gathr configuration.

If Kafka is configured with Kerberos or SSL, the keystore.jks and truststore.jks files, the keytab files, and the respective certificate passwords are required.

If Kerberos-secured connections such as HDFS are used, the krb5.conf file and a keytab file for the Gathr installation user, with access to all Kerberized services, are required.
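A quick way to confirm the NFS and keytab prerequisites is to test them as the installation user, for example with the hypothetical helper below. It only checks file-system permissions; to confirm that a keytab actually authenticates, MIT Kerberos' `klist -kt <keytab>` lists its principals and `kinit -kt <keytab> <principal>` obtains a ticket with it.

```shell
#!/usr/bin/env bash
# check_shared_access DIR [FILE...]   (hypothetical helper)
# Fails if the current user cannot read and write DIR, or cannot read any
# of the listed files (for example keytabs or certificates).
check_shared_access() {
  local dir="$1"; shift
  local rc=0 f
  if [ ! -d "$dir" ] || [ ! -r "$dir" ] || [ ! -w "$dir" ]; then
    echo "$(id -un) lacks read/write access to $dir" >&2
    rc=1
  fi
  for f in "$@"; do
    if [ ! -r "$f" ]; then
      echo "$(id -un) cannot read $f" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

For example, `check_shared_access <nfs_dir> <keytab_path>`, where both arguments are placeholders for your NFS share and the installation user's keytab.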

Ansible must be installed on the edge node (where Gathr is being deployed). Commands:

sudo yum install epel-release

sudo yum install ansible

ansible --version

- ansible-playbook must be run as the “root” user for the RabbitMQ and Postgres installation.

Installer scripts

Download the installation files from the shared location provided by the Gathr support team to the edge node where Gathr is to be set up. After extraction, the “GathrDeploymentforIBM-Spectrum” folder containing the setup files is available.

Folder structure & necessary permissions

The table below specifies the location and permissions of the various artifacts provided on the edge node.

| Name | Sample Path | Permission | Owner | Description |
|---|---|---|---|---|
| Gathr Bundle | /home/sax/Gathr-5.0.0-SNAPSHOT.tar.gz | 444 | sax | Gathr tar setup file |
| Automation Scripts | /home/sax/GathrDeploymentforIBM-Spectrum | 755 | sax | Scripts to automate deployment |

Example:

chmod 444 <path>/Gathr-5.0.0-SNAPSHOT.tar.gz

chmod -R 755 <path>/GathrDeploymentforIBM-Spectrum
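After running the chmod commands, the resulting modes and owners can be compared with the table above. The sketch below uses GNU coreutils `stat -c`; `check_mode` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# check_mode PATH EXPECTED_MODE [EXPECTED_OWNER]   (hypothetical helper)
# Compares the octal mode (and optionally the owner) of PATH with the
# expected values; prints "OK" on success, an error on stderr otherwise.
check_mode() {
  local path="$1" want_mode="$2" want_owner="${3:-}"
  local mode owner
  mode="$(stat -c '%a' "$path")" || return 2
  owner="$(stat -c '%U' "$path")"
  if [ "$mode" != "$want_mode" ]; then
    echo "$path: mode $mode, expected $want_mode" >&2
    return 1
  fi
  if [ -n "$want_owner" ] && [ "$owner" != "$want_owner" ]; then
    echo "$path: owner $owner, expected $want_owner" >&2
    return 1
  fi
  echo "$path: OK ($mode $owner)"
}
```

For example, `check_mode /home/sax/Gathr-5.0.0-SNAPSHOT.tar.gz 444 sax` and `check_mode /home/sax/GathrDeploymentforIBM-Spectrum 755 sax`.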

SSL certificates

If any CDP services are configured with SSL, their SSL certificates must be imported into the Java truststore (cacerts) under JAVA_HOME on the Gathr machine.

Example of importing a certificate:

keytool -import -alias <resource manager hostname> -file <path of resource manager pem file/xyz.pem> -keystore $JAVA_HOME/jre/lib/security/cacerts -storepass changeit

If Gathr Tomcat must start with HTTPS, set tomcat_port to 8443 in saxconfig_parameters (described in the “Installation Options” section).

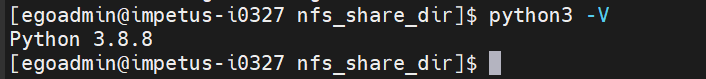

Flow Diagram

The diagram below shows the high-level steps of the Gathr installation process.

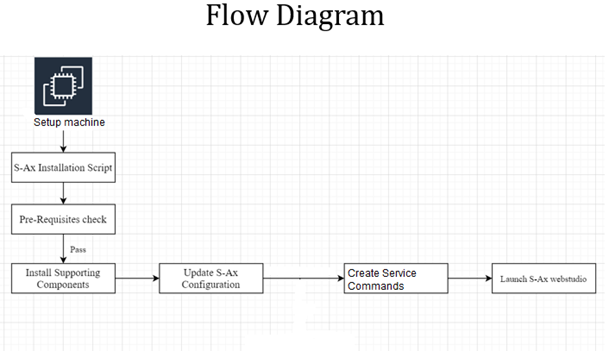

Ansible Script Execution Flow

The diagram below shows the detailed steps of the Gathr installation.

Installation Options

The table below describes each parameter passed in the configuration file. (See the “SAX Config Parameters” section for a sample file.)

Update the “saxconfig_parameters” file located at GathrDeploymentforIBM-Spectrum/saxconfig_parameters with the actual values, referring to the sample values given in the table below.

| Parameter Name | Sample Value | Description |
|---|---|---|
| User and group for Gathr web studio deployment | | |
| User_Name | gathr | Application user |
| Group_Name | gathr | Application group |
| Option for IBM Spectrum | | |
| spark_cluster_manager | Standalone or yarn | Cluster manager for IBM Spectrum: “Standalone” for Spark standalone or “yarn” for an Apache YARN cluster |
| Server and worker IPs and hostnames (separate multiple nodes with commas) | | |
| all_hostip | ip1, ip2 | IPs of the servers where the Spark workers, data nodes, and node managers will be installed |
| hostnames | fqdn-hostname1, fqdn-hostname2 | Hostnames of the servers where the Spark workers, data nodes, and node managers will be installed |
| Individual service host entries | | |
| es_server | FQDN hostname or IP | Elasticsearch deployment host |
| rmq_server | FQDN hostname or IP | RabbitMQ host |
| postgres_server | FQDN hostname or IP | Postgres host |
| Gathr_host | FQDN hostname or IP | Gathr host; the Postgres client should be available on this host |
| spark_jars_shared_path | /data/nfs/var/nfs_share_dir/spark-3.0.1-hadoop-3.2/jars | Shared path where the Spark jars are present |
| Oracle JDK details | | |
| java_home | /usr/java/jdk1.8.0_121/ | Oracle Java home. The Java home path should be the same on all hosts. |
| Tarball details and paths | | |
| installation_path | /home/gathr/gathrautotest | Installation path for third-party components (e.g., ES, Airflow) |
| sax_installation_dir | /home/gathr/gathrautotest | Gathr installation path; the Gathr tarball should be available in this folder |
| sax_tar_file | Gathr-x.x.x-SNAPSHOT.tar.gz | Gathr tarball file name |
| Hadoop user for Gathr (any user that can access the HDFS file system) | | |
| HADOOPUSER | gathr | HDFS user that has access to the HDFS file system |
| tomcat_port | 8090 | Gathr Tomcat port: any available port for HTTP (default 8090); for HTTPS, use 8443 only |
| Components configuration | | |
| zookeeper_version | apache-zookeeper-3.5.10 | Default version packaged with this setup. To use another version, place its tarball in the /packages folder in the installation directory and update the version in saxconfig_parameters |
| elasticsearch_version | elasticsearch-6.8.1 | Default version packaged with this setup. To use another version, place its tarball in the /packages folder in the installation directory and update the version in saxconfig_parameters |
| cluster_name | ES681 | Elasticsearch cluster name |
| Postgres configuration and tarball | | |
| postgresconfig | /var/lib/pgsql | Postgres installation (config files and data) directory (default) |
| db_name | postgres | Required database name |
| db_type | postgresql | Database type: postgresql (default) or mssql |
| db_type_schema | postgres | Default value |
| db_user | postgres | Database user (default value; change as per DB) |
| db_password | postgres | Database password (default value; change as per DB) |
| RabbitMQ configuration and tarball | | |
| rmq_user | test | RabbitMQ login username |
| rmq_password | test | RabbitMQ login password |
| rmq_version | rabbitmq-3.9.16 | RabbitMQ version to be deployed; this package is already available in GathrDeploymentforIBM-Spectrum/packages |
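When scripting around the installer, it can be handy to read individual values back out of saxconfig_parameters. The sketch below assumes the file is a plain list of `KEY=VALUE` lines with `#` comments, as in the sample in the “SAX Config Parameters” section; `get_param` is a hypothetical helper, not part of the Gathr scripts. It tolerates spaces around `=` and skips commented-out lines such as the alternative `jdbc_driver` entry.

```shell
#!/usr/bin/env bash
# get_param KEY [FILE]   (hypothetical helper)
# Prints the value of KEY from a KEY=VALUE file (default: ./saxconfig_parameters).
# Returns 1 if the key is missing or its value is empty.
get_param() {
  local key="$1" file="${2:-saxconfig_parameters}"
  awk -v key="$key" '
    /^[ \t\r]*#/ { next }                  # skip comment lines
    {
      eq = index($0, "=")
      if (eq == 0) next                    # not a KEY=VALUE line
      k = substr($0, 1, eq - 1)
      v = substr($0, eq + 1)
      gsub(/^[ \t\r]+|[ \t\r]+$/, "", k)   # trim key
      gsub(/^[ \t\r]+|[ \t\r]+$/, "", v)   # trim value
      if (k == key) val = v                # last occurrence wins
    }
    END { if (val == "") exit 1; print val }
  ' "$file"
}
```

For example, `get_param tomcat_port GathrDeploymentforIBM-Spectrum/saxconfig_parameters` prints the configured Tomcat port.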

Supported Service options

The services mandatory for Gathr (Postgres, RabbitMQ, Elasticsearch, and ZooKeeper) are included in the automated installation and are installed with the versions available in the GathrDeploymentforIBM-Spectrum/packages folder.

If a different version of any package is required, copy the respective tarball into the packages folder and update the version in saxconfig_parameters.

S-Ax_deployment.yml installs RabbitMQ and PostgreSQL as the root user, and Elasticsearch, ZooKeeper, and Gathr as the service user.

If any of these services is already installed on the cluster node, comment out the respective service entries in the “S-Ax_deployment.yml” file.

Deployment

As the root user, run ./config.sh and then the Ansible playbook (S-Ax_deployment.yml) from the GathrDeploymentforIBM-Spectrum folder (for example, /home/sax/GathrDeploymentforIBM-Spectrum):

./config.sh

ansible-playbook -i hosts S-Ax_deployment.yml -c local

Alternatively, use the -vv option to run the installation in verbose (debug) mode:

ansible-playbook -i hosts S-Ax_deployment.yml -vv -c local

Track the installation progress on the console while the above command runs.

The logs would also be available under /

If Gathr is already installed and you re-run this setup, the existing Gathr folder is renamed with a date suffix and a new installation starts.
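To keep a copy of the console output for later troubleshooting, the two deployment commands can be wrapped so that everything is also written to a timestamped log. This is a sketch under assumptions: `run_gathr_install` and the `gathr_install_<timestamp>.log` file name are hypothetical, and the wrapper only warns (rather than stops) when not run as root.

```shell
#!/usr/bin/env bash
# run_gathr_install DIR [extra ansible-playbook options...]   (hypothetical)
# Runs ./config.sh and then the playbook from DIR, copying all console output
# to DIR/gathr_install_<timestamp>.log. Returns the first failing status.
run_gathr_install() {
  local dir="$1"; shift
  local log rc
  if [ "$(id -u)" -ne 0 ]; then
    echo "warning: the playbook is expected to run as root" >&2
  fi
  log="$dir/gathr_install_$(date +%Y%m%d_%H%M%S).log"
  (
    set -o pipefail   # keep the installer's exit status despite tee
    cd "$dir" || exit 1
    { ./config.sh && ansible-playbook -i hosts S-Ax_deployment.yml -c local "$@"; } 2>&1 | tee "$log"
  )
  rc=$?
  echo "Installer output saved to $log" >&2
  return "$rc"
}
```

For example, as root: `run_gathr_install /home/sax/GathrDeploymentforIBM-Spectrum -vv`. Extra arguments such as `-vv` are passed through to ansible-playbook.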

Validate Deployment

Validate Gathr URL

Get the IP address or hostname of the instance where Gathr is hosted (Gathr_host in saxconfig_parameters), and then open one of the following URLs in a browser, depending on the configuration:

http://<InstanceIP>:8090/Gathr or https://<InstanceIP>:8443/Gathr
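Since the scheme follows from the configured tomcat_port (8443 for HTTPS, any other port for HTTP), the URL can also be built and probed from the command line. A minimal sketch with hypothetical helper names; `curl -k` skips certificate verification and is only appropriate for a quick check against self-signed certificates.

```shell
#!/usr/bin/env bash
# gathr_url HOST [TOMCAT_PORT]   (hypothetical helper)
# Builds the Gathr URL: https for port 8443, http for any other port.
gathr_url() {
  local host="$1" port="${2:-8090}" scheme=http
  if [ "$port" = "8443" ]; then
    scheme=https
  fi
  printf '%s://%s:%s/Gathr\n' "$scheme" "$host" "$port"
}

# check_gathr_url HOST [TOMCAT_PORT]   (hypothetical helper)
# Prints the HTTP status code returned by the Gathr URL (000 if unreachable).
check_gathr_url() {
  curl -sk -o /dev/null -w '%{http_code}\n' --max-time 10 "$(gathr_url "$@")"
}
```

For example, `check_gathr_url 172.x.x.x 8090` prints the HTTP status code returned by the Gathr page; `000` means the server could not be reached.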

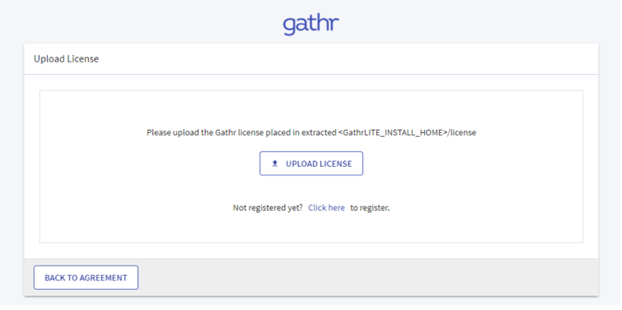

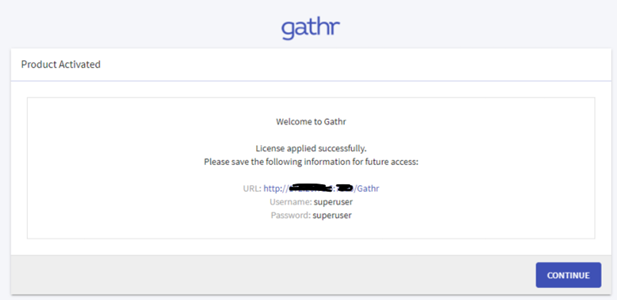

On first login, Gathr asks you to accept the license agreement. Upload the Gathr license.

The default username and password are superuser/superuser.

Gathr is set up as a service. Check its status, or stop and start it, with the following commands:

sudo systemctl status gathr-server.service

sudo systemctl stop gathr-server.service

sudo systemctl start gathr-server.service

The logs are located in Gathr/logs/sax-web-logs (under the Gathr installation directory).

Validate RabbitMQ, Postgres, Elasticsearch and Airflow

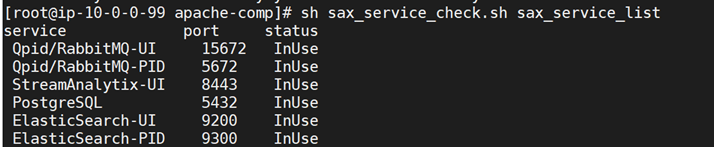

Run the service check script:

sudo sh sax_service_check.sh sax_service_list

For Postgres, you should be able to log in to the console using the username and password provided in “saxconfig_parameters”.

RabbitMQ and Elasticsearch URLs should be accessible on the ports shown in the output in the screenshot above.
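The same checks can be scripted with standard clients, using the hosts, ports, and credentials from saxconfig_parameters. The sketch below is an assumption-laden example rather than what sax_service_check.sh does: it calls the Elasticsearch `_cluster/health` API, the RabbitMQ management API (`/api/overview`, which needs the management plugin and a user permitted to use it), and `psql`; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical reachability checks for Gathr's dependent services.

check_es() {    # check_es HOST [PORT]
  curl -sf --max-time 10 "http://$1:${2:-9200}/_cluster/health" >/dev/null
}

check_rmq() {   # check_rmq HOST USER PASSWORD [MGMT_PORT]
  curl -sf --max-time 10 -u "$2:$3" "http://$1:${4:-15672}/api/overview" >/dev/null
}

check_pg() {    # check_pg HOST USER PASSWORD DB [PORT]
  PGPASSWORD="$3" psql -h "$1" -p "${5:-5432}" -U "$2" -d "$4" -tAc 'SELECT 1' >/dev/null
}

report() {      # report NAME CHECK_FUNCTION ARGS...
  local name="$1"; shift
  if "$@"; then echo "$name: OK"; else echo "$name: FAILED"; fi
}

# Example, with values from the sample saxconfig_parameters:
#   report Elasticsearch check_es 172.x.x.x 9200
#   report RabbitMQ check_rmq localhost test test 15672
#   report Postgres check_pg 172.x.x.x postgres postgres gathribm9j 5432
```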

SAX Config Parameters

#### User and group for S-Ax web studio deployment

User_Name=sax

Group_Name=sax

####Server and worker IP & Hostnames (Multiple nodes are separated with comma)

all_hostip=172.x.x.x

hostnames=172.x.x.x

### Path where third-party components will be installed (ES, Postgres, RMQ, etc.)

installation_path=/home/sax/standalone_services/

####Individual service host entries

### ZOOKEEPER START ###

zk=172.x.x.x

client_port=2181

forward_port1=2888

forward_port2=3888

admin_serverPort=8081

zookeeper_version=apache-zookeeper-3.5.10-bin

### ZOOKEEPER END ###

### ELASTICSEARCH START ###

es_server=172.x.x.x

ES_CONNECT_PORT=9200

ES_TRANSPORT_PORT=9300

elasticsearch_version=elasticsearch-6.8.1

cluster_name=ES681

### ELASTICSEARCH END ###

### RABBITMQ START ###

rmq_server=localhost

rmq_user=test

rmq_password=test

rmq_version=rabbitmq-3.9.16

rabbitmq_port=5672

rabbitmq_management_port=15672

rabbitmq_web_stomp_port=15679

### RABBITMQ END ###

### POSTGRESQL START ###

postgres_server=localhost

##Postgres config files and data path

postgresconfig=/var/lib/pgsql

##Postgres rpms name

psql1=lz4-1.8.3-1.el7.x86_64.rpm

psql2=postgresql14-14.7-1PGDG.rhel7.x86_64.rpm

psql3=postgresql14-libs-14.7-1PGDG.rhel7.x86_64.rpm

psql4=postgresql14-server-14.7-1PGDG.rhel7.x86_64.rpm

psql5=postgresql14-devel-14.7-1PGDG.rhel7.x86_64.rpm

psql6=libicu-50.2-4.el7_7.x86_64.rpm

### POSTGRESQL END ###

### GATHR START ###

Gathr_host=172.x.x.x

## Gathr installation path, where Gathr-5.0.0-SNAPSHOT.tar.gz should be available

sax_installation_dir=/home/sax/gathrtest/

##Gathr tar name

sax_tar_file=Gathr-5.0.0-SNAPSHOT.tar.gz

## IBM Spark jars path (NFS path where the Spark jars are present)

spark_jars_shared_path=/data/nfs/var/nfs_share_dir/spark-3.0.1-hadoop-3.2/jars

## StreamAx Tomcat port (any available port for HTTP setup, default 8090; for HTTPS, use 8443 only)

tomcat_port=8090

### GATHR END ###

## Oracle jdk path

java_home=/opt/jdk1.8.0_341

#### Hadoop user to access the HDFS file system: for a non-Kerberos cluster, 'hdfs' (default) or any other user with access; for a Kerberos environment, specify the keytab user that has access to the Hadoop file system

HADOOPUSER=sax

## Provide option for Apache - "Standalone" for spark standalone or "yarn" for apache yarn cluster

spark_cluster_manager=yarn

spark_version=spark-3.3.0-bin-hadoop3

### DATABASE CONFIGS FOR GATHR - START ###

db_name=gathribm9j

## db_type values: postgresql, mssql

db_type=postgresql

db_user=postgres

db_password=postgres

db_host=172.x.x.x

db_port=5432

#jdbc_driver=com.microsoft.sqlserver.jdbc.SQLServerDriver

#default org.postgresql.Driver

jdbc_driver=org.postgresql.Driver

### DATABASE CONFIGS FOR GATHR - END ###

### CERTIFICATE DETAILS (IF REQUIRED) ###

keystorejks=/etc/ssl/certs/keystore.jks

keystorepasswd=Impetus1!

alias_hostname=instance-2.us-east4-c.c.gathr-360409.internal

## Shared location that all users can access, for example /opt

certs_path=/etc/ssl/certs/

cert_alias=gathr_impetus_com

certpassword=Impetus1!

### CERTIFICATE CONFIGS END ###
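Because every setting in this file is a `KEY=VALUE` line, a small lint pass can catch malformed entries before running ./config.sh. This is a sketch: `lint_params` is hypothetical, and requiring no spaces around `=` is an assumption based on the sample above (it is also what a shell `source` of the file would need).

```shell
#!/usr/bin/env bash
# lint_params FILE   (hypothetical helper)
# Flags lines that are not comments, blank, or KEY=VALUE without spaces
# before "=", and keys whose value is empty. Returns 1 if anything is flagged.
lint_params() {
  awk '
    /^[ \t\r]*#/ || /^[ \t\r]*$/ { next }   # comments and blank lines
    !/^[A-Za-z_][A-Za-z0-9_]*=/ {
      printf "line %d: not in KEY=VALUE form: %s\n", NR, $0
      bad = 1
      next
    }
    /=[ \t\r]*$/ {
      printf "line %d: empty value: %s\n", NR, $0
      bad = 1
    }
    END { exit (bad ? 1 : 0) }
  ' "$1"
}
```

Run it as `lint_params saxconfig_parameters`; it prints one message per problem line and returns non-zero if anything was flagged.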

Post Deployment

Once the Gathr deployment is complete and the setup is validated, any additional required components and connections can be configured.

For more details on setting up Dashboard, Jupyter, H2O, and other configurations, refer to the Gathr Installation Guide (under “Appendix-2 Post Deployment steps”).

- Open Gathr at the following URL:

http://<Gathr_IP>:8090/Gathr

- Accept the End User License Agreement and click Next.

- The Upload License page opens.

- Upload the license and click “Confirm”.

- The login page is displayed.

- Log in with the default credentials superuser/superuser.

Additional Configurations for Gathr

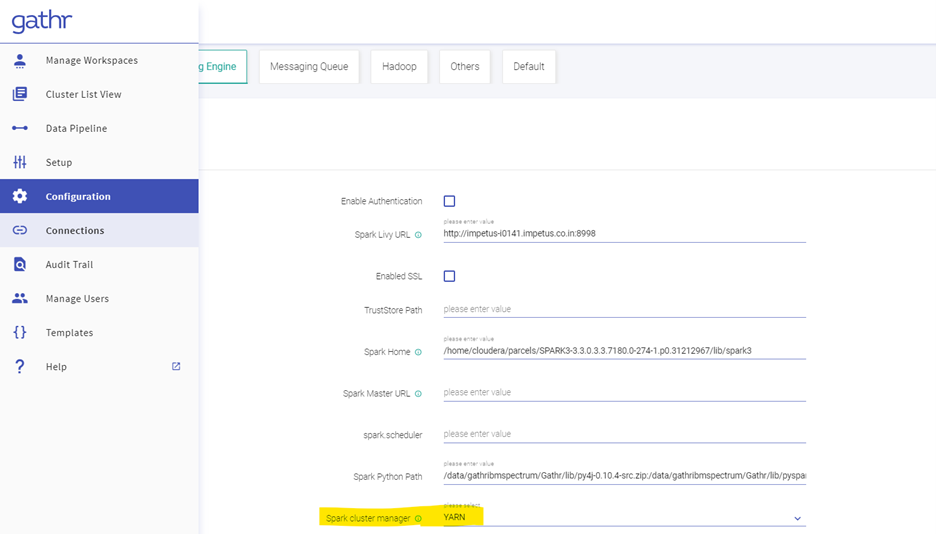

- Go to Configuration, then Processing Engine, and select YARN as the Spark Cluster Manager. Click Save.

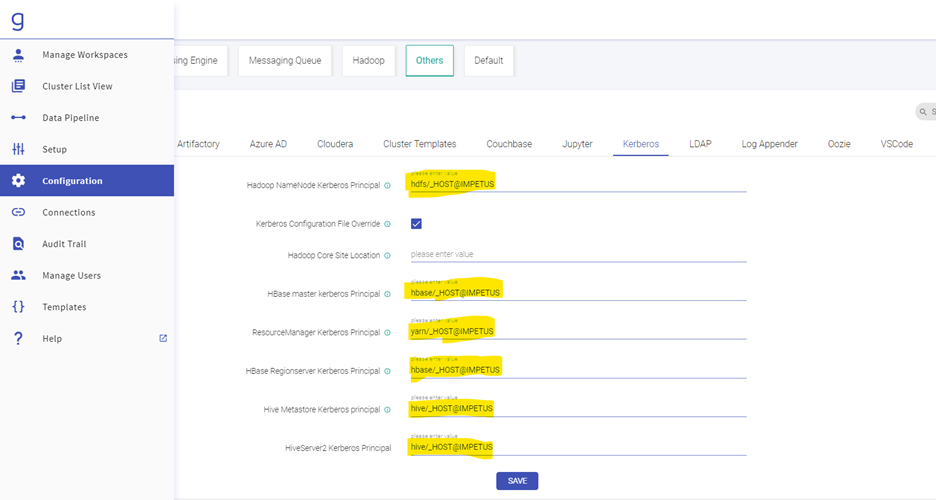

- Go to Configuration, click Others, then Kerberos, and edit your Kerberos principals accordingly. Click Save.

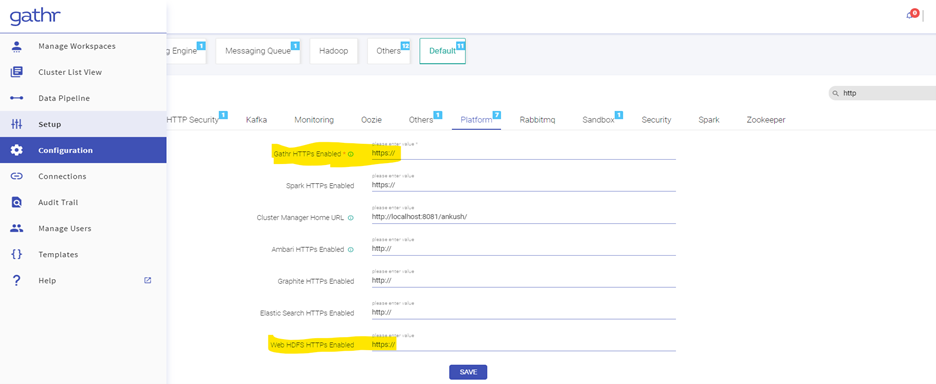

- Go to Configuration and type http in the search box. Click Default, then Platform, change the Gathr and HDFS URLs to use https://, and click Save.

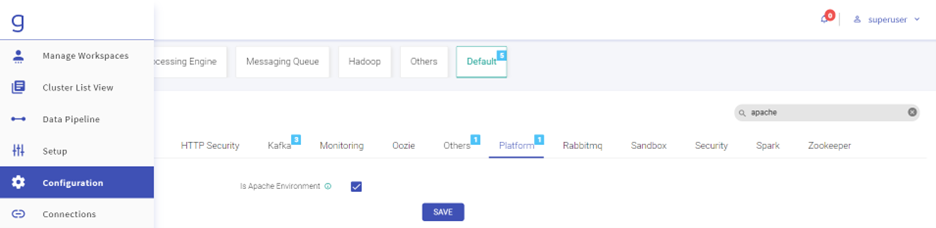

- Go to Configuration, then click Default. Search for “Apache” and select “Is Apache Env”. Click Save.

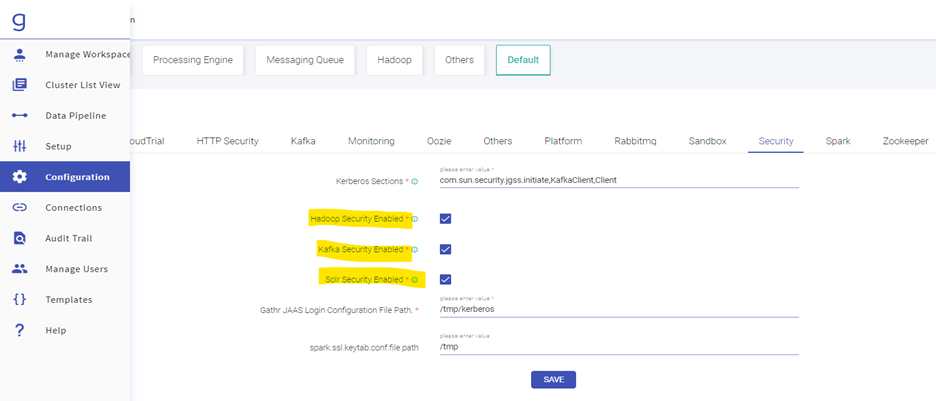

- Go to Configuration, click Default, then Security. Select the Hadoop, Kafka, and Solr security check boxes. Click Save.

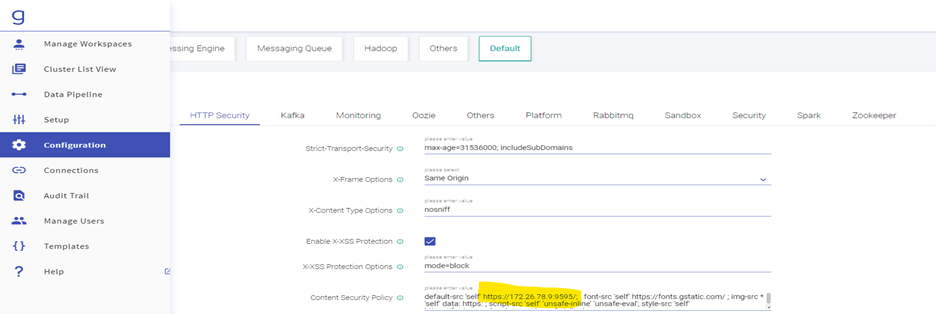

- To start the Frontail server on SSL, go to Configuration, click Default, then HTTP Security, and in the content security policy sections add your IP address instead of localhost.

Next, go to the Gathr/bin folder and start the Frontail server. If Gathr is SSL-enabled, pass key.path and certificate.path with your own paths, as in the command below; otherwise, simply run ./startFrontailServer.sh.

./startFrontailServer.sh -key.path=/etc/ssl/certsnew/my_store.key -certificate.path=/etc/ssl/certsnew/gathr_impetus_com.pem

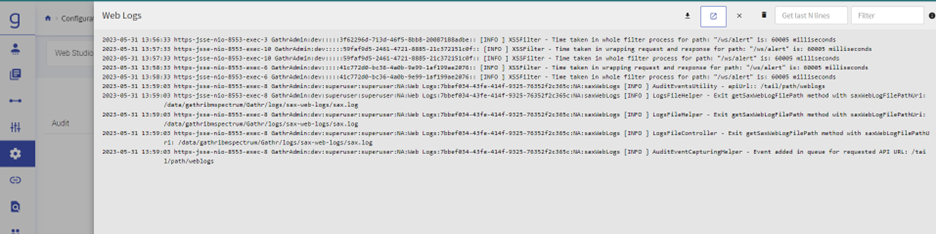

- Web logs can then be viewed through the UI.

Creating Connections in Gathr

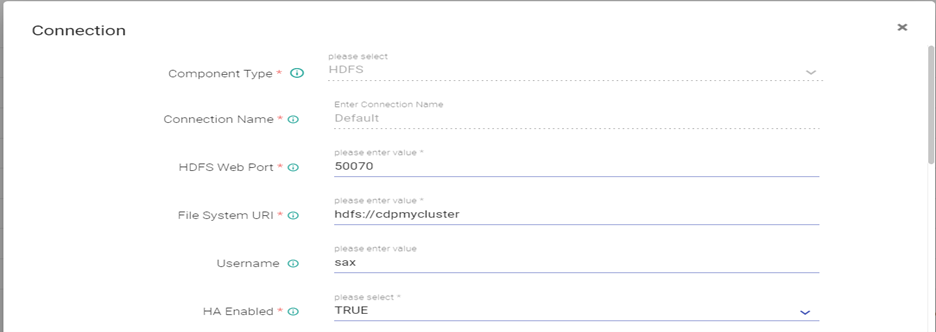

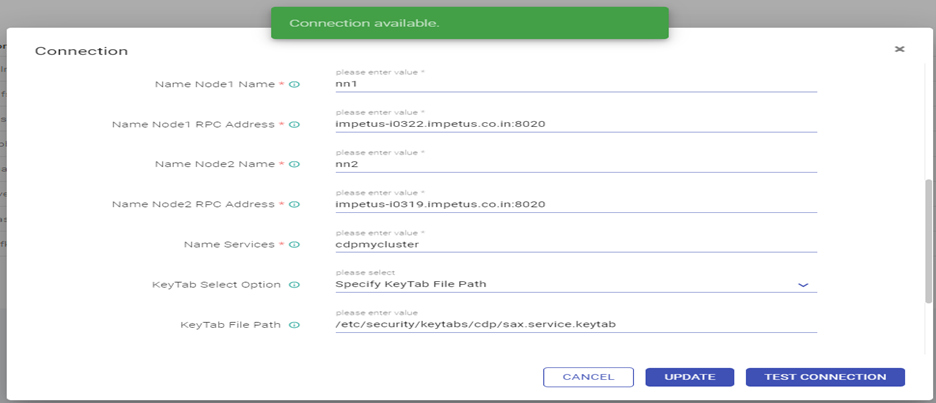

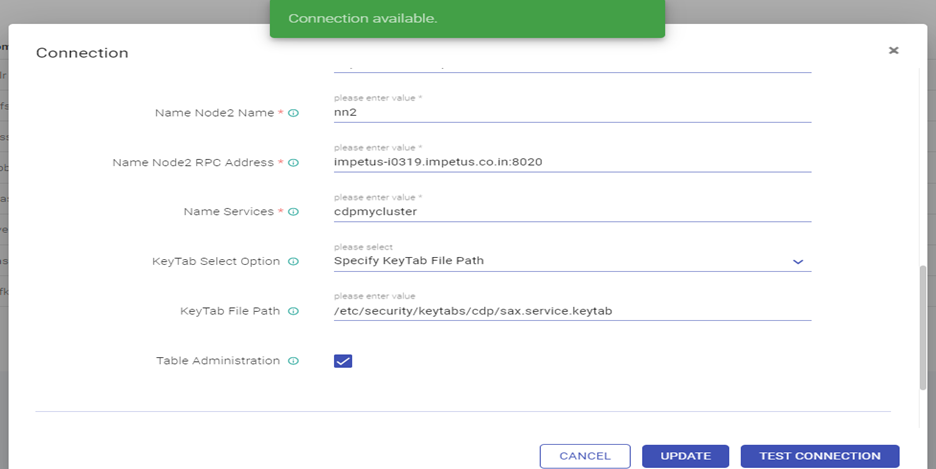

- Edit the HDFS connection as shown, click Test Connection (the connection should be available), and update it.

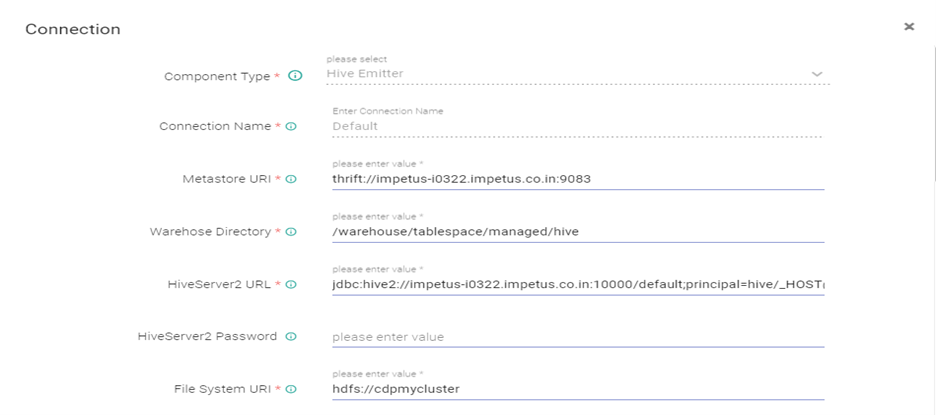

- Edit the Hive connection as shown, click Test Connection (the connection should be available), and update it.

HiveServer2 URL: jdbc:hive2://impetus-i0322.impetus.co.in:10000/default;principal=hive/_HOST@IMPETUS;ssl=true

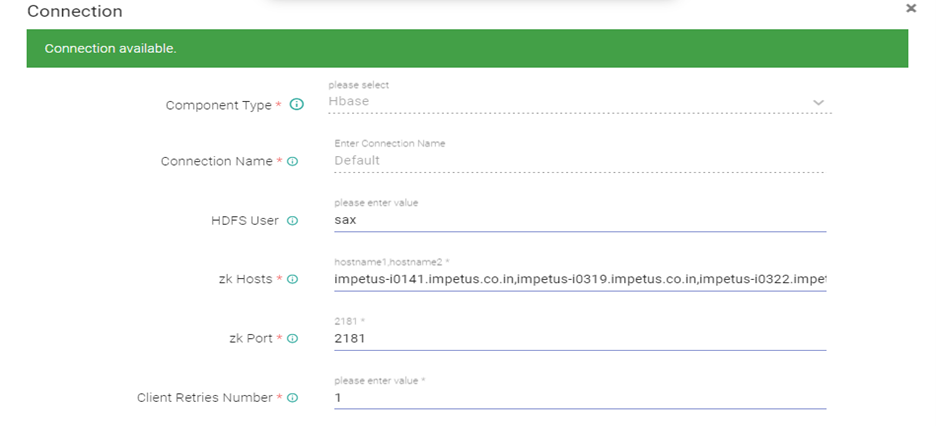

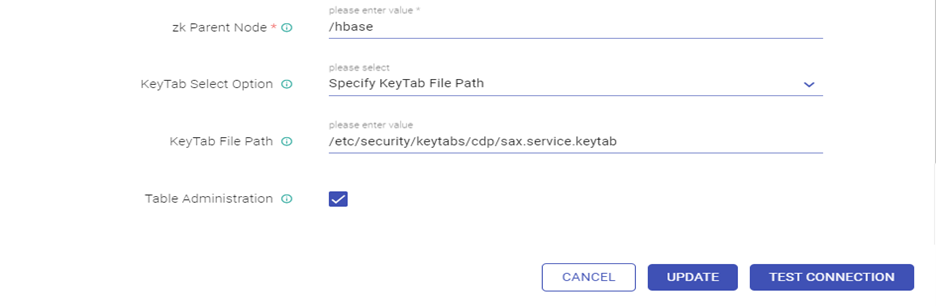

- Edit the HBase connection, click Test Connection (the connection should be available), and update it.

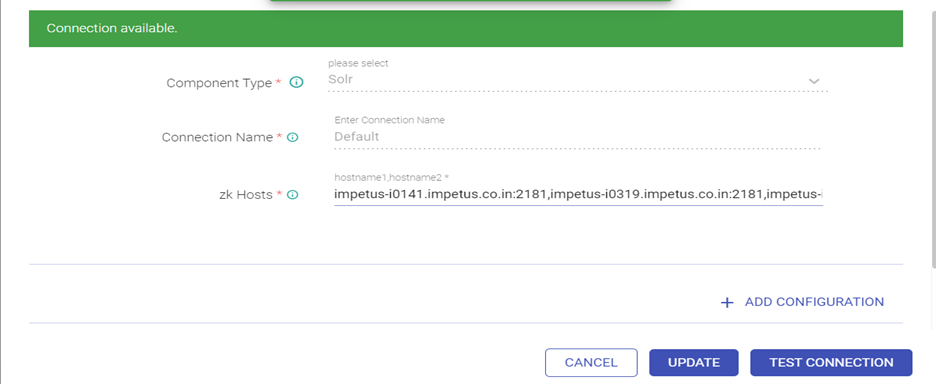

- Edit the Solr connection, click Test Connection (the connection should be available), and update it.

ZK Hosts: <zk_hosts:port>/<solr_zk_node>

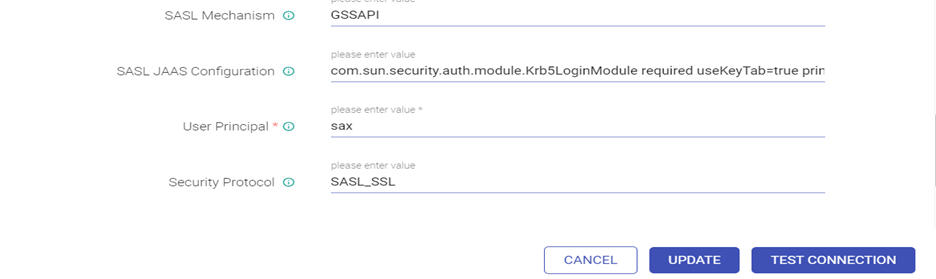

Edit the Kafka connection as below.

Provide the ZK hosts and Kafka brokers:

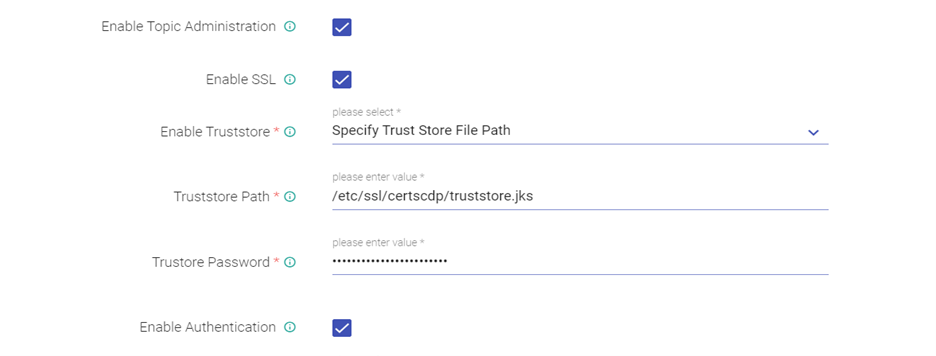

Enable Topic Administration, and enable SSL and authentication for Kafka. Provide the truststore path and password:

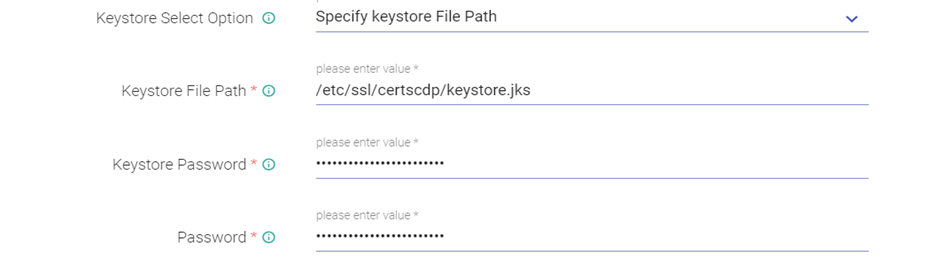

Provide the keystore path and password:

Enable Kerberos:

Enter the SASL configuration as follows:

com.sun.security.auth.module.Krb5LoginModule required useKeyTab=true principal="sax@IMPETUS" keyTab="<Keytab_Path>" storeKey=true serviceName="kafka" debug=true;

Provide the user principal and security protocol as follows:

Click Test Connection (the connection should be available) and update the connection.
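To avoid typos in the SASL configuration string (for example, stray spaces inside the keyTab path), it can be generated from the principal and keytab path. This is a sketch with a hypothetical helper that emits the same options as the sample above. A reasonable extra step is to confirm first, with `kinit -kt <keytab> <principal>`, that the keytab can obtain a ticket.

```shell
#!/usr/bin/env bash
# kafka_sasl_jaas PRINCIPAL KEYTAB_PATH   (hypothetical helper)
# Prints the Krb5LoginModule line for the Kafka connection's SASL field,
# after checking that the keytab is readable by the current user.
kafka_sasl_jaas() {
  local principal="$1" keytab="$2"
  if [ ! -r "$keytab" ]; then
    echo "keytab not readable: $keytab" >&2
    return 1
  fi
  printf 'com.sun.security.auth.module.Krb5LoginModule required useKeyTab=true principal="%s" keyTab="%s" storeKey=true serviceName="kafka" debug=true;\n' "$principal" "$keytab"
}
```

For example, `kafka_sasl_jaas sax@IMPETUS <Keytab_Path>` prints the line to paste into the SASL configuration field.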

If you have any feedback on Gathr documentation, please email us!