SSL Components Setup

The setup details for SSL components are provided below:

Setup steps for Kafka (Databricks)

Copy the required certificates (keystore.jks & truststore.jks) from the local terminal to DBFS, or upload them from the DBFS UI, into the specific DBFS directory.

    databricks fs cp truststore.jks dbfs:/<KAFKA_CERT_DBFS_PATH>
    databricks fs cp keystore.jks dbfs:/<KAFKA_CERT_DBFS_PATH>

Create a script file that will copy these certificates from DBFS to the cluster node path.

Create a file kafka\_cert.sh and add the below content in it.

    mkdir -p /tmp/kafka/keystore
    mkdir -p /etc/ssl/certs

The following is required when the upload option is used in the Kafka connection.

    cp /dbfs/<KAFKA_CERT_DBFS_PATH>/truststore.jks /tmp/kafka/keystore
    cp /dbfs/<KAFKA_CERT_DBFS_PATH>/keystore.jks /tmp/kafka/keystore

The following is required when the specify path option is used in the Kafka connection.

    cp /dbfs/<KAFKA_CERT_DBFS_PATH>/truststore.jks /etc/ssl/certs
    cp /dbfs/<KAFKA_CERT_DBFS_PATH>/keystore.jks /etc/ssl/certs

Save this file and upload it to the DBFS path.

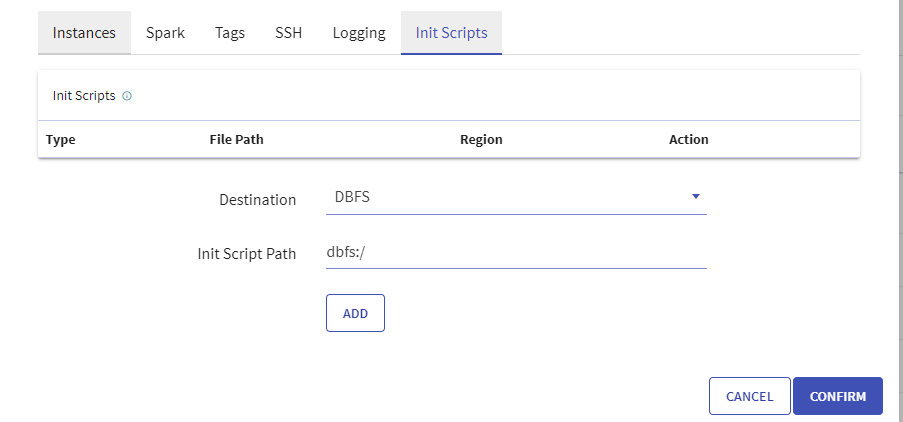

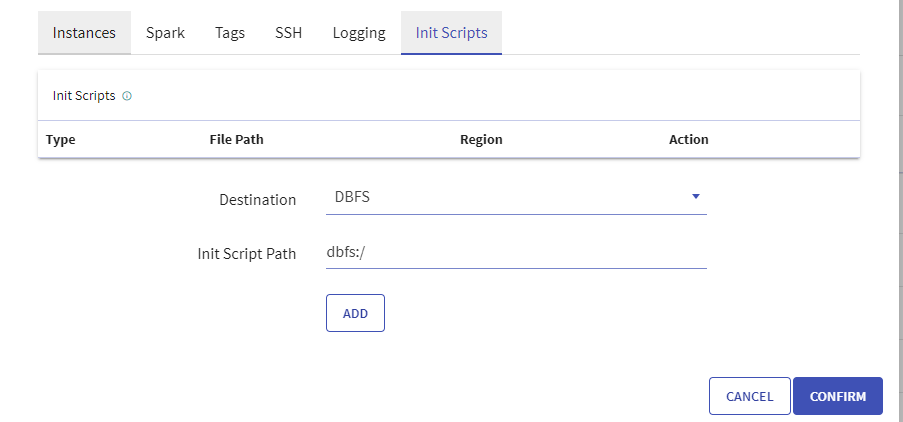

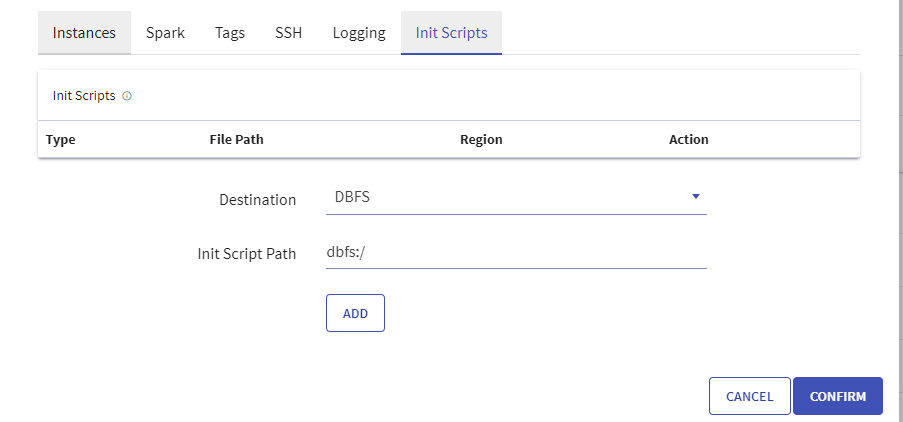

Make sure the Kafka connection uses the same keystore & truststore path as the above script (/etc/ssl/certs/). If any other path is used, update the script accordingly.

Add the script path to init-script during the cluster creation process for Databricks in Gathr Home Page > Cluster List View > Databricks > Create Cluster > Init Scripts.

The Init Script can also be added from Databricks URL during cluster creation.
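If a certificate is missing from the DBFS path, it helps for the init script to say so explicitly rather than continue. The following is a minimal sketch, assuming a POSIX shell on the cluster node. The helper name copy_cert is hypothetical and is not part of Gathr or Databricks.

```shell
#!/bin/sh
# Sketch only: copy_cert is a hypothetical helper, not a Gathr or Databricks command.
# It copies one certificate file into a target directory and returns an error
# if the source file does not exist.
copy_cert() {
    src="$1"
    dest_dir="$2"
    if [ ! -f "$src" ]; then
        echo "certificate not found: $src" >&2
        return 1
    fi
    # Create the target directory if needed, then copy the file into it.
    mkdir -p "$dest_dir" && cp "$src" "$dest_dir/"
}
```

In kafka\_cert.sh it could be called as `copy_cert /dbfs/<KAFKA_CERT_DBFS_PATH>/truststore.jks /etc/ssl/certs`, with the placeholder replaced by the actual DBFS directory.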


Setup steps for Kafka (EMR-AWS)

When a pipeline is submitted on an EMR cluster, the steps below are required only if the specify path option is used in the Kafka connection. They are not required if the upload option is used.

Copy from the local terminal to S3 the required certificates (

keystore.jks&truststore.jks) in specific S3 directory.aws s3 cp truststore.jks s3://<KAFKA\_CERT\_S3\_PATH>/ aws s3 cp keystore.jks s3://<KAFKA\_CERT\_S3\_PATH>/Create a script file that will copy these certificates from S3 to the cluster node path.

Create file

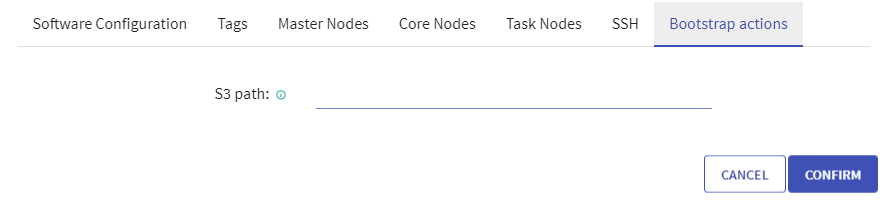

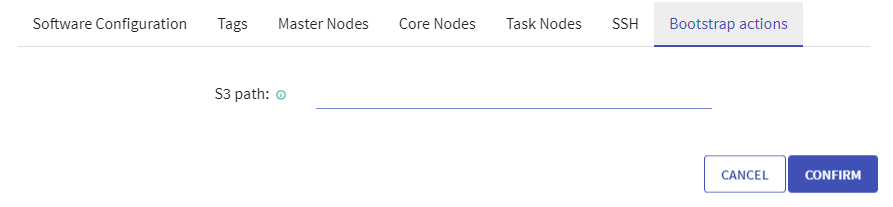

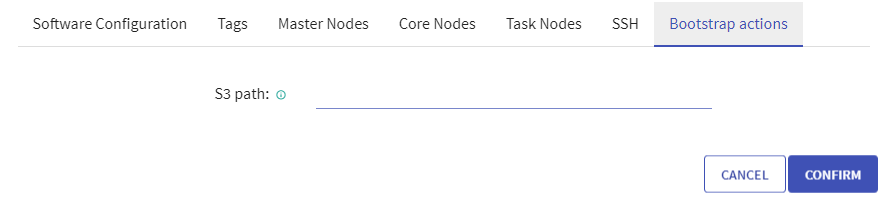

kafka\_cert.shand add the below content in it:sudo mkdir -p /etc/ssl/certs sudo aws s3 cp s3://<KAFKA\_CERT\_S3\_PATH>/truststore.jks /etc/ssl/certs sudo aws s3 cp s3://<KAFKA\_CERT\_S3\_PATH>/keystore.jks /etc/ssl/certsMake sure that Kafka connection should have same keystore & truststore path as above script (etc/ssl/certs/). If any other path is used, then update the same in the above script accordingly.Add that script path to Bootstrap actions during cluster creation process in EMR from Cluster List View> Amazon EMR> Create Cluster> Bootstrap actions.
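Because the connection and the script must agree on the certificate path, a quick check at the end of the bootstrap script can confirm that the expected files actually arrived. This is a sketch under assumptions: check_certs is a hypothetical helper name, and the file names passed to it are whatever the connection expects.

```shell
#!/bin/sh
# Sketch only: check_certs is a hypothetical helper.
# Usage: check_certs DIRECTORY FILE [FILE...]
# Reports every listed file that is missing or empty in DIRECTORY
# and returns non-zero if at least one is.
check_certs() {
    dir="$1"
    shift
    missing=0
    for name in "$@"; do
        if [ ! -s "$dir/$name" ]; then
            echo "missing or empty: $dir/$name" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For the Kafka script this could be called as `check_certs /etc/ssl/certs truststore.jks keystore.jks` after the copy commands.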

Setup steps for MSSQL (Databricks-Azure & AWS)

For an MSSQL connection, the certificate script must be added on both Databricks and EMR, because only the certificate path is specified in the MSSQL connection.

Setup steps for Databricks (Azure & AWS)

Copy the required certificates (host pem file & truststore.jks) from the local terminal to DBFS, or upload them from the DBFS UI, into the specific DBFS directory.

    databricks fs cp truststore.jks dbfs:/<MSSQL_CERT_DBFS_PATH>/
    databricks fs cp <hostname -f>.pem dbfs:/<MSSQL_CERT_DBFS_PATH>/

Create a script file that will copy these certificates from DBFS to the cluster node path.

Create a file mssql\_cert.sh and add the below content in the file:

    mkdir -p /etc/ssl/certs/mssqlcert
    cp /dbfs/<MSSQL_CERT_DBFS_PATH>/truststore.jks /etc/ssl/certs/mssqlcert/
    cp /dbfs/<MSSQL_CERT_DBFS_PATH>/<hostname -f>.pem /etc/ssl/certs/mssqlcert/

Make sure the MSSQL connection uses the same path as the above script (/etc/ssl/certs/mssqlcert). If any other path is used, update the script accordingly.

Add the script path to init-script during the cluster creation process for Databricks in Gathr Home Page > Cluster List View > Databricks > Create Cluster > Init Scripts.
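The <hostname -f>.pem placeholder is not a literal file name. As a sketch, assuming it stands for the fully qualified host name printed by `hostname -f` on the host the certificate belongs to, the file name could be derived as follows. The helper name pem_name is hypothetical.

```shell
#!/bin/sh
# Sketch only: pem_name is a hypothetical helper.
# Builds the .pem file name from a host name. With no argument it
# falls back to the output of `hostname -f` on the current machine.
pem_name() {
    host="${1:-$(hostname -f)}"
    printf '%s.pem\n' "$host"
}
```

For example, `pem_name sqlhost.example.com` prints sqlhost.example.com.pem, where sqlhost.example.com is an illustrative host name only.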

Setup steps for EMR (AWS)

Copy the required certificates (host pem file & truststore.jks) from the local terminal to the specific S3 directory.

    aws s3 cp truststore.jks s3://<MSSQL_CERT_S3_PATH>/
    aws s3 cp <hostname -f>.pem s3://<MSSQL_CERT_S3_PATH>/

Create a script file that will copy these certificates from S3 to the cluster node path.

Create a file mssql\_cert.sh and add the below content in it:

    sudo mkdir -p /etc/ssl/certs/mssqlcert
    sudo aws s3 cp s3://<MSSQL_CERT_S3_PATH>/truststore.jks /etc/ssl/certs/mssqlcert
    sudo aws s3 cp s3://<MSSQL_CERT_S3_PATH>/<hostname -f>.pem /etc/ssl/certs/mssqlcert

Add the script path to Bootstrap actions during the cluster creation process in EMR from Cluster List View > Amazon EMR > Create Cluster > Bootstrap actions.

Setup steps for Redshift (Databricks-Azure & AWS)

Copy the required certificate (redshift-ca-bundle.crt) from the local terminal to DBFS, or upload it from the DBFS UI, into the specific DBFS directory.

    databricks fs cp redshift-ca-bundle.crt dbfs:/<REDSHIFT_CERT_DBFS_PATH>/

Create a script file that will copy this certificate from DBFS to the cluster node path.

Create the file redshift\_cert.sh and add the below content to it.

    mkdir -p /tmp/rds
    mkdir -p /etc/ssl/certs

The following is required when the upload option is used in the Redshift connection.

    cp /dbfs/<REDSHIFT_CERT_DBFS_PATH>/redshift-ca-bundle.crt /tmp/rds

The following is required when the specify path option is used in the Redshift connection.

    cp /dbfs/<REDSHIFT_CERT_DBFS_PATH>/redshift-ca-bundle.crt /etc/ssl/certs

Add the script path to init-script during the cluster creation process for Databricks in Gathr Home Page > Cluster List View > Databricks > Create Cluster > Init Scripts.
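The two Redshift connection options map to two different target directories. A minimal sketch of that mapping is shown here. The helper name redshift_cert_dir and the option labels upload and specify-path are assumptions made for illustration. Only the two directories come from the steps above.

```shell
#!/bin/sh
# Sketch only: redshift_cert_dir and its option labels are hypothetical.
# Prints the directory that the certificate should be copied to
# for the given connection option.
redshift_cert_dir() {
    case "$1" in
        upload)       echo "/tmp/rds" ;;
        specify-path) echo "/etc/ssl/certs" ;;
        *)            echo "unknown option: $1" >&2; return 1 ;;
    esac
}
```

A script could then copy the bundle with `cp /dbfs/<REDSHIFT_CERT_DBFS_PATH>/redshift-ca-bundle.crt "$(redshift_cert_dir upload)"`.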

Setup steps for EMR

When a pipeline is submitted on an EMR cluster, the steps below are required only if the specify path option is used in the Redshift connection. They are not required if the upload option is used.

Copy the required certificate (redshift-ca-bundle.crt) from the local terminal to the specific S3 directory.

    aws s3 cp redshift-ca-bundle.crt s3://<REDSHIFT_CERT_S3_PATH>/

Create a script file that will copy this certificate from S3 to the cluster node path.

Create a file redshift\_cert.sh and add the below content to it:

    sudo mkdir -p /etc/ssl/certs
    sudo aws s3 cp s3://<REDSHIFT_CERT_S3_PATH>/redshift-ca-bundle.crt /etc/ssl/certs

Add the script path to Bootstrap actions during the cluster creation process in EMR from Cluster List View > Amazon EMR > Create Cluster > Bootstrap actions.

Setup steps for Tibco

For Databricks (Azure & AWS)

Copy/Upload the required certificates (pem files) in specific DBFS directory:

    databricks fs cp server.cert.pem dbfs:<DBFS_CERTS_PATH>
    databricks fs cp server.key.pem dbfs:<DBFS_CERTS_PATH>

Create a script file that will copy these certificates from DBFS to the cluster node path.

Create a file tibco\_cert.sh and add the below content to it:

    #!/bin/sh
    echo "start init script"
    mkdir -p /opt/tibco-certs
    cp /dbfs/<DBFS_CERTS_PATH>/server.cert.pem /opt/tibco-certs
    cp /dbfs/<DBFS_CERTS_PATH>/server.key.pem /opt/tibco-certs
    echo "end init script"

Add the script path to init-script during the cluster creation process.

Here, /opt/tibco-certs is the path that we are using in Tibco Connection in Gathr.


For EMR:

Copy/Upload the required certificates (pem files of tibco) in the specific S3 directory.

    aws s3 cp server.cert.pem s3://<S3_CERTS_PATH>
    aws s3 cp server.key.pem s3://<S3_CERTS_PATH>

Create a script file that will copy these certificates from S3 to the cluster node path.

Create a file tibco\_cert.sh and add the below content to it:

    echo "tibco init script start"
    sudo mkdir -p /opt/tibco-certs
    sudo aws s3 cp s3://<S3_CERTS_PATH>/server.cert.pem /opt/tibco-certs
    sudo aws s3 cp s3://<S3_CERTS_PATH>/server.key.pem /opt/tibco-certs
    echo "tibco init script end"

Add the script path to Bootstrap actions during the cluster creation process.
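A space between the directory and the file name makes a copy command treat them as two separate arguments, so the source path must be written as one word. As a sketch, a small helper can build the full path from its two parts. The name join_path is hypothetical and the bucket name in the usage note is illustrative.

```shell
#!/bin/sh
# Sketch only: join_path is a hypothetical helper.
# Joins a directory (or S3 prefix) and a file name with exactly one slash,
# whether or not the directory already ends with a slash.
join_path() {
    dir="${1%/}"
    printf '%s/%s\n' "$dir" "$2"
}
```

For example, `join_path s3://my-bucket/certs/ server.key.pem` prints s3://my-bucket/certs/server.key.pem, which can be passed as the source argument of `aws s3 cp`.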
