Variables


Variables let you use values in your pipelines at runtime, within the scope you assign to each variable.

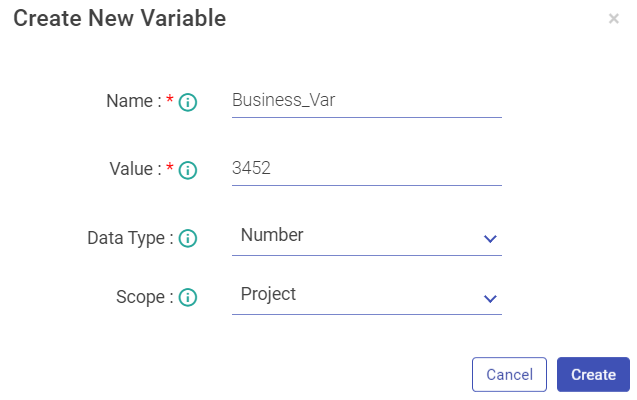

To add a variable, click the Create New Variable (+) icon and provide the details described below.

| Field | Description |
|---|---|
| Name | Name of the variable. |
| Value | Value assigned to the variable. The value can be an expression. |
| Data Type | Data type of the variable. The options are: Number, Decimal, and String. |
| Scope | Scope of the variable. The options are: Project: the variable is available within the project. Pipeline: the variable is available within the selected pipeline. Workspace: the variable is available within all the topologies of the workspace. Global: the variable is available across the application, including its workspaces and projects. Note: Global variables can also be used in the Functions Processor. |

For example, suppose you create the following variables: Name, Salary, and Average.

You can then retrieve all of the variables as a map, varMap, in your implementation class with the following code:

Map<String, ScopeVariable> varMap = (Map<String, ScopeVariable>) configMap.get("svMap");

To use the Name variable, get it from varMap with the following code. The returned variable object holds all the details of the variable: Name, Value, Data Type, and Scope.

ScopeVariable variable = varMap.get("Name");

String value = variable.getValue();
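The sketch below puts the two snippets above together in a self-contained program. It is illustrative only and rests on several assumptions: `ScopeVariable` is replaced by a hypothetical stand-in class (only `getValue()` appears in this article; `getName()`, `getDataType()`, and `getScope()` are assumed), the variable map is assumed to be stored in `configMap` under the key `"svMap"`, and the value is converted by data type on the assumption that Number maps to `Long` and Decimal maps to `Double`. The helpers `requireVariable` and `typedValue` are hypothetical names introduced for this example.

```java
import java.util.HashMap;
import java.util.Map;

public class ScopeVariableDemo {

    // Hypothetical stand-in for Gathr's ScopeVariable. Only getValue() is
    // documented; the other accessors are assumptions for this sketch.
    static final class ScopeVariable {
        private final String name;
        private final String value;
        private final String dataType; // "Number", "Decimal" or "String"
        private final String scope;    // "Project", "Pipeline", "Workspace" or "Global"

        ScopeVariable(String name, String value, String dataType, String scope) {
            this.name = name;
            this.value = value;
            this.dataType = dataType;
            this.scope = scope;
        }

        String getName() { return name; }
        String getValue() { return value; }
        String getDataType() { return dataType; }
        String getScope() { return scope; }
    }

    // Converts the raw string value according to the variable's data type.
    // Assumption: Number maps to Long and Decimal maps to Double.
    static Object typedValue(ScopeVariable variable) {
        switch (variable.getDataType()) {
            case "Number":
                return Long.parseLong(variable.getValue());
            case "Decimal":
                return Double.parseDouble(variable.getValue());
            default:
                return variable.getValue();
        }
    }

    // Reads the variable map from configMap (assumed key "svMap") and returns
    // the named variable, failing with a clear message if it is missing.
    @SuppressWarnings("unchecked")
    static ScopeVariable requireVariable(Map<String, Object> configMap, String name) {
        Map<String, ScopeVariable> varMap = (Map<String, ScopeVariable>) configMap.get("svMap");
        if (varMap == null || !varMap.containsKey(name)) {
            throw new IllegalArgumentException("Scope variable not found: " + name);
        }
        return varMap.get(name);
    }

    public static void main(String[] args) {
        // Simulated variables; in a real component the platform populates configMap.
        Map<String, ScopeVariable> varMap = new HashMap<>();
        varMap.put("Name", new ScopeVariable("Name", "Alice", "String", "Pipeline"));
        varMap.put("Salary", new ScopeVariable("Salary", "5000", "Number", "Project"));
        varMap.put("Average", new ScopeVariable("Average", "72.5", "Decimal", "Workspace"));

        Map<String, Object> configMap = new HashMap<>();
        configMap.put("svMap", varMap);

        ScopeVariable salary = requireVariable(configMap, "Salary");
        Object value = typedValue(salary);
        System.out.println(salary.getName() + " (" + salary.getScope() + ") = " + value);
    }
}
```

Running `main` prints `Salary (Project) = 5000`. Failing fast on a missing name is a design choice for the sketch; a real component might instead fall back to a default value.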

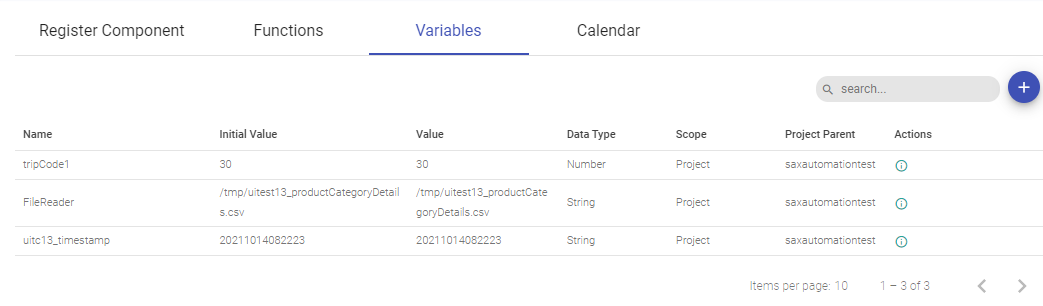

Variable listing page (shown below)

Scope Variable

You can add scope variables and then reuse and update them as needed in pipelines and pipeline components. Scope variables can be used in the following component fields:

- Cobol (Data Source) → copybookPath, dataPath
- HDFS (Data Source) → File Path
- Hive (Data Source) → Query
- JDBC (Data Source) → Query
- GCS, Batch and Streaming (Data Source) → File Path
- File Writer (Emitter) → File Path

The following formats are supported. In these examples, filepath is set to /user/hdfs and lastdecimal is set to 10:

- `@{Pipeline.filepath}` = `/user/hdfs`
- `@{Workspace.filepath}` = `/user/hdfs`
- `@{Global.filepath}/JSON/demo.json` = `/user/hdfs/JSON/demo.json`
- `@{Pipeline.filepath + '/JSON/demo.json'}` = `/user/hdfs/JSON/demo.json`
- `@{Workspace.filepath + "/JSON/demo.json"}` = `/user/hdfs/JSON/demo.json`
- `@{Global.lastdecimal + 4}` = `14.0` (adds the number 4 to the value of lastdecimal)
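To illustrate how these formats resolve, here is a minimal, hypothetical resolver. It is not Gathr's implementation; it supports only the forms listed above: a `Scope.name` reference inside `@{...}`, optionally followed by `+` and either a quoted string (concatenated) or a number (added as a decimal). The class and method names (`ScopeExpressionDemo`, `resolve`, `evaluate`) and the variable values are assumptions chosen to reproduce the examples.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class ScopeExpressionDemo {

    // Matches each @{...} placeholder in a field value.
    private static final Pattern PLACEHOLDER = Pattern.compile("@\\{([^}]*)\\}");

    // Matches "Scope.name", optionally followed by "+ operand".
    private static final Pattern EXPRESSION =
            Pattern.compile("\\s*(\\w+)\\.(\\w+)\\s*(?:\\+\\s*(.+?))?\\s*");

    // Replaces every @{...} placeholder in the input with its evaluated value;
    // text outside the braces (such as "/JSON/demo.json") is kept as-is.
    static String resolve(String input, Map<String, String> variables) {
        Matcher m = PLACEHOLDER.matcher(input);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            String replacement = evaluate(m.group(1), variables);
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    // Evaluates "Scope.name", "Scope.name + 'text'" or "Scope.name + number".
    static String evaluate(String expr, Map<String, String> variables) {
        Matcher m = EXPRESSION.matcher(expr);
        if (!m.matches()) {
            throw new IllegalArgumentException("Unsupported expression: " + expr);
        }
        String key = m.group(1) + "." + m.group(2);
        String value = variables.get(key);
        if (value == null) {
            throw new IllegalArgumentException("Unknown variable: " + key);
        }
        String operand = m.group(3);
        if (operand == null) {
            return value;
        }
        if (isQuoted(operand)) {
            // String literal: append it without its surrounding quotes.
            return value + operand.substring(1, operand.length() - 1);
        }
        // Otherwise treat both sides as decimals and add them.
        return String.valueOf(Double.parseDouble(value) + Double.parseDouble(operand));
    }

    // True if s is wrapped in matching single or double quotes.
    private static boolean isQuoted(String s) {
        return s.length() >= 2
                && (s.charAt(0) == '\'' || s.charAt(0) == '"')
                && s.charAt(s.length() - 1) == s.charAt(0);
    }

    public static void main(String[] args) {
        // Assumed values, chosen to reproduce the examples in this article.
        Map<String, String> vars = new HashMap<>();
        vars.put("Pipeline.filepath", "/user/hdfs");
        vars.put("Workspace.filepath", "/user/hdfs");
        vars.put("Global.filepath", "/user/hdfs");
        vars.put("Global.lastdecimal", "10.0");

        String[] examples = {
            "@{Pipeline.filepath}",
            "@{Global.filepath}/JSON/demo.json",
            "@{Pipeline.filepath + '/JSON/demo.json'}",
            "@{Workspace.filepath + \"/JSON/demo.json\"}",
            "@{Global.lastdecimal + 4}"
        };
        for (String e : examples) {
            System.out.println(e + " -> " + resolve(e, vars));
        }
    }
}
```

Running `main` prints each expression with its resolved value, for example `@{Global.lastdecimal + 4} -> 14.0`. Numeric operands are added as `double` in this sketch, which is why the result is shown with a decimal point.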

If you have any feedback on Gathr documentation, please email us!