Advanced Kafka Emitter

Advanced Kafka Emitter stores data to Advanced Kafka cluster.

Data formats supported are JSON and DELIMITED.

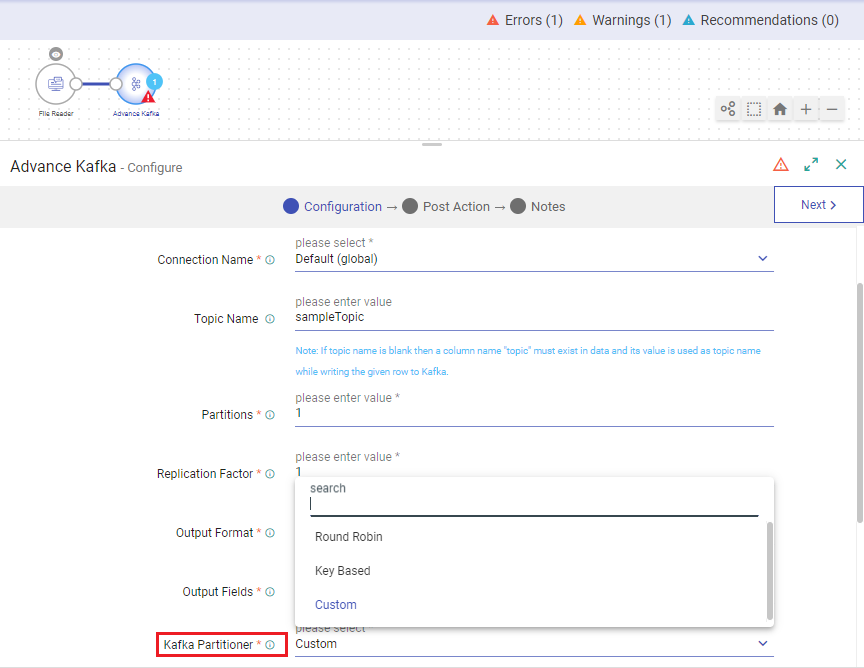

Advanced Kafka Emitter Configuration

To add an Advanced Kafka Emitter to your pipeline, drag the Advanced Kafka emitter onto the canvas, connect it to a Data Source or processor, and right-click on it to configure.

Connection Name

Select from the existing connections or navigate to the left menu to Connections Page to create a new Kafka connection. Once the connection is created, the same will be available to be selected here.

Topic Name

Specify the name of the topic to which you want to emit or send your data.

Each piece of data sent to Kafka will be associated with a specific topic, making it easier to organize and manage data streams within your Kafka cluster.

Partitions

Default value is 1. Enter how many partitions you want the Kafka topic to be divided into.

Each partition stores messages in order, like chapters in a book, making it easier to manage and process large amounts of data.

By specifying the number of partitions for a topic, you determine how Kafka distributes and manages the data within that topic.

Replication Factor

Default value is 1. Set the replication factor for the topic. It determines how many copies of each partition are stored across different Kafka brokers.

If set to 1, each partition will have only one copy. Increasing the replication factor improves fault tolerance, ensuring that data remains available even if some brokers fail.

For a topic with replication factor N, Advanced Kafka will tolerate up to N-1 failures without losing any messages committed to the log.

Output Format

Choose the data type format in which the emitted data will be structured. Options are:

JSON

Delimited

Select the format that best suits your use case.

Delimiter

Select the message field separator. This option appears when the output format is set to DELIMITED.

Output Fields

Specify the fields in the message that should be part of the output data.

Kafka Partitioner

Determine which partition within a Kafka topic a message should be written to. Partitioning mechanisms are:

Round Robin (Default): Evenly distributes messages across partitions in a round-robin fashion.

It does not consider message content but rather assigns partitions sequentially, cycling through each partition in turn.

This option ensures a balanced distribution of messages across partitions.

Key Based: In key based partitioning, the hash of a message’s key is used to map messages to partitions.

Example:

Order ID (Key) Hash Value Partition Assigned 123 1 Partition 1 456 0 Partition 0 123 (repeated) 1 Partition 1 Here:

Order ID (Key) is the unique identifier for each order.

Hash Value is a hash function applied to the order ID by Kafka.

Partition Assigned is the partition of the “orders” topic that the message will be placed into based on the hash value.

Therefore, in the given example:

When an order with ID “123” is received, its hash value is calculated to be 1. So, it is assigned to Partition 1.

Similarly, when an order with ID “456” is received, its hash value is calculated to be 0. Therefore, it is assigned to Partition 0.

If another order with ID “123” is sent later on, Kafka remembers that its hash value is 1. Hence, it will be consistently assigned to Partition 1, ensuring that all orders with the same ID are stored together.

Select the key(s) for key based partitioner and proceed with emitter configuration.

Custom: Define your own logic for partitioning messages. You can tailor the partitioning strategy to your specific needs or business requirements.

Steps to use Custom Kafka Partitioner

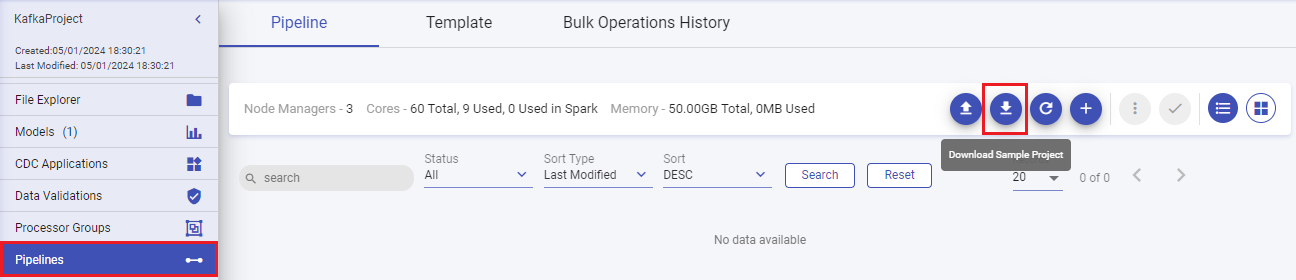

1. Download Sample Project

From your Project’s menu, navigate to the Pipelines page, and download sample project.

A zip file “sax-spark-ss-sample.zip” gets downloaded.

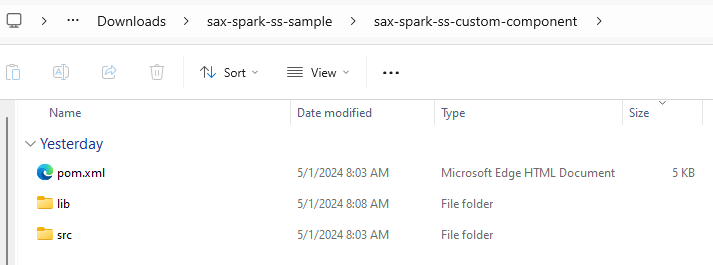

Unzip the file and navigate to the below path:

\sax-spark-ss-sample\sax-spark-ss-custom-component\src\main\java\com\yourcompany\custom\ss\partitioner

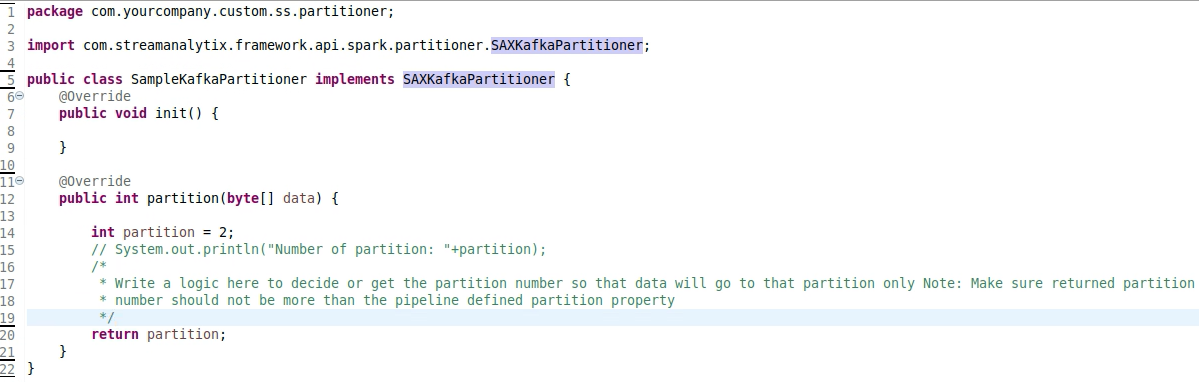

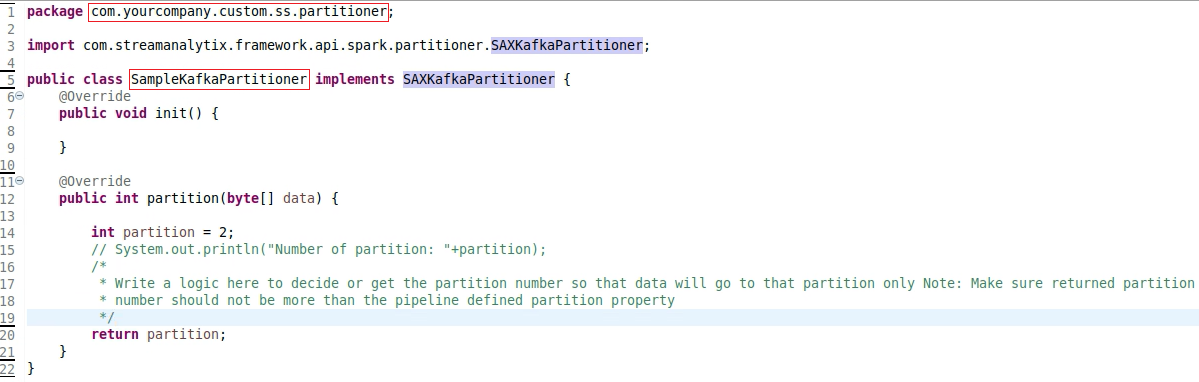

2. Write Custom Partition Logic

A file named “SampleKafkaPartitioner.java” will be available inside the partitioner directory. Open the file to write your custom partitioning code.

Custom Kafka Partitioner Example:

Save the file and close it.

Navigate to the root directory “sax-spark-ss-custom-component” and open it in any command line tool.

Now, a package needs to be generated that can be uploaded to Gathr to use the custom partitioning logic.

Run the below command in the root directory where the .pom file is kept.

Make sure that you have Java and Maven installed in your system to build the custom package. Recommended is Java 8.mvn clean installA Target folder will get created in your root directory containing a .jar file.

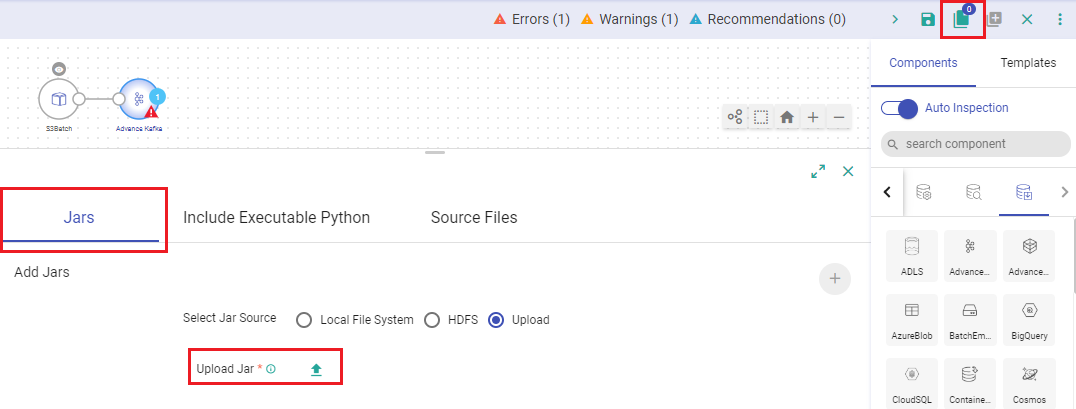

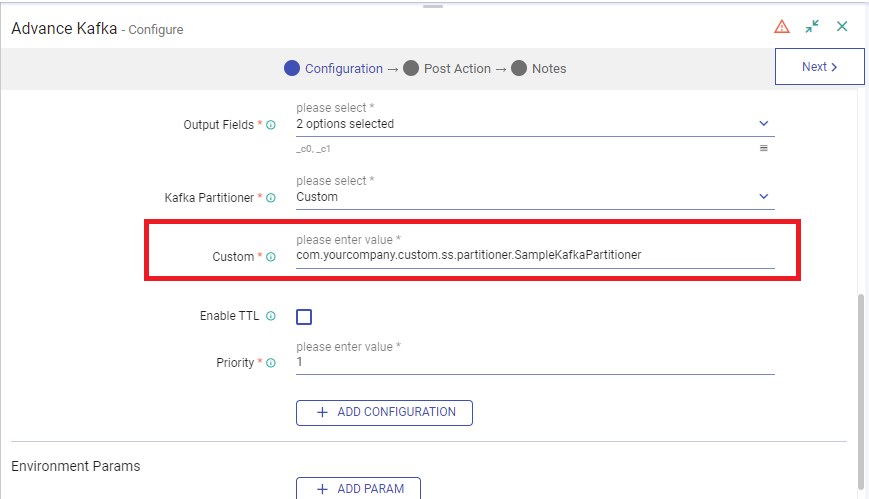

3. Use Custom Partition Logic in Gathr

Switch to Gathr’s Pipeline canvas and use the Add Artifacts option to open the Add Jars section.

Upload the JAR file containing all the dependencies.

Right-click the Advanced Kafka Emitter and open it’s configuration.

Select the “Kafka Partitioner” as Custom.

Provide the fully qualified class name of custom partition code for Kafka emitter.

For example, fully qualified class name for the below sample will be: com.yourcompany.custom.ss.partitioner.SampleKafkaPartitioner

Continue with the emitter configuration.

Enable TTL

Check this box to activate Time to Live (TTL) for records. When enabled, records will only persist in the system for a specified duration.

TTL Value

Specify the duration for which records should persist, measured in seconds.

Output Mode

Choose the output mode for writing data to the Streaming emitter:

Append: Only new rows in the streaming data will be written to the target.

Complete: All rows in the streaming data will be written to the targer every time there are updates.

Update: Only rows that were updated in the streaming data will be written to the target every time there are updates.

Enable Trigger

Define how frequently the streaming query should be executed.

Trigger Type

Choose from the following supported trigger types:

One-Time Micro-Batch: Processes only one batch of data in a streaming query before terminating the query.

Fixed Interval Micro-Batches: Runs a query periodically based on an interval in processing time.

Processing Time

This option appears when the Enable Trigger checkbox is selected. Specify the trigger time interval in minutes or seconds.

Click on the Next button. Enter the notes in the space provided.

Click on the DONE button for saving the configuration.

If you have any feedback on Gathr documentation, please email us!