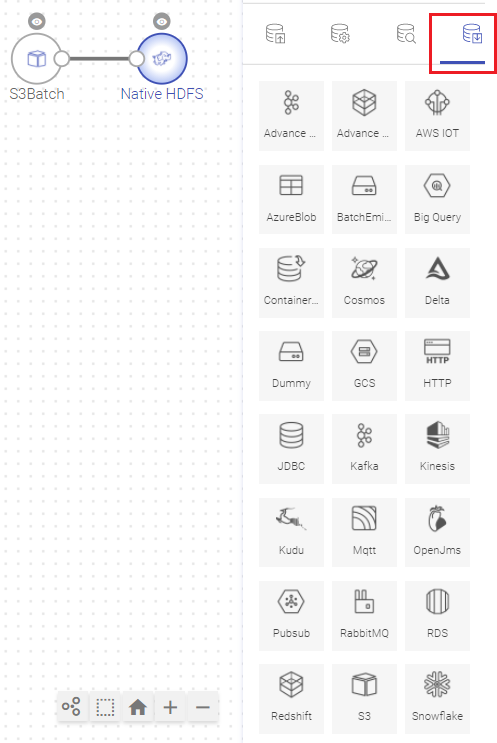

Emitters define the destination stage of a pipeline, which could be a NoSQL store, an indexer, a relational database, or a third-party BI tool.

You may want to perform certain actions after the source and channel components have executed. The emitter provides a Post-Action tab for configuring actions that run after the emitter has executed.

Note: All batch pipelines have Post-Action at the emitter.

The following options are available for post-actions on the emitter:

- If a configured action fails to execute, select the ‘Ignore Action Error’ check box so that the pipeline run is not affected.

- Select the ‘Ignore while execution’ check box to keep the action's configuration in the pipeline without executing the action.

- You can configure multiple actions by clicking the Add Action button.
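The way the two check boxes interact can be modelled in a few lines. This is a hypothetical Python sketch, not Gathr's implementation: `PostAction`, `run_post_actions`, and the field names `ignore_action_error` and `ignore_while_execution` are illustrative stand-ins for the ‘Ignore Action Error’ and ‘Ignore while execution’ options.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class PostAction:
    # Hypothetical model of one configured post-action.
    name: str
    run: Callable[[], None]
    ignore_action_error: bool = False     # 'Ignore Action Error' check box
    ignore_while_execution: bool = False  # 'Ignore while execution' check box


def run_post_actions(actions: List[PostAction]) -> List[str]:
    """Run the actions in order and return a log of what happened to each one."""
    log = []
    for action in actions:
        if action.ignore_while_execution:
            # The configuration stays in the pipeline, but the action is not executed.
            log.append(f"{action.name}: skipped")
            continue
        try:
            action.run()
            log.append(f"{action.name}: ok")
        except Exception as exc:
            if not action.ignore_action_error:
                # Without 'Ignore Action Error', the failure reaches the pipeline run.
                raise
            log.append(f"{action.name}: failed (ignored): {exc}")
    return log
```

For example, an action that raises `RuntimeError("target unreachable")` with `ignore_action_error=True` is logged as `failed (ignored)` and the remaining actions still run, while the same action without the flag stops the run.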

Add an ADLS batch or streaming data source to create a pipeline. Click the component to configure it.

Under the Schema Type tab, select Fetch From Source or Upload Data File. Edit the schema if required and click Next to configure.

Provide the following fields to configure the ADLS data source:

The Advance Kafka emitter stores data in a Kafka cluster. Supported data formats are JSON and DELIMITED.

Configuring Advance Kafka Emitter for Spark Pipelines

To add an Advance Kafka emitter to your pipeline, drag it to the canvas, connect it to a Data Source or processor, and right-click it to configure.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click DONE to save the configuration.

The Advanced Redshift emitter works only with batch datasets, which means it can be used only with a batch data source. It stages data in an S3 temp directory and then loads it into a Redshift database table.
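Staging through S3 is typically a two-step load: the data is first written as files under the S3 temp directory, and Redshift then loads those files with a `COPY` statement. The sketch below only builds such a statement to show the shape of that second step. It assumes an IAM role is used for the load; the table name, S3 path, and role ARN are hypothetical placeholders, and the source does not state exactly which `COPY` options Gathr issues.

```python
def build_copy_statement(table: str, s3_temp_dir: str, iam_role_arn: str,
                         file_format: str = "CSV") -> str:
    """Build a Redshift COPY statement that loads staged files from an S3 prefix."""
    if not s3_temp_dir.startswith("s3://"):
        raise ValueError("the temp directory must be an s3:// URI")
    for value in (table, s3_temp_dir, iam_role_arn):
        # No quoting or escaping is done here, so reject embedded quotes outright.
        if "'" in value:
            raise ValueError("single quotes are not allowed in this sketch")
    fmt = file_format.upper()
    if fmt not in ("CSV", "PARQUET"):
        raise ValueError(f"unsupported format for this sketch: {file_format}")
    # COPY loads every file under the prefix, so point it at the staging directory.
    prefix = s3_temp_dir.rstrip("/") + "/"
    return f"COPY {table} FROM '{prefix}' IAM_ROLE '{iam_role_arn}' FORMAT AS {fmt};"
```

Loading from a prefix rather than individual files lets Redshift read the staged files in parallel across its slices.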

Configuring Advanced Redshift Emitter for Spark Pipelines

To add an Advanced Redshift emitter to your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor. Right-click the emitter to configure it as explained below:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click DONE to save the configuration.

The AWS IoT emitter allows you to push data to AWS IoT topics using an MQTT client.

Configuring AWS IoT emitter for Spark pipelines

To add an AWS IoT emitter to your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click DONE to save the configuration.

The Azure Blob emitter writes data in different formats (JSON, CSV, ORC, Parquet, and more) to Blob containers at a specified directory path.

Configuring Azure Blob emitter for Spark pipelines

To add an Azure Blob emitter into your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

The configuration settings are as follows:

Click Next and enter the notes in the space provided.

Click DONE to save the configuration.

The custom batch emitter lets you write your own code to process batch data according to the logic you implement in the emitter.

If you want to use an emitter that Gathr does not provide, you can use this emitter.

For example, to store data in HDFS, you can write your own code that writes the data there.
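Conceptually, a custom emitter is a class that receives each batch of rows and writes it wherever your logic dictates. The sketch below illustrates only that idea and is not Gathr's custom emitter API, which is not described here: `BatchEmitter`, `emit`, and `LocalDirectoryEmitter` are hypothetical names, and a local directory stands in for HDFS so that the example runs without a cluster.

```python
import csv
import os
from abc import ABC, abstractmethod
from typing import Dict, List


class BatchEmitter(ABC):
    """Hypothetical interface: receives one batch of rows per call."""

    @abstractmethod
    def emit(self, batch_id: int, rows: List[Dict[str, object]]) -> None: ...


class LocalDirectoryEmitter(BatchEmitter):
    """Writes each batch to its own CSV file; a stand-in for an HDFS writer."""

    def __init__(self, directory: str, fields: List[str]):
        self.directory = directory
        self.fields = fields
        os.makedirs(directory, exist_ok=True)

    def emit(self, batch_id: int, rows: List[Dict[str, object]]) -> None:
        # One file per batch id, so re-emitting a batch overwrites it instead of duplicating it.
        path = os.path.join(self.directory, f"part-{batch_id:05d}.csv")
        with open(path, "w", newline="", encoding="utf-8") as handle:
            # Columns not listed in `fields` are dropped rather than raising an error.
            writer = csv.DictWriter(handle, fieldnames=self.fields, extrasaction="ignore")
            writer.writeheader()
            writer.writerows(rows)
```

Naming output files after the batch id is one way to keep retries idempotent; a real HDFS writer would apply the same idea to its target paths.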

Configuring Custom Emitter for Spark Pipelines

To add a custom emitter to your pipeline, drag it to the canvas, connect it to a Data Source or processor, and right-click it to configure.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Here is a small snippet of sample code that is used for writing data to HDFS.

Click Next and enter the notes in the space provided.

Click DONE to save the configuration.

The Container emitter is used to write data to Couchbase.

To add a Container emitter to your pipeline, drag the emitter to the canvas and right-click it to configure.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click NEXT. A Save as Template option becomes available. Add notes in the space provided and click Save as Template.

Choose the scope of the template (Global or Workspace) and click Done to save the template and configuration details.

The Cosmos emitter emits data into different containers of the selected Cosmos database.

The Cosmos emitter has different properties for streaming and batch Cosmos channels, as explained below:

Configuring Cosmos for Spark Pipelines

To add a Cosmos emitter to your pipeline, drag the emitter to the canvas, connect it to a Data Source or processor, and click it to configure.

Note: If the data source in the pipeline is streaming Cosmos, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

The Delta Lake emitter emits data in Delta Lake format to HDFS, S3, or DBFS.

All data in Delta Lake is stored in Apache Parquet format, enabling Delta Lake to leverage the efficient compression and encoding schemes that are native to Parquet.

Delta Lake can handle petabyte-scale tables with billions of partitions and files with ease.

Configuring Delta for Spark Pipelines

To add a Delta emitter to your pipeline, drag the emitter to the canvas, connect it to a Data Source or processor, and right-click it to configure.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Dummy emitters are required when a pipeline has a processor whose output does not need to be emitted. For example, a custom processor might index the data on Elasticsearch itself, so no emitter is needed. However, Gathr requires every pipeline to have an emitter. In such scenarios, you can use a Dummy emitter to test the processors without emitting the data through an actual emitter.

In the configuration window of the Dummy emitter, enter the value. You can click ADD CONFIGURATION to add further configurations, and then click Next.

The local file emitter saves data to the local file system, that is, the file system where Gathr is deployed.

Configure File Writer Emitter for Spark Pipelines

To add a File Writer emitter to your pipeline, drag it to the canvas, connect it to a Data Source or processor, and right-click it to configure.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

- Path where the data file will be read or saved. You can use a Scope Variable here with @. To know more, read about Scope Variables.

- Checkpoint Storage Location: Select the checkpointing storage location. Available options are HDFS, S3, and EFS.

- Checkpoint Connections: Select the connection. Connections are listed according to the selected storage location.

- Checkpoint Directory: The path where the Spark application stores the checkpointing data. For HDFS and EFS, enter a relative path such as /user/hadoop/checkpointingDir; the system adds a suitable prefix by itself. For S3, enter an absolute path such as S3://BucketName/checkpointingDir.

- Time-Based Checkpoint: Select the check box to enable a time-based checkpoint on each pipeline run, that is, in each pipeline run the checkpoint location provided above is appended with the current time in milliseconds.

- Mode in which the File Writer will run.

- Output Mode: The output mode to be used while writing the data to the streaming emitter. Select one of the following three options:
  - Append: Only the new rows in the streaming data are written to the sink.
  - Complete: All the rows in the streaming data are written to the sink every time there are updates.
  - Update: Only the rows that were updated in the streaming data are written to the sink every time there are updates.
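The checkpoint directory rules above (a relative path for HDFS and EFS, an absolute path for S3, and an optional time-based suffix) can be summarised in a small helper. This is a hypothetical sketch, not Gathr code: the `prefix` argument stands in for the prefix the system adds for HDFS and EFS (its real value depends on your cluster), and appending the milliseconds as a child directory is an assumption, since the text only says the time is appended.

```python
import time
from typing import Optional


def resolve_checkpoint_dir(storage: str, directory: str, prefix: str = "",
                           time_based: bool = False, now_ms: Optional[int] = None) -> str:
    """Hypothetical helper that applies the checkpoint directory rules."""
    storage = storage.upper()
    if storage in ("HDFS", "EFS"):
        # Relative path; the system supplies the prefix (modelled here by `prefix`).
        if "://" in directory:
            raise ValueError("enter a relative path for HDFS and EFS")
        path = prefix.rstrip("/") + "/" + directory.strip("/")
    elif storage == "S3":
        # Absolute path that already includes the bucket name.
        if not directory.lower().startswith("s3://"):
            raise ValueError("enter an absolute path such as s3://BucketName/checkpointingDir")
        path = directory.rstrip("/")
    else:
        raise ValueError(f"unsupported storage location: {storage}")
    if time_based:
        # Time-based checkpoint: append the current time in milliseconds.
        millis = now_ms if now_ms is not None else int(time.time() * 1000)
        path = f"{path}/{millis}"
    return path
```

A fresh directory per run means Spark finds no earlier offsets or state there, so each run starts without resuming from the previous one; leave the option off when runs should continue where the last one stopped.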

The HTTP emitter allows you to emit data to different APIs.

For example, suppose employee information is available in JSON, TEXT, or CSV format, and you want to update the information for an employee and also save it through the HTTP emitter. You can do this with the PUT or POST method.

Configuring HTTP Emitter for Spark pipelines

To add an HTTP emitter to your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor. The configuration settings of the HTTP emitter are as follows:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

This option specifies that the URL can be accessed without any authentication.

This option specifies that accessing the URL requires Basic Authorization. To access the URL, you need to provide a user name and password.

Token-based authentication is a security technique that authenticates users who attempt to log in to a server, a network, or another secure system, using a security token provided by the server.

OAuth2 is an authorization technique in which the application obtains a token that authorizes access to the user's account.
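At the HTTP level, these options mostly differ in the `Authorization` header that accompanies each request. The sketch below prepares, but does not send, a JSON request for one record using Python's standard `urllib.request`. Sending token-based and OAuth2 credentials as a `Bearer` token is an assumption (some APIs expect a different header), the OAuth2 access token is assumed to have been obtained beforehand, and the endpoint URL is a placeholder.

```python
import base64
import json
import urllib.request
from typing import Dict, Optional


def auth_headers(auth_type: str, username: Optional[str] = None,
                 password: Optional[str] = None, token: Optional[str] = None) -> Dict[str, str]:
    """Return the Authorization header for each authentication option (sketch)."""
    kind = auth_type.lower()
    if kind == "none":
        return {}
    if kind == "basic":
        # Basic auth: base64 of "user:password".
        credentials = f"{username}:{password}".encode("utf-8")
        return {"Authorization": "Basic " + base64.b64encode(credentials).decode("ascii")}
    if kind in ("token", "oauth2"):
        # Assumption: the token is sent as a Bearer token.
        if not token:
            raise ValueError("a token is required")
        return {"Authorization": "Bearer " + token}
    raise ValueError(f"unknown authentication type: {auth_type}")


def build_request(url: str, record: dict, method: str = "POST",
                  auth_type: str = "none", **credentials) -> urllib.request.Request:
    """Prepare (but do not send) a JSON request carrying one record."""
    headers = {"Content-Type": "application/json", **auth_headers(auth_type, **credentials)}
    body = json.dumps(record).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method=method)
```

For the employee example, `build_request("https://api.example.com/employees/42", {"id": 42}, method="PUT", auth_type="basic", username="user", password="pass")` returns a request that `urllib.request.urlopen` could then send.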

The JDBC emitter allows you to push data to relational databases such as MySQL, PostgreSQL, Oracle DB, and MS SQL.

The JDBC emitter also lets you write data to a DB2 database, for both batch and streaming pipelines.

It also provides lookup functionality for DB2, so that you can enrich fields with external data read from DB2. Select a DB2 connection while configuring the JDBC emitter.
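The connection you select supplies the database location. For reference, the sketch below shows the standard JDBC URL shapes used by each engine's driver, with their usual default ports. The host and database names are placeholders, and the source does not say whether Gathr builds URLs exactly this way.

```python
from typing import Optional

# Standard JDBC URL shapes for the engines listed above.
JDBC_URL_TEMPLATES = {
    "mysql": "jdbc:mysql://{host}:{port}/{database}",
    "postgresql": "jdbc:postgresql://{host}:{port}/{database}",
    "oracle": "jdbc:oracle:thin:@//{host}:{port}/{database}",  # database = service name
    "mssql": "jdbc:sqlserver://{host}:{port};databaseName={database}",
    "db2": "jdbc:db2://{host}:{port}/{database}",
}

# Usual default listener port for each engine.
DEFAULT_PORTS = {"mysql": 3306, "postgresql": 5432, "oracle": 1521, "mssql": 1433, "db2": 50000}


def jdbc_url(engine: str, host: str, database: str, port: Optional[int] = None) -> str:
    """Build a JDBC URL, falling back to the engine's default port."""
    key = engine.lower()
    if key not in JDBC_URL_TEMPLATES:
        raise ValueError(f"unsupported engine: {engine}")
    return JDBC_URL_TEMPLATES[key].format(host=host, port=port or DEFAULT_PORTS[key],
                                          database=database)
```

Note that SQL Server passes the database as a `databaseName` property after a semicolon, while the other drivers put it in the path.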

Configuring JDBC Emitter for Spark pipelines

To add a JDBC emitter to your pipeline, drag the JDBC emitter to the canvas and connect it to a Data Source or processor. The configuration settings of the JDBC emitter are as follows:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click NEXT and enter the notes in the space provided. Click DONE to save the configuration.

The Kafka emitter stores data in a Kafka cluster. Supported data formats are JSON and DELIMITED.

Configuring Kafka Emitter for Spark Pipelines

To add a Kafka emitter to your pipeline, drag the Kafka emitter to the canvas and connect it to a Data Source or processor. The configuration settings of the Kafka emitter are as follows:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

The Kinesis emitter emits data to an Amazon Kinesis stream. Supported output formats are JSON and Delimited.

Configure Kinesis Emitter for Spark Pipelines

To add a Kinesis emitter to your pipeline, drag the emitter to the canvas, connect it to a Data Source or processor, and right-click it to configure it.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided. Click Save to save the configuration details.

Apache Kudu is a column-oriented data store in the Apache Hadoop ecosystem. It enables fast analytics on fast (rapidly changing) data. Kudu is engineered to take advantage of modern hardware and in-memory processing, and it significantly lowers query latency compared with similar tools.

Configuring KUDU Emitter for Spark Pipelines

To add a KUDU emitter into your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor. The configuration settings are as follows:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click DONE to save the configuration.

Configuring Mongo Emitter

To add a Mongo emitter to your pipeline, drag the emitter to the canvas, connect it to a Data Source or processor, and click it to configure.

The MQTT emitter emits data to an MQTT queue or topic. Supported output formats are JSON and Delimited.

MQTT works well over wireless networks with varying levels of latency.

Configuring Mqtt Emitter for Spark Pipelines

To add an MQTT emitter to your pipeline, drag the emitter to the canvas, connect it to a Data Source or processor, and right-click it to configure it.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click the Add Notes tab and enter the notes in the space provided.

Click SAVE to save the configuration.

OpenJMS is used to send and receive messages between applications. The OpenJMS emitter writes data to JMS queues or topics. Every application subscribed to a topic receives the data published to it, whereas each message sent to a queue is read by a single receiving application.
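In JMS, the two destination types deliver messages differently: every subscriber of a topic receives each published message, while each message on a queue is consumed by exactly one receiver. The in-memory model below illustrates that difference only; it does not use the JMS or OpenJMS API, and the round-robin distribution among queue receivers is one illustrative policy, not how every broker assigns messages.

```python
from itertools import cycle
from typing import Dict, List


class Topic:
    """Publish/subscribe: every subscriber gets a copy of each message."""

    def __init__(self, subscribers: List[str]):
        self.inboxes: Dict[str, List[str]] = {name: [] for name in subscribers}

    def publish(self, message: str) -> None:
        for inbox in self.inboxes.values():
            inbox.append(message)


class Queue:
    """Point-to-point: each message goes to exactly one receiver."""

    def __init__(self, receivers: List[str]):
        self.inboxes: Dict[str, List[str]] = {name: [] for name in receivers}
        self._next_receiver = cycle(receivers)  # round-robin is an illustrative choice

    def send(self, message: str) -> None:
        self.inboxes[next(self._next_receiver)].append(message)
```

Publishing two messages to a topic with subscribers `a` and `b` gives both of them both messages; sending three messages to a queue with the same two receivers delivers each message once in total.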

Configuring OpenJms Emitter for Spark Pipelines

To add an OpenJMS emitter to your pipeline, drag the emitter to the canvas, connect it to a Data Source or processor, and right-click it to configure it.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click SAVE to save the configuration details.

The RabbitMQ emitter is used when you want to write data to a RabbitMQ cluster.

Supported data formats are JSON and DELIMITED (CSV, TSV, PSV, etc.).
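The two formats differ in how a record becomes a message payload. A minimal sketch, assuming one message per record and a user-chosen field order; `serialize_record` is a hypothetical helper, and the exact quoting rules Gathr applies to delimited output are not stated here (this sketch uses Python's `csv` module, which quotes values that contain the delimiter).

```python
import csv
import io
import json
from typing import Dict, List


def serialize_record(record: Dict[str, object], fields: List[str],
                     fmt: str = "JSON", delimiter: str = ",") -> str:
    """Turn one record into a message payload in the chosen output format."""
    fmt = fmt.upper()
    if fmt == "JSON":
        # Keep only the selected fields, in the selected order.
        return json.dumps({field: record.get(field) for field in fields})
    if fmt == "DELIMITED":
        buffer = io.StringIO()
        # csv.writer quotes values containing the delimiter; missing values become "".
        writer = csv.writer(buffer, delimiter=delimiter, lineterminator="")
        writer.writerow([record.get(field) for field in fields])
        return buffer.getvalue()
    raise ValueError(f"unsupported format: {fmt}")
```

The delimiter selects the flavour: `","` for CSV, `"\t"` for TSV, and `"|"` for PSV. Unlike JSON, a delimited payload carries no field names, so the consumer must know the field order.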

Configuring RabbitMQ Emitter for Spark Pipelines

To add a RabbitMQ emitter to your pipeline, drag the RabbitMQ emitter to the canvas and connect it to a Data Source or processor. Right-click the emitter to configure it as explained below:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click NEXT and enter the notes in the space provided. Click SAVE to save the configuration details.

The RDS emitter allows you to write to a secured or unsecured RDS DB engine. RDS (Relational Database Service) is a managed relational database service in the cloud.

Configuring RDS Emitter for Spark Pipelines

To add an RDS emitter into your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

The configuration settings are as follows:

The Redshift emitter works with both streaming and batch datasets. It pushes data into Redshift tables.

Configuring Redshift Emitter for Spark Pipelines

To add a Redshift emitter to your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor. Right-click the emitter to configure it as explained below:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Amazon S3 stores data as objects within resources called buckets. The S3 emitter stores objects in an Amazon S3 bucket.

Configuring S3 Emitter for Spark Pipelines

To add an S3 emitter to your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor. Right-click the emitter to configure it as explained below:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

You can use the Snowflake cloud-based data warehouse as an emitter in ETL pipelines. Configure it as shown below:

Note: You can add further configurations by clicking the +Add Configuration option.

Note: After you configure the table and click Next, the mapping of the table columns is displayed under the Mapping tab.

From the top right of the mapping window, you can select the auto-fill option, and download or upload the column mappings.

The SQS emitter allows you to emit streaming data into SQS queues.

Configuring SQS Emitter for Spark Pipelines

To add an SQS emitter into your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor. The configuration settings are as follows:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

The Custom Streaming emitter is based on an action provided by Spark Structured Streaming. It lets you supply a custom implementation for processing streaming data, which the emitter then executes.

Configuring CustomStreamingEmitter for Spark Pipelines

To add a CustomStreamingEmitter to your pipeline, drag the CustomStreamingEmitter to the canvas, connect it to a Data Source or processor, and right-click it to configure.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click Done to save the configuration.

The Streaming emitter enables you to visualize the data flowing through the pipeline on the built-in real-time dashboard.

For example, you can use the Streaming emitter to view real-time price fluctuations of stocks.

Click Next and enter the notes in the space provided. Click Done to save the configuration details.

The Advance HDFS emitter allows you to add a rotation policy to the emitter.
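A rotation policy decides when the emitter closes the current file and starts a new one. The sketch below assumes two common triggers, a size limit and an age limit; `RotationPolicy` and `RotatingWriter` are hypothetical names, the policies Gathr actually offers are not listed here, and the "files" are in-memory lists rather than HDFS files.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RotationPolicy:
    """Hypothetical policy: start a new file once a size or age limit is reached."""
    max_bytes: Optional[int] = None      # rotate once the current file holds this many bytes
    max_seconds: Optional[float] = None  # rotate once the current file is this old

    def should_rotate(self, current_bytes: int, opened_at: float, now: float) -> bool:
        if self.max_bytes is not None and current_bytes >= self.max_bytes:
            return True
        return self.max_seconds is not None and now - opened_at >= self.max_seconds


class RotatingWriter:
    """In-memory stand-in for an HDFS writer: each 'file' is a list of records."""

    def __init__(self, policy: RotationPolicy):
        self.policy = policy
        self.files: List[List[str]] = [[]]
        self.current_bytes = 0
        self.opened_at = 0.0

    def write(self, record: str, now: float) -> None:
        # Check the policy before writing, and never rotate away from an empty file.
        if self.files[-1] and self.policy.should_rotate(self.current_bytes, self.opened_at, now):
            self.files.append([])
            self.current_bytes = 0
        if not self.files[-1]:
            self.opened_at = now  # a file counts as opened when it receives its first record
        self.files[-1].append(record)
        self.current_bytes += len(record.encode("utf-8"))
```

Because the check runs before each write, a file can exceed `max_bytes` by up to one record's size; checking before writing keeps records whole instead of splitting them across files.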

Configuring Advance HDFS Emitter for Spark Pipelines

To add an Advance HDFS emitter into your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor. The configuration settings are as follows:

The Cassandra emitter allows you to store data in a Cassandra table.

Configuring Cassandra emitter for Spark pipelines

To add a Cassandra emitter into your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

The configuration settings are as follows:

Note: Append output mode should only be used if an Aggregation Processor with watermarking is used in the data pipeline.
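This note reflects how Spark Structured Streaming treats aggregations: in Append mode an aggregate row may be written only once it can no longer change, and the watermark is what tells the engine that a time window is complete (without a watermark, Spark rejects Append mode for streaming aggregations). The simplified model below counts events in 10-unit windows and shows what each output mode writes after every micro-batch. It is not Spark code; the window size, the 5-unit watermark delay, and the simplifications (the watermark is updated within the same batch, and late events are not dropped) are assumptions for illustration.

```python
from typing import Dict, List


def windowed_counts(batches: List[List[int]], mode: str,
                    window: int = 10, delay: int = 5) -> List[Dict[int, int]]:
    """Return what a windowed count writes to the sink after each micro-batch.

    Each batch is a list of event times; windows are keyed by their start time.
    """
    counts: Dict[int, int] = {}  # running count per window (the aggregation state)
    emitted = set()              # windows already written in append mode
    max_event_time = None
    outputs = []
    for batch in batches:
        changed = set()
        for event_time in batch:
            start = event_time - event_time % window
            counts[start] = counts.get(start, 0) + 1
            changed.add(start)
            max_event_time = event_time if max_event_time is None else max(max_event_time, event_time)
        # Watermark: the engine assumes no event older than this will still arrive.
        watermark = None if max_event_time is None else max_event_time - delay
        if mode == "complete":
            out = dict(counts)                     # the whole result table, every time
        elif mode == "update":
            out = {w: counts[w] for w in changed}  # only rows changed by this batch
        elif mode == "append":
            # Only windows that are final (end <= watermark) and not yet written.
            out = {w: counts[w] for w in sorted(counts)
                   if watermark is not None and w + window <= watermark and w not in emitted}
            emitted.update(out)
        else:
            raise ValueError(f"unknown output mode: {mode}")
        outputs.append(out)
    return outputs
```

With the batches `[[1, 2, 12], [3, 14], [27]]`, Append writes nothing after the first two batches and `{0: 3, 10: 2}` after the third, once the watermark (27 - 5 = 22) has passed the end of both windows. Update writes only `{20: 1}` after the third batch, and Complete rewrites all three windows.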

The HBase emitter stores streaming data in HBase, which provides quick random access to huge amounts of structured data.

Configure Hbase Emitter for Spark Pipelines

To add an HBase emitter to your pipeline, drag it to the canvas, connect it to a Data Source or processor, and right-click it to configure.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click DONE to save the configuration.

The Hive emitter allows you to store streaming or batch data in HDFS. You can run Hive queries to retrieve the stored data.

To configure a Hive emitter, provide the database name and table name, along with the list of schema fields to be stored. The data rows are stored in the Hive table, in the specified format, inside the provided database.

You must have the necessary permissions to create table partitions and to write to partitioned tables.
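Partitioned Hive tables store each partition in its own `column=value` directory under the table location, so creating a partition means creating a new directory (and registering it in the metastore); this is one reason the permissions above matter. The sketch below shows that layout. The table location `/user/hive/warehouse/sales.db/orders` and the column names are hypothetical, and real Hive also escapes special characters in partition values, which this sketch omits.

```python
from collections import defaultdict
from typing import Dict, List


def partition_path(table_location: str, partition_cols: List[str], row: Dict[str, object]) -> str:
    """Directory that holds `row` in a Hive-style partitioned table."""
    segments = [f"{col}={row[col]}" for col in partition_cols]
    return "/".join([table_location.rstrip("/")] + segments)


def group_by_partition(table_location: str, partition_cols: List[str],
                       rows: List[Dict[str, object]]) -> Dict[str, List[Dict[str, object]]]:
    """Group rows by target partition directory.

    Partition values live in the directory names, so they are dropped from the stored rows.
    """
    groups: Dict[str, List[Dict[str, object]]] = defaultdict(list)
    for row in rows:
        path = partition_path(table_location, partition_cols, row)
        groups[path].append({k: v for k, v in row.items() if k not in partition_cols})
    return dict(groups)
```

Rows for `year=2024` and `month=5` land in `.../orders/year=2024/month=5`, so queries that filter on those columns only need to read the matching directories.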

Configuring Hive Emitter for Spark Pipelines

To add a Hive emitter to your pipeline, drag it to the canvas, connect it to a Data Source or processor, and right-click it to configure.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click DONE after entering all the details.

The HDFS emitter stores data in the Hadoop Distributed File System (HDFS).

To configure a Native HDFS emitter, provide the HDFS directory path along with the list of schema fields to be written. These field values are stored in HDFS file(s), in the specified format, inside the provided HDFS directory.

Configuring Native HDFS for Spark Pipelines

To add a Native HDFS emitter to your pipeline, drag the emitter to the canvas, connect it to a Data Source or processor, and right-click it to configure it. You can also save it as a Dataset.

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Note: In case of multi-level JSON, multi-array testing is done.

Each output format you select displays fields specific to that format. For example, if you select Delimited, a Delimiter field appears; select the delimiter accordingly.

Limitation: This emitter works only for batch pipelines. Partitioning does not work when the output format is XML.

Click NEXT and enter the notes in the space provided. Click SAVE to save the configuration details.

The Elasticsearch emitter allows you to store data in Elasticsearch indexes.

Configuring Elasticsearch Emitter for Spark Pipelines

To add an Elasticsearch emitter into your pipeline, drag the emitter to the canvas and connect it to a Data Source or processor. The configuration settings are as follows:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

Click Next and enter the notes in the space provided.

Click DONE to save the configuration.

The Salesforce channel allows you to read data from a Salesforce account. You can parse Salesforce data from the source itself or import a file in any supported data type format except Parquet. Salesforce is a CRM application built on the Force.com platform. It can manage all the customer interactions of an organization through different media, such as phone calls, site email inquiries, communities, and social media. The channel reads the Salesforce object specified by a Salesforce Object Query Language (SOQL) query.

However, there are a few prerequisites.

First, you need to create a Salesforce connection, for which you require the following:

- A valid Salesforce account.

- User name of the Salesforce account.

- Password of the Salesforce account.

- Security token of the Salesforce account.

Configuring Salesforce Data Source

To add a Salesforce Data Source to your pipeline, drag the Data Source to the canvas and right-click it to configure.

Under the Schema Type tab, select Fetch from Source or Upload Data File.

Now, select a Salesforce connection and write a query to fetch any Salesforce object. Then provide an API version.

The Solr emitter allows you to store data in Solr indexes. Indexing is done to increase the speed and performance of search queries.

Configuring Solr Emitter for Spark Pipelines

To add a Solr emitter to your pipeline, drag it to the canvas and connect it to a Data Source or processor. The configuration settings of the Solr emitter are as follows:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.

The Vertica emitter supports Oracle, Postgres, MySQL, MSSQL, and DB2 connections.

You can configure and connect to the above-mentioned database engines using JDBC. After you configure the JDBC channel, the emitter lets you emit data from your pipeline into DB2 and other databases in batches.

Note: This is a batch component.

To use DB2, first create a working DB2 connection.

Configuring Vertica Emitter for Spark Pipelines

To add a Vertica emitter to your pipeline, drag it to the canvas and connect it to a Data Source or processor. The configuration settings of the Vertica emitter are as follows:

Note: If the data source in the pipeline has a streaming component, the emitter shows four additional properties: Checkpoint Storage Location, Checkpoint Connections, Checkpoint Directory, and Time-Based checkpoint.